
- H3C UniServer B16000 Blade Server Configuration Examples-6W100

- 01-FC and FCoE Services Configuration Examples


Contents

Example: Configuring FC (connecting FC switch module to FC switch)

Configuring the mezzanine card

Configuring the Brocade switch

Configuring the interconnect module

Example: Configuring FC (uplinking multiple ports of the FC switch module to FC switch)

Querying the port connection relation between mezzanine cards and interconnect modules

Configuring the mezzanine card

Configuring the interconnect module

Configuring the Brocade switch

Verifying load balancing of ports on FC switch modules

Accessing virtual volumes by the system through multiple paths

Example: Configuring FC (uplinking the aggregation interfaces of FC switch modules to FC switches)

Query the port connection between mezzanine cards and interconnect modules

Configuring the mezzanine card

Configuring the interconnect module

Verifying the configuration of the FC aggregation group

Accessing virtual volumes by the system through multiple paths

Example: Configuring FC (directly connecting FC switch modules to the 3Par storage)

Configuring the mezzanine card

Configuring the interconnect module

Example: Configuring FCoE (connecting aggregation interconnect modules to FC switches)

Configuring the mezzanine card

Configuring the interconnect module

Configuring the Brocade switch

Configuring the mezzanine card

Configuring the aggregation interconnect module

Configuring the H3C S6800 switch

Verifying the configuration of the aggregation group

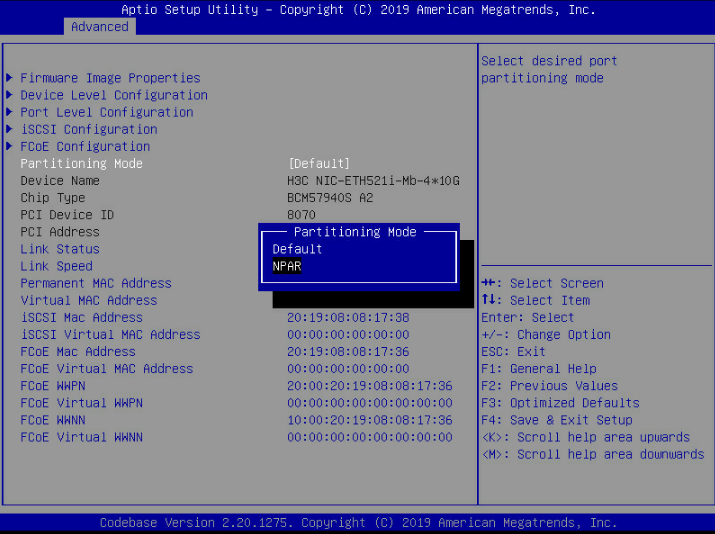

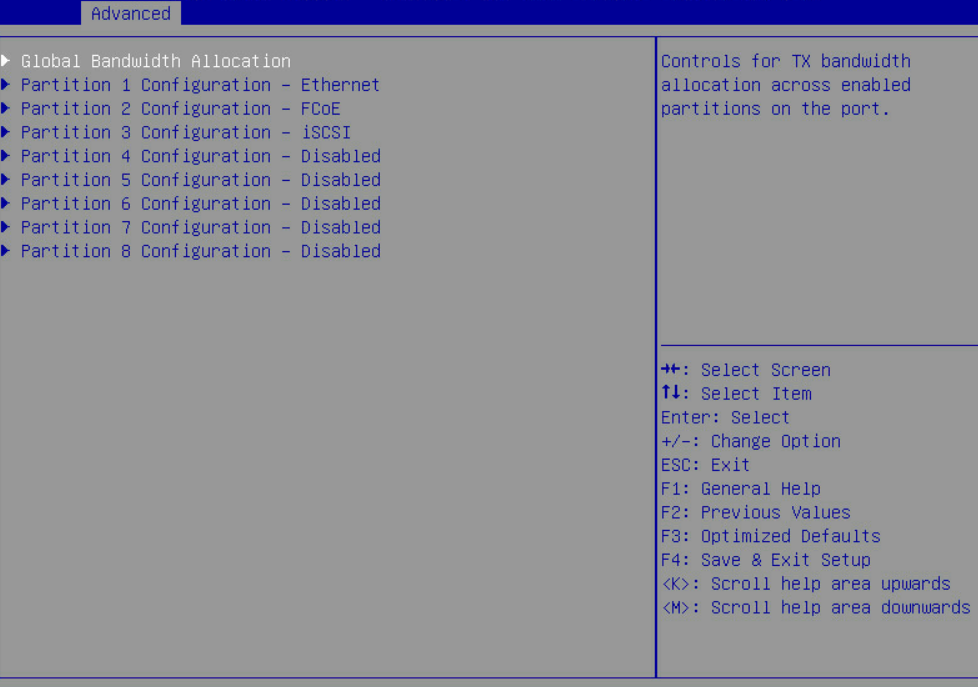

Enabling the NPAR function of the mezzanine card

Configuring the mezzanine card

Configuring storage services of the aggregation switch module

Configuring the Brocade switch

Configuring Ethernet services of the aggregation switch module

Configuring the aggregation switch

Example: Configuring FCoE (directly connecting the aggregation interconnect modules to 3Par storage)

Configuring the mezzanine card

Configuring the interconnect module

Example: Configuring FCoE (pass-through modules)

Configuring the mezzanine card

Example: Configuring FC pass-through modules (connecting FC pass-through module to FC switch)

Configuring the mezzanine card

Configuring the Brocade switch

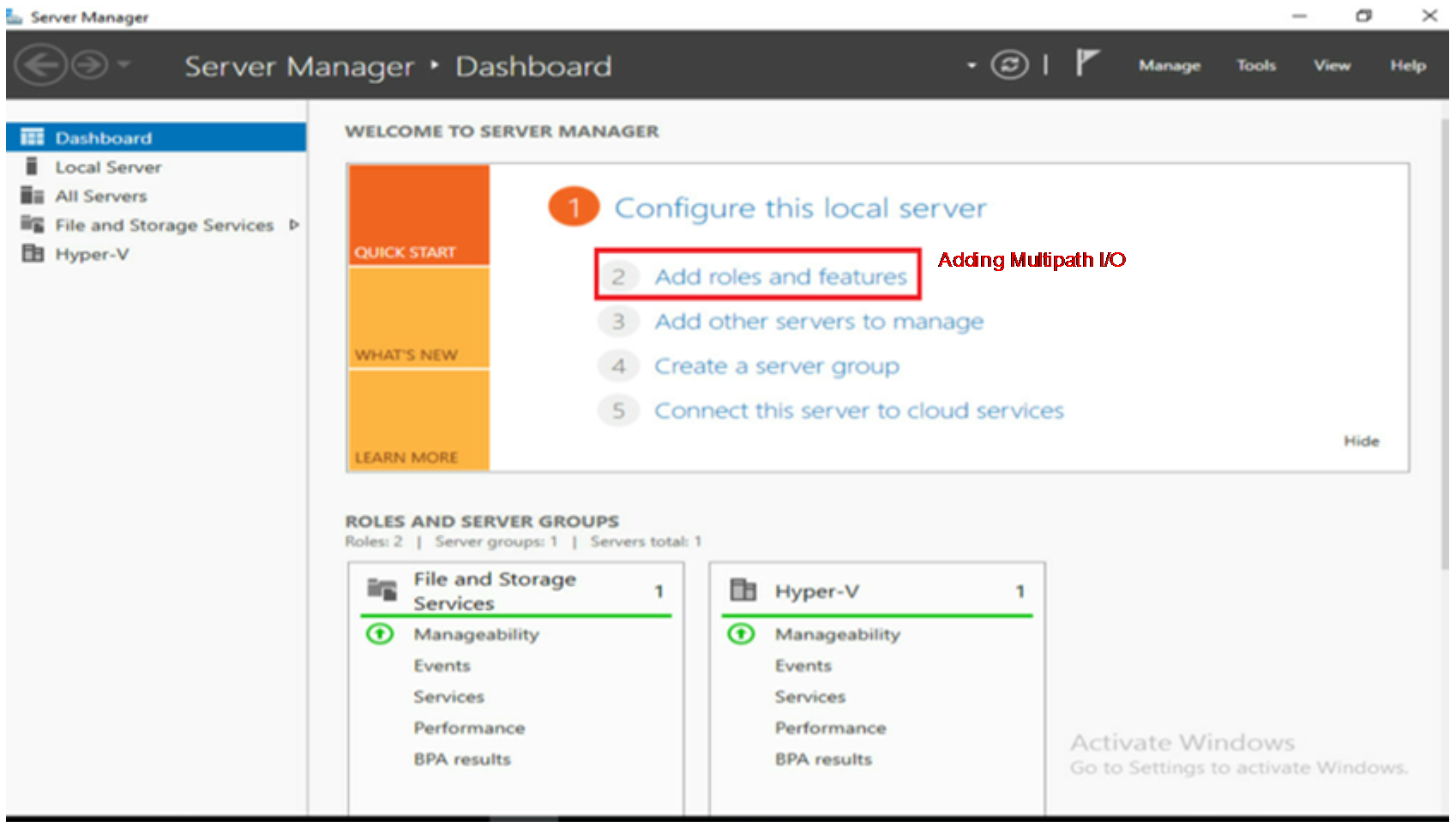

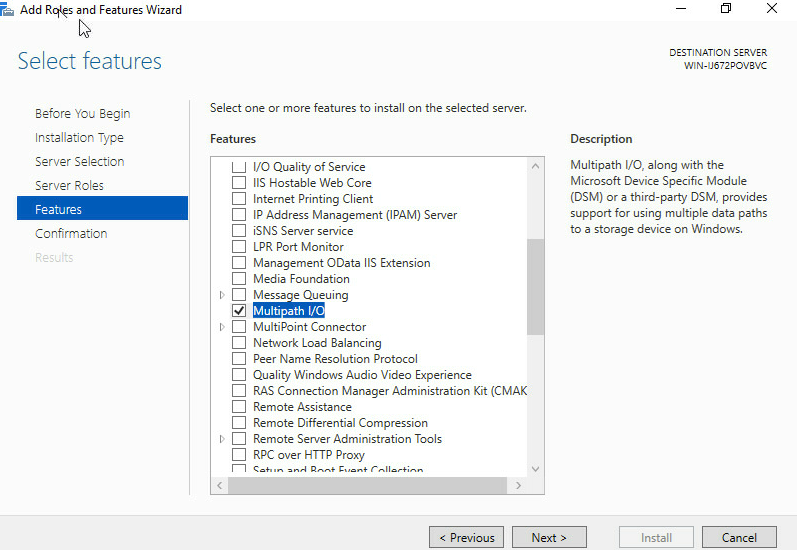

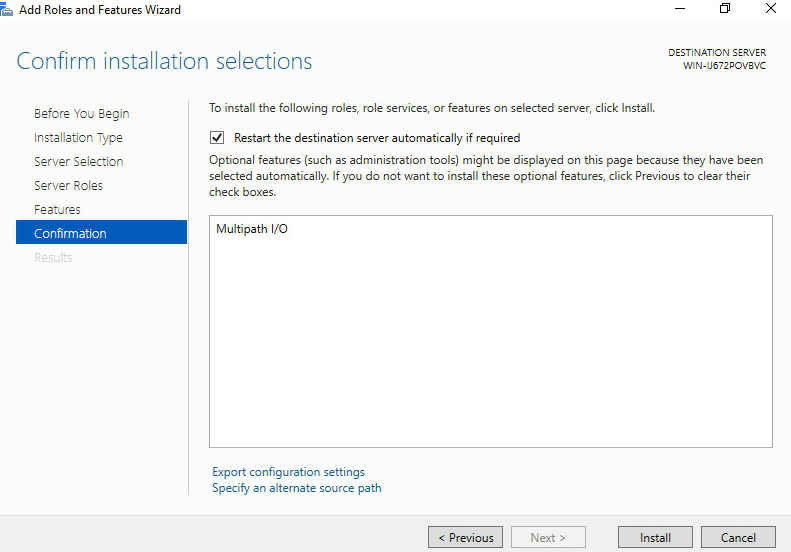

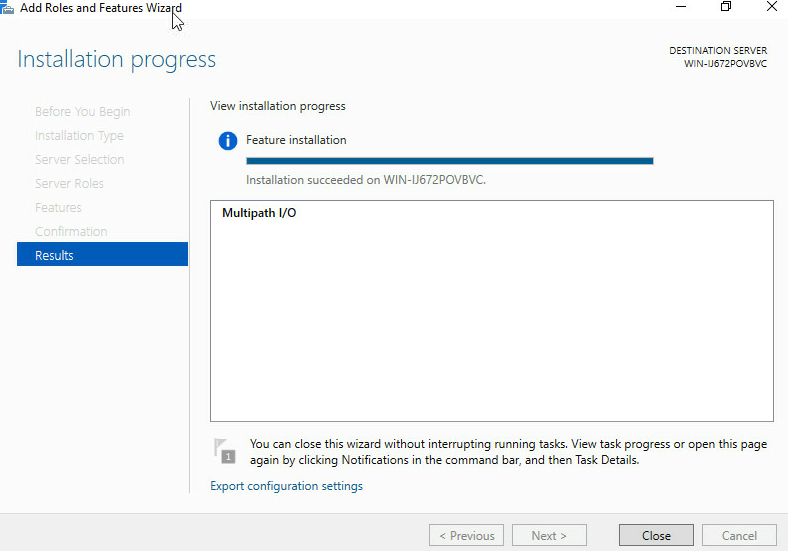

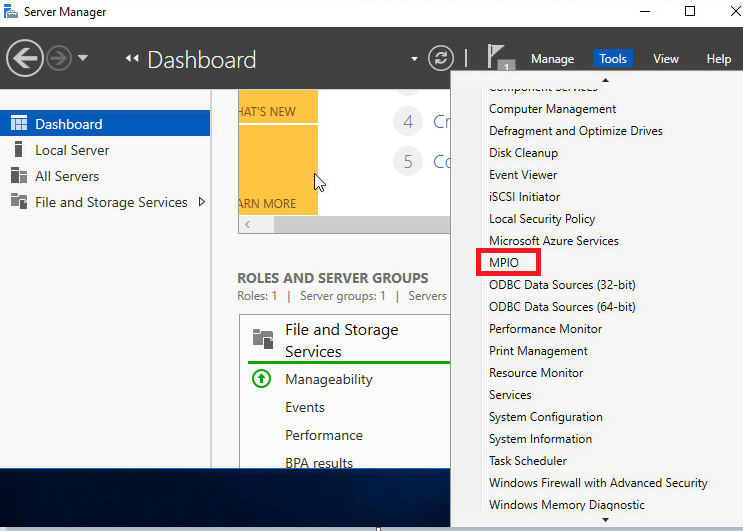

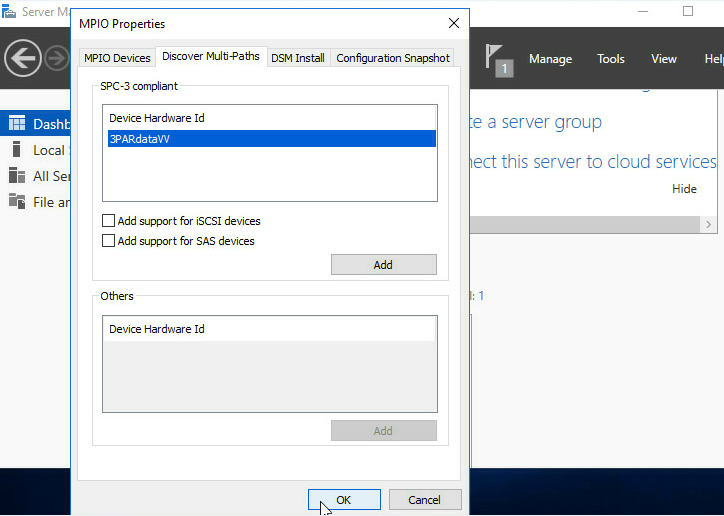

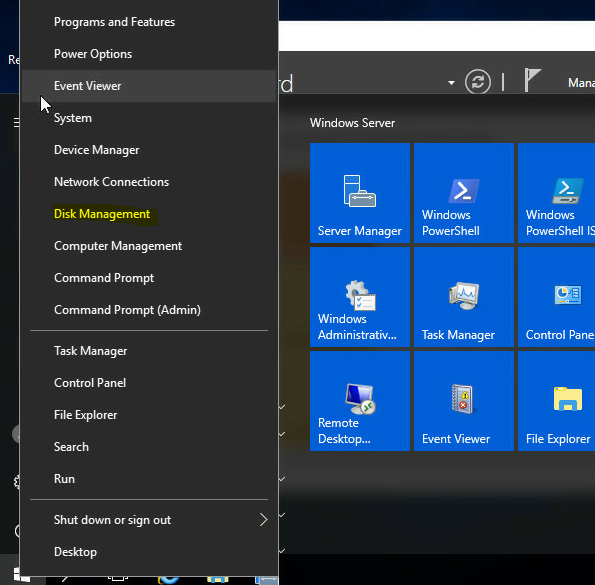

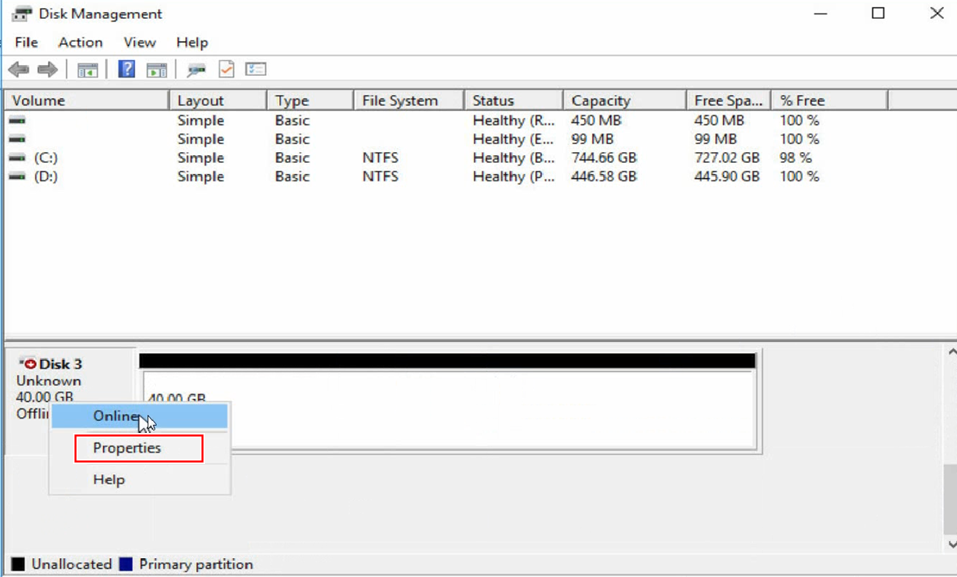

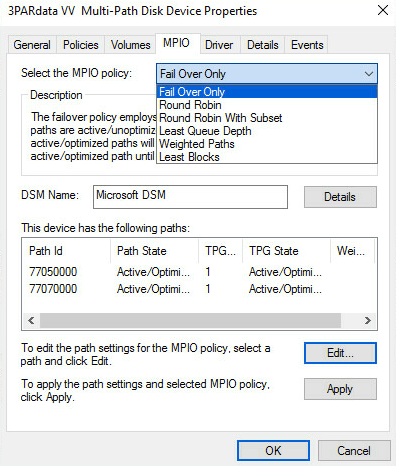

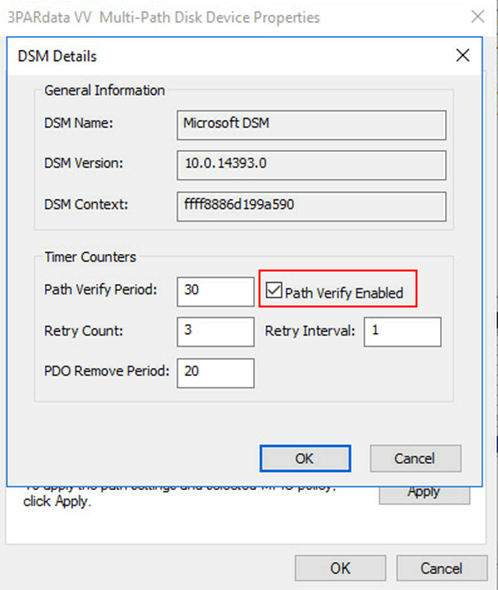

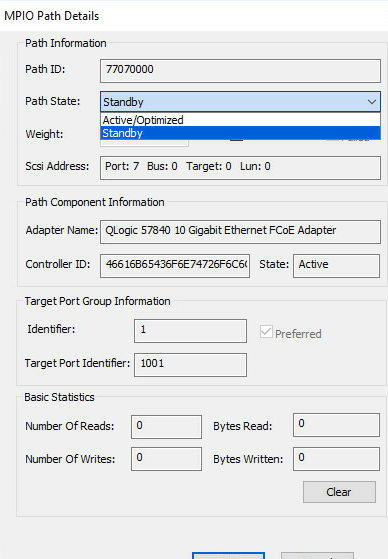

Configuring multipath and adding network disks on Windows Server

Compatibility between the storage of different models and the blade server

Introduction

The following information provides examples for configuring FC and FCoE services for the H3C UniServer B16000 blade server chassis.

Hardware compatibility

Table 1 lists the hardware to be used in typical networking modes. If there are multiple models in a cell, the hardware of these models is appropriate for this configuration.

IMPORTANT: Red Hat 8.x removed the modules that support FCoE. Therefore, if Red Hat 8.x is installed as the operating system (OS) of the server, the FCoE function of the mezzanine card is unavailable.

Table 1 Hardware compatibility

Prerequisites

The configuration examples were created and verified in a lab environment, and all the devices were started with the factory default configuration. When you are working on a live network, make sure you understand the potential impact of every command on your network.

The following information is provided based on the assumption that you have basic knowledge of FC, FCoE, port aggregation features, H3C blade servers, interconnect modules, Windows/Linux/ESXi/CAS operating systems, Brocade switches, and 3Par storage.

The following information mainly describes the procedure for configuring the blade server chassis. As a best practice, configure external network settings as needed.

Example: Configuring FC (connecting FC switch module to FC switch)

Network requirement

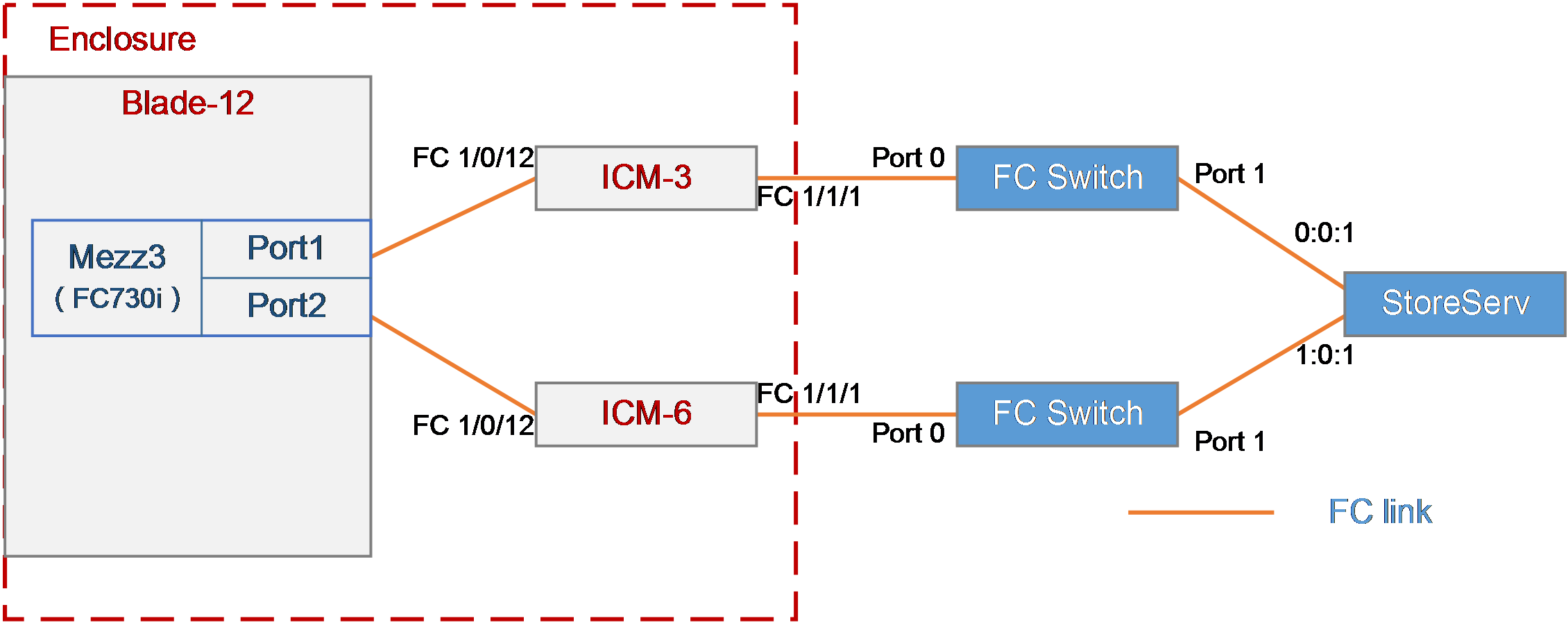

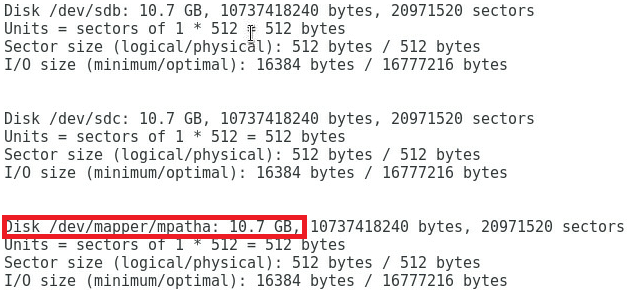

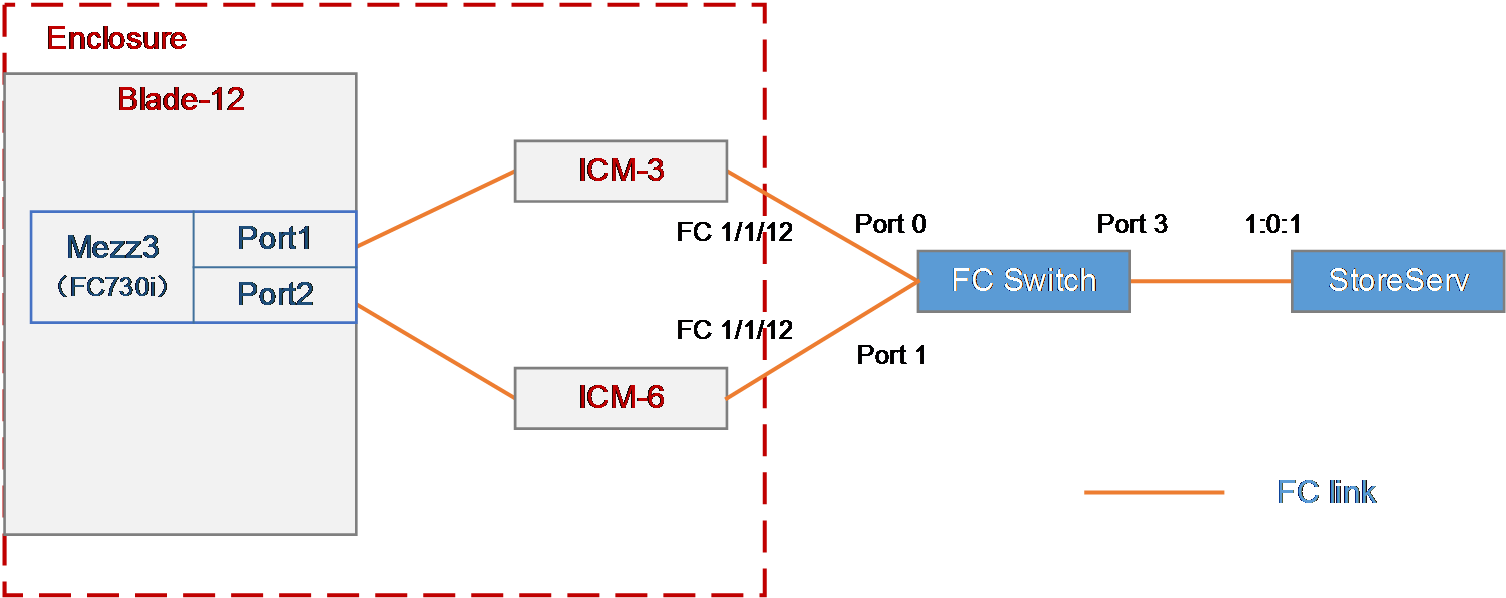

As shown in Figure 1, a blade server and two FC switch modules are installed in the H3C B16000 blade server chassis. The blade server is installed in slot 12, the two FC switch modules are installed in slot 3 and slot 6, and the mezzanine card is installed in the mezzanine card slot 3 of the blade server. The FC 1/1/1 port of each of the two FC switch modules is connected to an FC switch, and the FC switch is connected to the FC storage device through the 0:0:1 port.

In this example, the following devices and modules are used: blade server (H3C UniServer B5700 G3), mezzanine card (NIC-FC730i-Mb-2*32G, "FC730i"), FC switch module (BX608FE), FC switch (Brocade 6510), and FC storage device (H3C CF8840 from the HPE 3Par StoreServ family).

The following requirements are expected to be met:

The OS on the blade server can mount storage volumes on the 3Par to realize remote storage, and data on the 3Par can be accessed through the OS when any interconnect module fails.

Figure 1 Network diagram between FC switch module (NPV mode) and FC switch

Analysis

· To mount a 3Par storage volume under the OS, build an FC link between the blade server and the 3Par storage, and create a storage volume on the 3Par storage. Then, the OS can automatically identify the storage volume.

· In this example, the FC switch module is directly connected to the FC switch, and then connects to the 3Par storage through the FC switch. In this case, you need to configure the mode of the two FC switch modules to NPV.

· To ensure service continuity when any interconnect module fails, you need to configure the multipath function under the OS to improve network reliability.

· As a best practice, connect two interconnect modules to two different FC switches respectively for link redundancy, thus avoiding network disconnection caused by FC switch failure.

Software versions used

This example was created and verified on versions SWITCH_SYS-1.00.11 and OM-1.00.11 of interconnect modules.

Configuration precautions

· Before adding a storage volume, ensure that the blade server has installed the OS and the appropriate network adapter driver, so that the OS can identify the FC730i network adapter correctly. For details about installing the OS, see H3C Servers Operating System Installation Guide. For details about installing the network adapter driver, see FC730i Mezzanine Card Module User Guide.

· After mounting the storage volumes and replacing existing storage volumes on the 3Par with new ones, reboot the OS of the blade server to identify the new volumes.

Procedures

Querying port information

Query the connection relationship between the internal ports of the mezzanine card and interconnect modules according to "Querying port relations."

It can be seen that the FC 1/0/12 ports of the interconnect module 3 and interconnect module 6 are used in this example.

Configuring the mezzanine card

In the FC networking, the FC mezzanine card can be used correctly after being installed in place. There are no other special requirements in this example, so you do not need to configure the mezzanine card.

Configuring the Brocade switch

In this example, the Brocade switch uses the default settings. Ensure that Port 0 and Port 1 reside in the same FC zone.

Configuring the interconnect module

Configuring interconnect module 3

IMPORTANT: The internal port of the BX608FE interconnect module is in the shutdown state by default. Therefore, when configuring the module for the first time or restoring the default configuration file, execute the undo shutdown command to enable the corresponding port before configuring. Determine the internal port to which the mezzanine card port is connected according to "Querying port relations."

# Configure the FCoE mode of the switch to NPV.

<H3C> system-view

[H3C] fcoe-mode npv

# Create the VSAN. In this example, create VSAN 1.

[H3C] vsan 1

[H3C-vsan1] quit

# Create the VLAN to map VSAN. In this example, create VLAN 10, enable the FCoE function on VLAN 10, and map the VLAN to VSAN 1.

[H3C] vlan 10

[H3C-vlan10] fcoe enable vsan 1

[H3C-vlan10] quit

# Configure the port connected to the Brocade switch to operate in the NP mode.

[H3C] interface fc1/1/1

[H3C-Fc1/1/1] fc mode np

[H3C-Fc1/1/1] quit

# Save the configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

Configuring interconnect module 6

IMPORTANT: The internal port of the BX608FE interconnect module is in the shutdown state by default. Therefore, when configuring the module for the first time or restoring the default configuration file, execute the undo shutdown command to enable the corresponding port before configuring. Determine the internal port to which the mezzanine card port is connected according to "Querying port relations."

# Configure the FCoE mode of the switch to NPV.

<H3C> system-view

[H3C] fcoe-mode npv

# Create the VSAN. In this example, create VSAN 2.

[H3C] vsan 2

[H3C-vsan2] quit

# Create the VLAN to map VSAN. In this example, create VLAN 20, enable the FCoE function on VLAN 20, and map the VLAN to VSAN 2.

[H3C] vlan 20

[H3C-vlan20] fcoe enable vsan 2

[H3C-vlan20] quit

# Configure the port connected to the Brocade switch to operate in NP mode, and add it to VSAN 2.

[H3C] interface fc1/1/1

[H3C-Fc1/1/1] fc mode np

[H3C-Fc1/1/1] port access vsan 2

[H3C-Fc1/1/1] quit

# Add the internal port to VSAN 2.

[H3C] interface fc1/0/12

[H3C-Fc1/0/12] port access vsan 2

[H3C-Fc1/0/12] quit

# Save the configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):
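The command sequence for an FC switch module in NPV mode follows a fixed pattern, so it can be generated rather than typed by hand. The following Python sketch is illustrative only: the function name `npv_module_commands` and its parameters are hypothetical, and it emits only the commands that appear in this document, using the `port access vsan` form from the later examples. Review the output before pasting it into a device.

```python
def npv_module_commands(vsan, vlan, uplinks, downlinks):
    """Return the command lines for one FC switch module in NPV mode.

    Hypothetical helper: it only reproduces the command pattern shown in
    this example and does not talk to any device.
    """
    cmds = [
        "system-view",
        "fcoe-mode npv",
        f"vsan {vsan}",
        "quit",
        f"vlan {vlan}",
        f"fcoe enable vsan {vsan}",
        "quit",
    ]
    # Uplink ports (toward the FC switch) operate in NP mode.
    for port in uplinks:
        cmds += [f"interface {port}", "fc mode np",
                 f"port access vsan {vsan}", "quit"]
    # Internal ports (toward the mezzanine card) only join the VSAN here.
    for port in downlinks:
        cmds += [f"interface {port}", f"port access vsan {vsan}", "quit"]
    cmds.append("save")
    return cmds


# Values taken from the interconnect module 6 steps in this example.
module6 = npv_module_commands(2, 20, ["fc1/1/1"], ["fc1/0/12"])
print("\n".join(module6))
```

The `save` command still prompts for confirmation and a file name on the device, so the generated list is a checklist rather than a script that can be replayed unattended.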

Querying the WWN number of the mezzanine card

Execute the display npv login command on the FC switch module to view the registration information about the node device (mezzanine card) connected to the downlink port of the FC switch module and the mapped uplink port. FC switch module 3 is used as an example:

<H3C>display npv login

Server External

Interface VSAN FCID Node WWN Port WWN Interface

Fc1/0/12 1 0x020101 20:00:f4:e9:D4:53:f1:c5 21:00:f4:e9:D4:53:f1:c5 Fc1/1/1

Table 2 Description of the information displayed by executing the display npv login command

| Field | Description |
|---|---|
| Server Interface | The name of the downlink port |
| VSAN | The VSAN number |
| FCID | The FC address of the node |
| Node WWN | The WWN of the node |
| Port WWN | The WWN of the node port |
| External Interface | The name of the uplink port to which the downlink port is mapped |
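When the WWNs have to be copied into the storage configuration, it can help to parse the display npv login output instead of reading it by eye. The following Python sketch is a minimal example under stated assumptions: the names `NpvLogin` and `parse_npv_login` are hypothetical, and the parser assumes that each data row has exactly the six whitespace-separated fields described in Table 2, as in the sample output above.

```python
from typing import NamedTuple


class NpvLogin(NamedTuple):
    server_interface: str    # downlink port
    vsan: int
    fcid: str
    node_wwn: str
    port_wwn: str
    external_interface: str  # mapped uplink port


def parse_npv_login(text):
    """Parse the rows of 'display npv login' output into NpvLogin records."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        # Data rows have six fields and an FCID that starts with "0x";
        # the prompt line and the two header lines do not match.
        if len(fields) == 6 and fields[2].lower().startswith("0x"):
            rows.append(NpvLogin(fields[0], int(fields[1]), fields[2],
                                 fields[3].lower(), fields[4].lower(),
                                 fields[5]))
    return rows


SAMPLE = """<H3C>display npv login
Server External
Interface VSAN FCID Node WWN Port WWN Interface
Fc1/0/12 1 0x020101 20:00:f4:e9:D4:53:f1:c5 21:00:f4:e9:D4:53:f1:c5 Fc1/1/1
"""

for login in parse_npv_login(SAMPLE):
    print(login.server_interface, login.port_wwn, login.external_interface)
```

The WWNs are lowercased so that they compare equal regardless of how a device or storage array prints them.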

Configuring 3Par storage

See "Configuring 3Par storage" for the process of configuring 3Par storage.

Configuring the OS

IMPORTANT:

· The following information provides the procedure for configuring multipath under Red Hat 7.5. Multipath provides load balancing and reliability for the traffic between hosts and storage devices. For the procedure for configuring multipath and verifying the configuration under Windows, see "Configuring multipath and adding network disks on Windows Server."

· Before configuration, ensure that the multipath tool is installed on the OS. Most Linux systems include it as a built-in tool.

Prerequisites

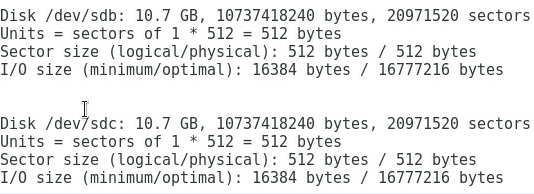

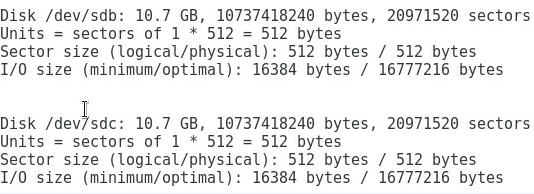

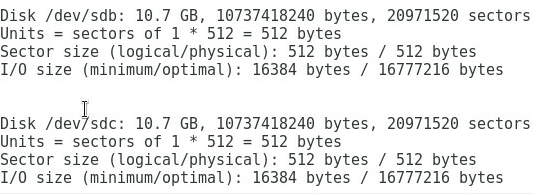

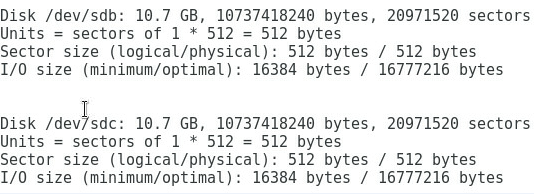

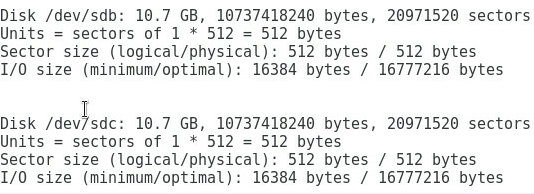

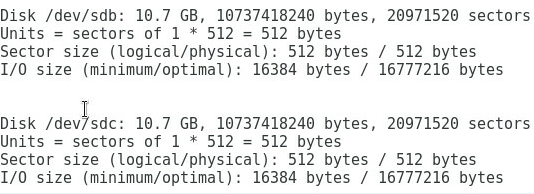

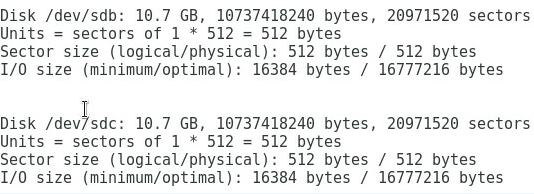

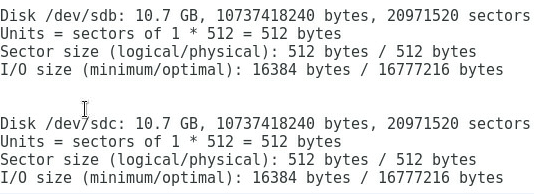

Execute the fdisk -l command under the OS to view the two virtual volumes identified by the host, as shown in Figure 2.

Figure 2 Identifying the virtual volumes

NOTE: If no disk is found, execute the echo 1 > /sys/class/fc_host/hostnum/issue_lip command to manually refresh fc_host, where hostnum is the name of the host directory under the /sys/class/fc_host directory, such as host1.
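If the server has several FC hosts, the refresh described in the note can be applied to all of them in one pass. The following Python sketch is illustrative: the function name `issue_lip_all` is hypothetical, it performs the same write as the echo command for every host directory it finds, and it must run as root on the real /sys/class/fc_host tree.

```python
from pathlib import Path


def issue_lip_all(fc_host_root="/sys/class/fc_host"):
    """Write "1" to issue_lip for every FC host; return the host names touched."""
    touched = []
    for host in sorted(Path(fc_host_root).glob("host*")):
        lip = host / "issue_lip"
        if lip.exists():
            # Same effect as: echo 1 > /sys/class/fc_host/<host>/issue_lip
            lip.write_text("1")
            touched.append(host.name)
    return touched


if __name__ == "__main__":
    print(issue_lip_all())
```

The root directory is a parameter so that the function can be tried against a scratch directory before it is pointed at sysfs.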

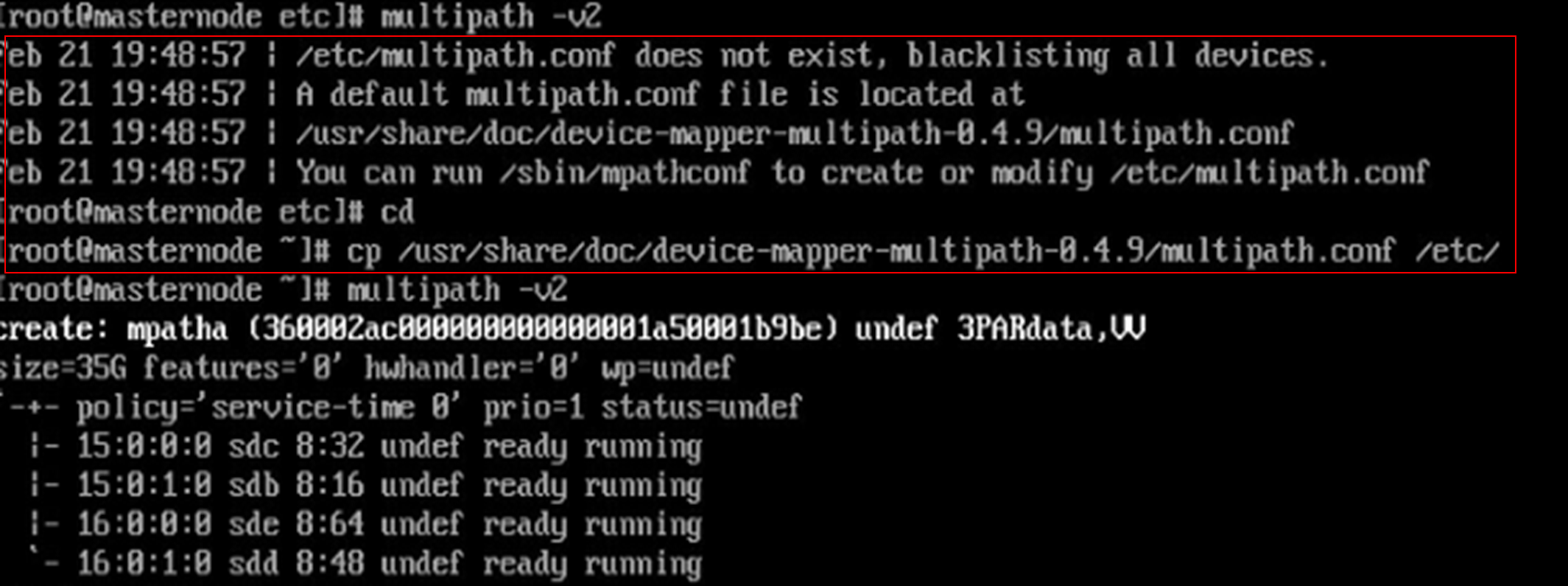

Configuring multipath

1. Execute the following commands in turn to load the multipath module, load the round-robin path selector module, start the multipath service, and create the multipath devices.

# modprobe dm-multipath

# modprobe dm-round-robin

# systemctl start multipathd.service

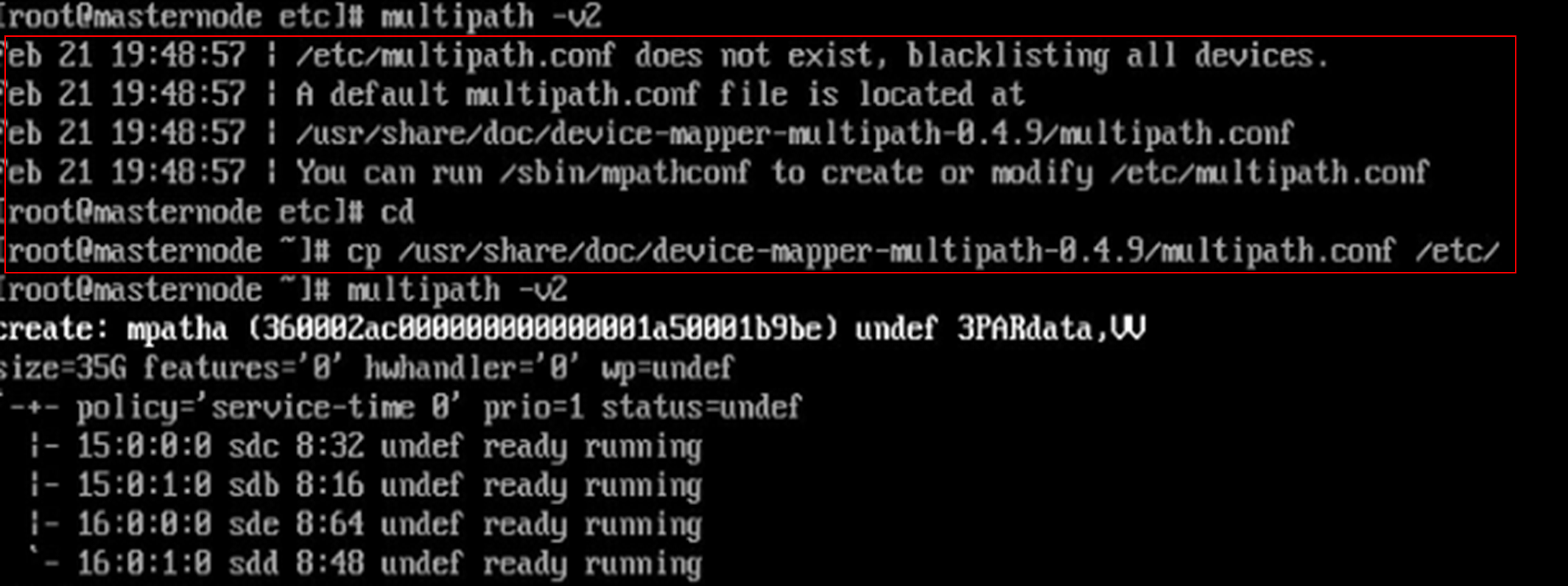

# multipath -v2

|

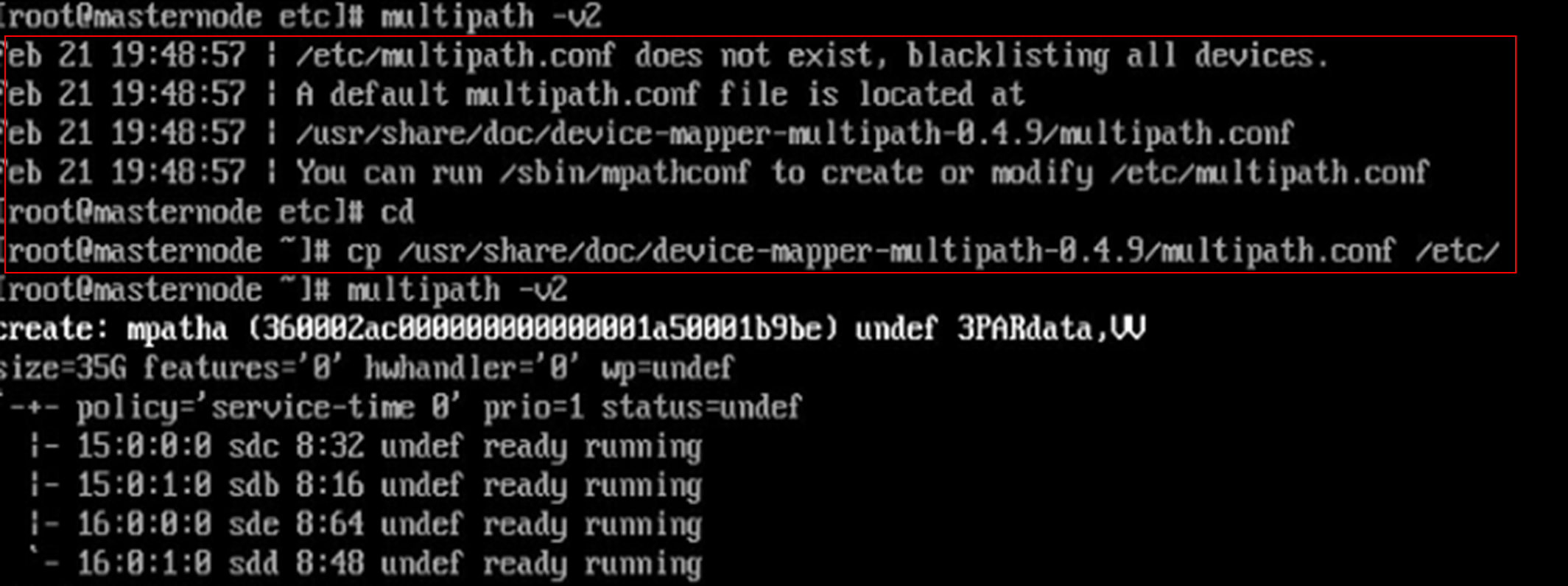

|

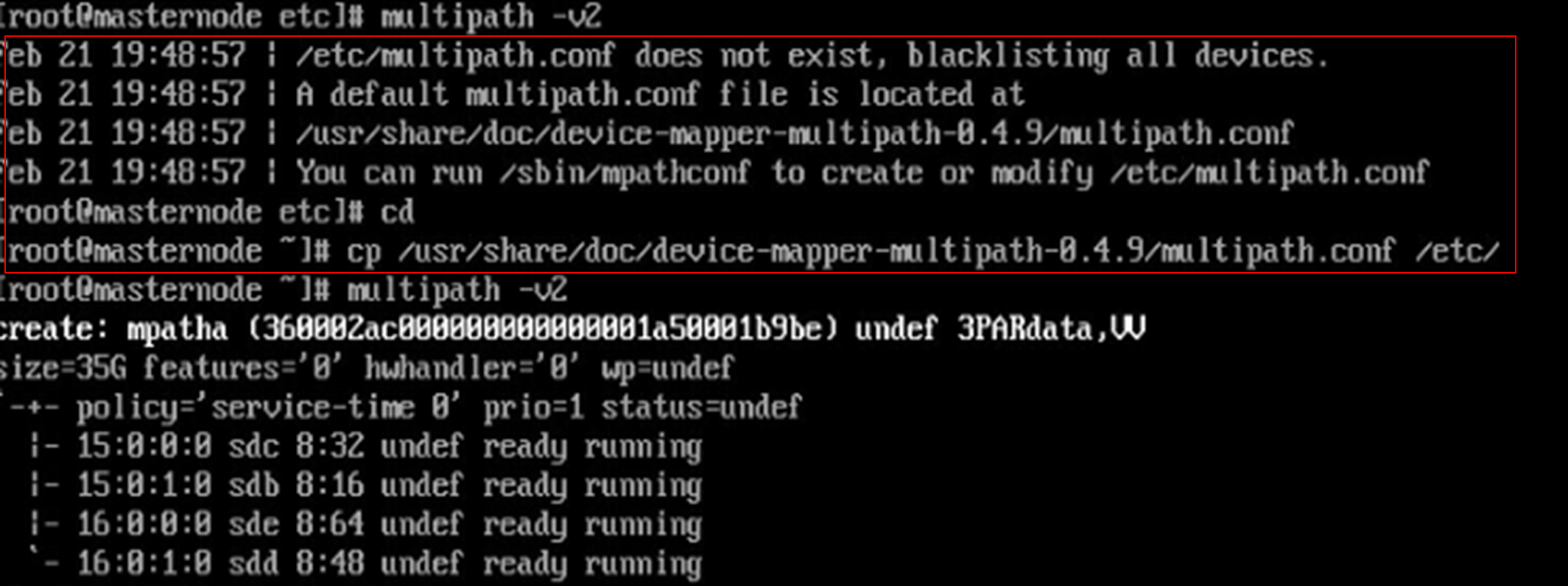

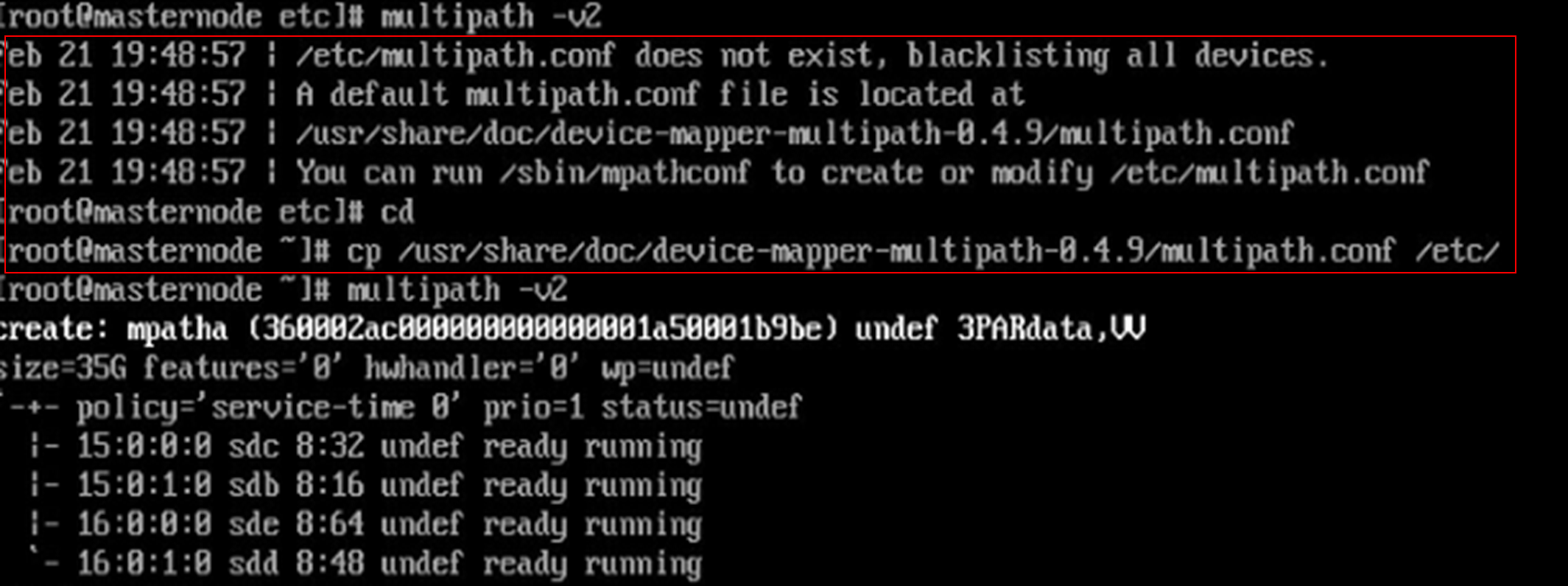

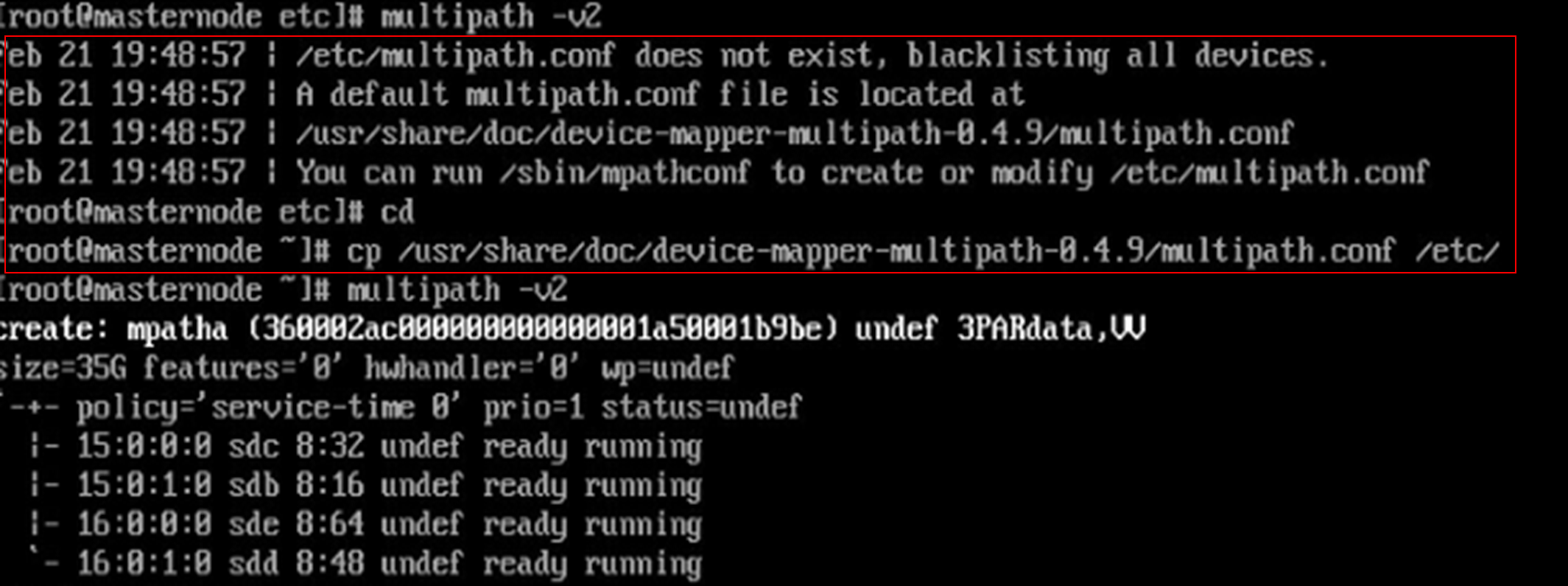

NOTE: If it is prompted that the multipath.conf file does not exist under the Red Hat operating system, you can copy the file from another location and deliver it again, as shown in Figure 3. |

Figure 3 Handling the case when the multipath.conf file does not exist

2. Execute the following command to restart the multipath service.

# systemctl restart multipathd.service

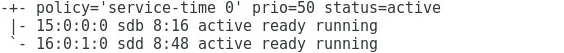

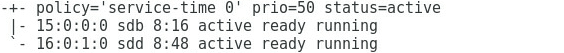

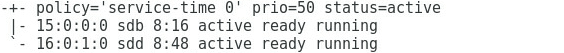

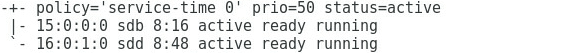

3. Run the following command to view the multipath link status, and you can see that the two ports are in the active state, as shown in Figure 4.

# multipath -ll

Figure 4 Viewing the multipath link status
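The three steps above run six commands in a fixed order, which makes them easy to repeat on several blade servers. The following Python sketch is a minimal example, not a supported tool: the names `MULTIPATH_STEPS` and `run_multipath_steps` are hypothetical, the commands are exactly those listed in the steps, and the default dry-run mode only returns the command lines without executing anything.

```python
import subprocess

# The commands from steps 1 to 3, in order.
MULTIPATH_STEPS = [
    ["modprobe", "dm-multipath"],
    ["modprobe", "dm-round-robin"],
    ["systemctl", "start", "multipathd.service"],
    ["multipath", "-v2"],
    ["systemctl", "restart", "multipathd.service"],
    ["multipath", "-ll"],
]


def run_multipath_steps(dry_run=True):
    """Run (or, in dry-run mode, only list) the multipath setup commands."""
    executed = []
    for cmd in MULTIPATH_STEPS:
        if not dry_run:
            # check=True stops at the first failing command.
            subprocess.run(cmd, check=True)
        executed.append(" ".join(cmd))
    return executed


if __name__ == "__main__":
    for line in run_multipath_steps(dry_run=True):
        print(line)
```

Call `run_multipath_steps(dry_run=False)` as root to execute the commands. If multipath.conf is missing, the sequence stops at the failing command so that the file can be restored first.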

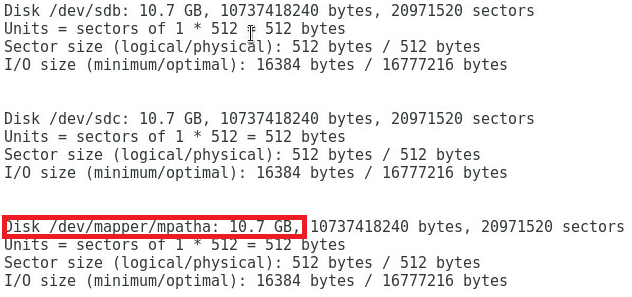

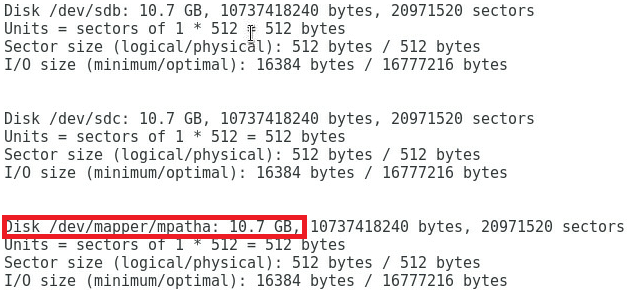

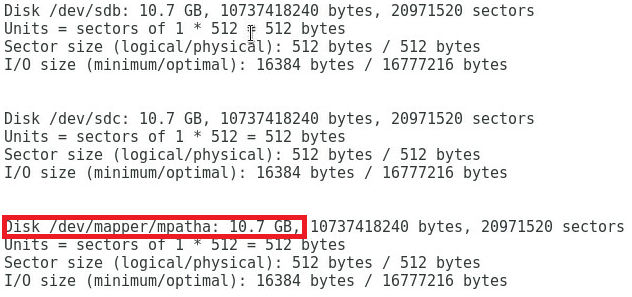

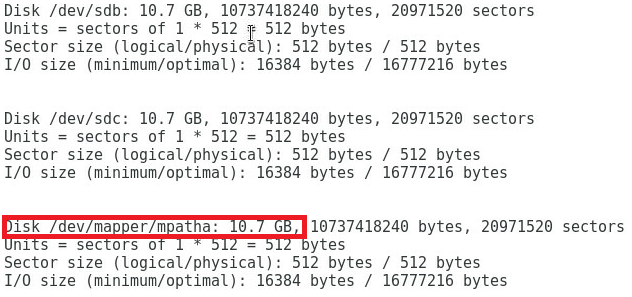

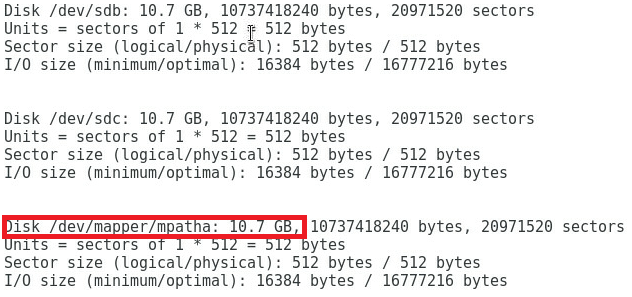

Verifying the configuration

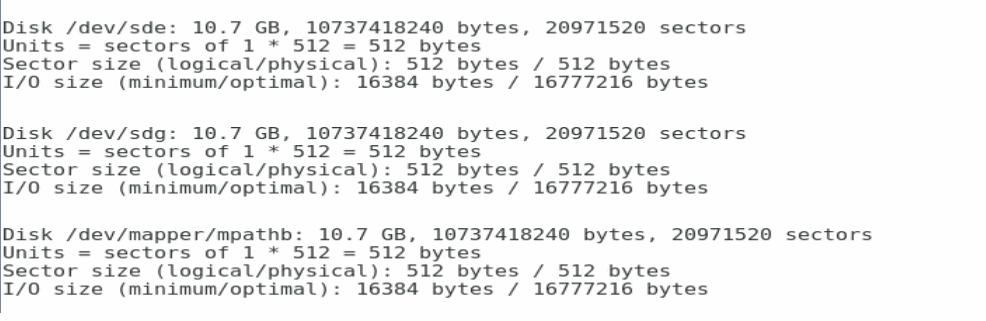

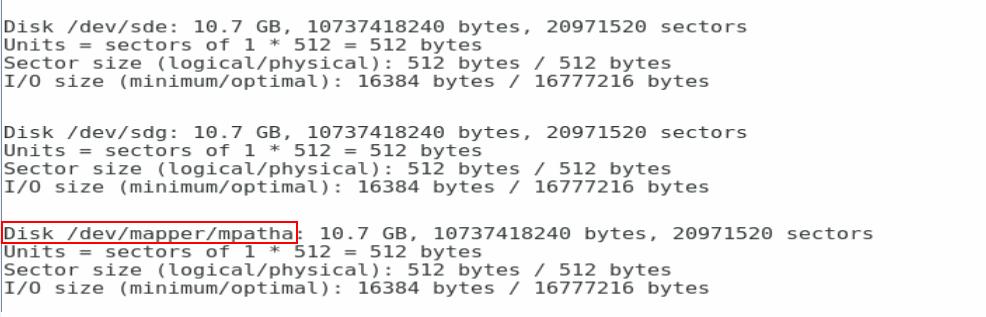

After configuring the multipath, execute the fdisk -l command again. You can see a new mpatha volume, indicating that the system can access virtual volumes through multiple paths, as shown in Figure 5.

Figure 5 Viewing the mpatha volume

Example: Configuring FC (uplinking multiple ports of the FC switch module to FC switch)

Network requirement

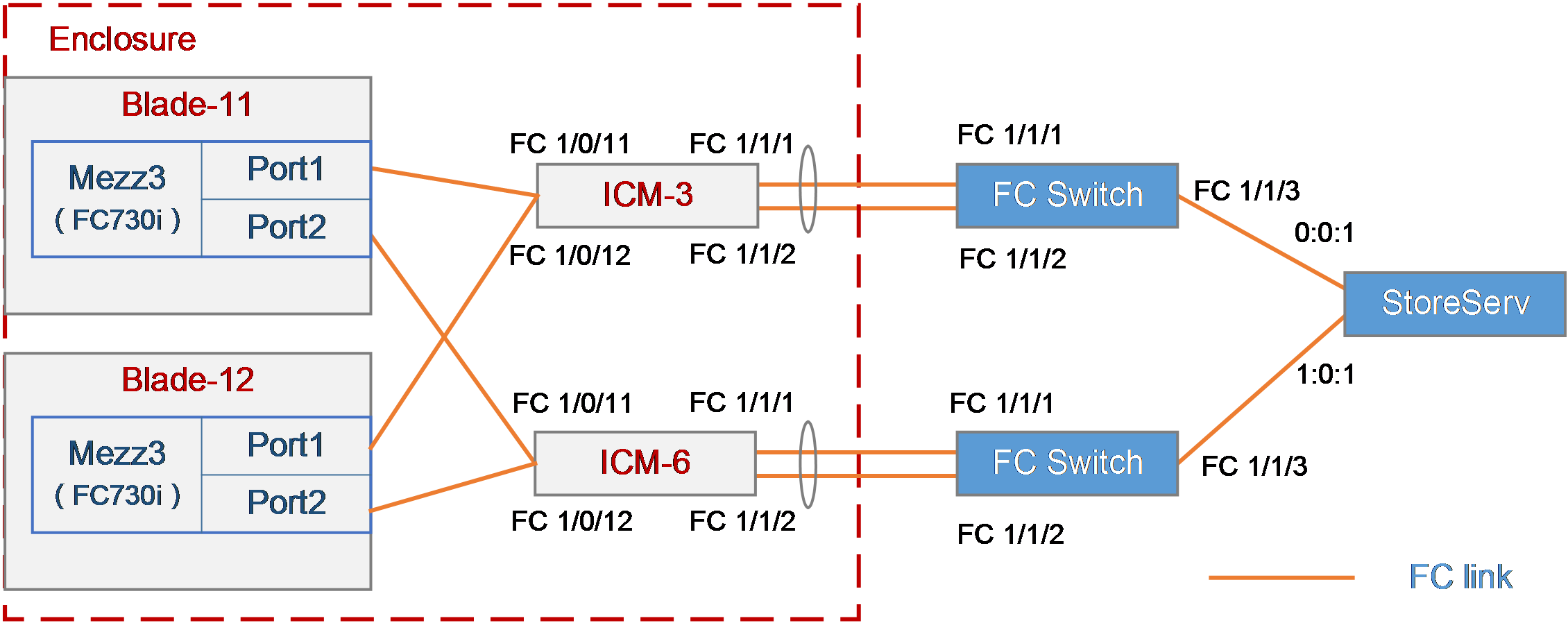

As shown in Figure 6, two blade servers and two FC switch modules are installed in the H3C B16000 blade server chassis.

· The two blade servers are installed in slot 11 and slot 12, respectively.

· The two FC switch modules are installed in slot 3 and slot 6, respectively.

· An FC mezzanine card is installed in the mezzanine card slot 3 of the blade server.

· The FC 1/1/1 and FC 1/1/2 ports of the two FC switch modules are directly connected to Port 0 and Port 1 of the two FC switches.

· Port 2 of each of the two FC switches is directly connected to the 0:0:1 or 1:0:1 port of the FC storage device.

In this example, the following devices and modules are used: blade server (H3C UniServer B5700 G3), mezzanine card (NIC-FC730i-Mb-2*32G, "FC730i"), FC switch module (BX608FE), FC switch (Brocade 6510), and FC storage device (H3C CF8840 from the HPE 3Par StoreServ family).

The following requirements are expected to be met:

· Storage volumes on the 3Par storage are mounted to the blade server to realize remote storage.

· Traffic is load balanced across the multiple uplink ports of the FC switch modules.

· The network is reliable, so the service is not affected when any FC switch or FC switch module fails.

Figure 6 Network diagram of uplinking multiple ports of FC switch modules in NPV mode to SAN

Key steps

· To mount 3Par storage volumes to the blade server, you need to build an FC link between the blade server and the 3Par storage to enable the transmission of FC traffic, which requires the following settings:

¡ Configure FC-related settings on the blade server and mezzanine card.

¡ Configure the FC function on the FC switch module.

¡ Configure the FC function on the FC switch to ensure FC link connectivity.

¡ Create a storage volume on the 3Par so that the blade server's OS can automatically recognize the storage volume.

· To balance the traffic load across multiple ports of the FC switch modules, you need to configure the FC switch modules to operate in NPV mode, which balances the load across the multiple FC uplinks.

· As a best practice, connect each interconnect module to two FC switches in the network for link redundancy, thus avoiding network disconnection caused by FC switch failure.

· To ensure service continuity when any interconnect module fails, you need to configure the multipath function under the OS of the blade server to improve network reliability.

Software versions used

This example was configured and verified on the SWITCH_SYS-1.02.04 version of the interconnect module.

Configuration precautions

· Before adding a storage volume, ensure that the blade server has installed the OS and the appropriate network adapter driver, so that the OS can identify the FC730i network adapter correctly. For details about installing the OS, see H3C Servers Operating System Installation Guide. For details about installing the network adapter driver, see FC730i Mezzanine Card Module User Guide.

· After mounting the storage volumes and replacing existing storage volumes on the 3Par with new ones, reboot the OS of the blade server to identify the new volumes.

Configuration procedure

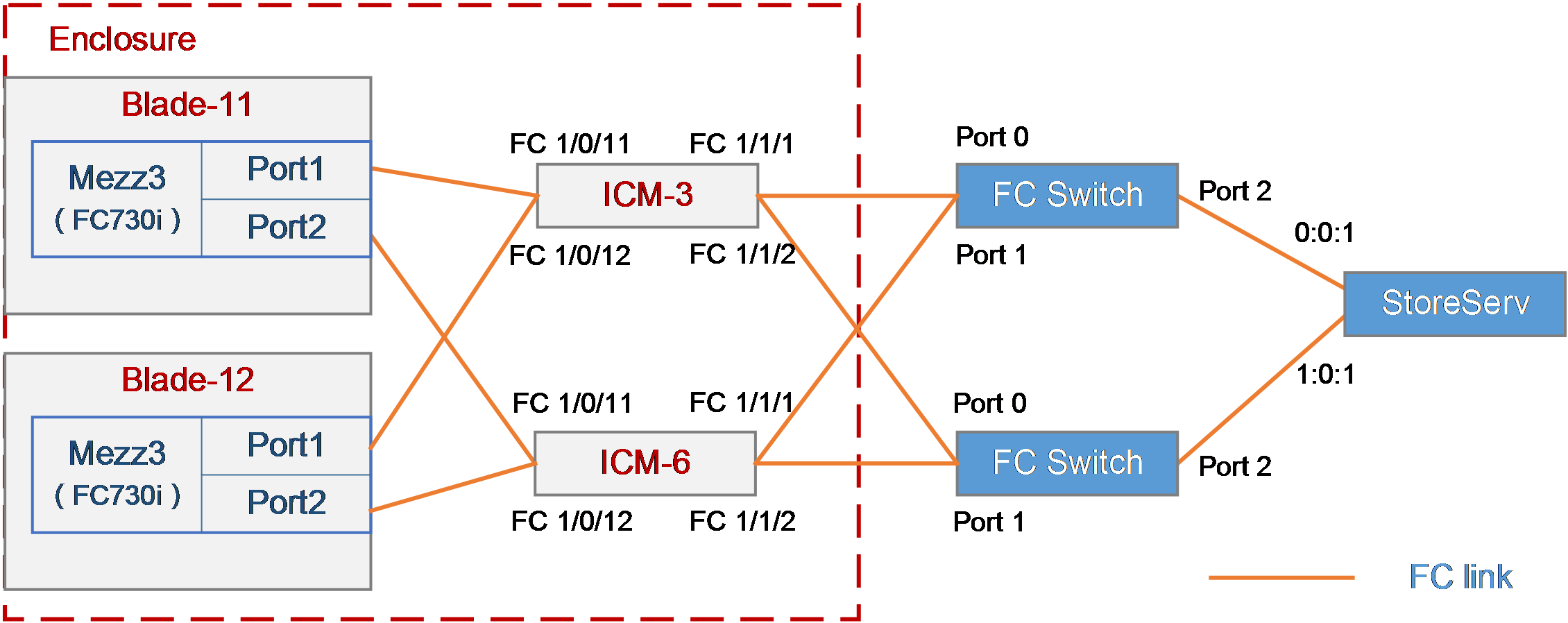

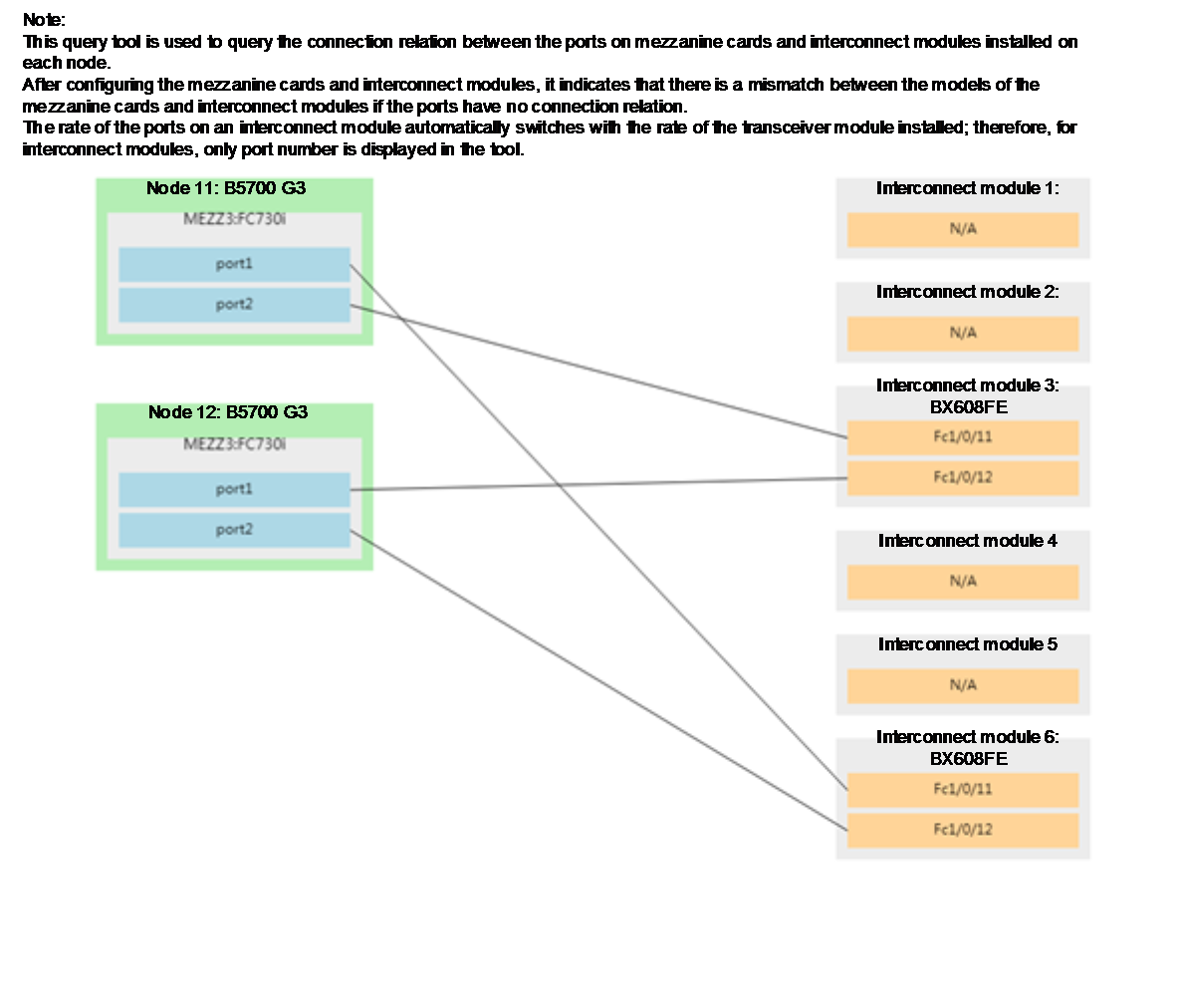

Querying the port connection relation between mezzanine cards and interconnect modules

Please use the H3C networking query tool on the official website to view the port connection relation between mezzanine cards and interconnect modules.

As shown in Figure 7, according to the query result, the mezzanine card of the blade server 11 is connected to FC 1/0/11 ports of interconnect modules 3 and 6, and the mezzanine card of blade server 12 is connected to FC 1/0/12 ports of interconnect modules 3 and 6.

Figure 7 Port connection relation between mezzanine cards and interconnect modules

Configuring the mezzanine card

In the FC networking, the FC mezzanine card can be used correctly after being installed in place. There are no other special requirements in this example, so you do not need to configure the mezzanine card.

Configuring the interconnect module

Configuring the interconnect module 3

IMPORTANT: The internal port of the BX608FE interconnect module is in the shutdown state by default. Therefore, when configuring the module for the first time or restoring the default configuration file, execute the undo shutdown command to enable the corresponding port before configuring. Determine the internal port to which the mezzanine card port is connected according to "Querying port relations."

# Configure the FCoE mode of the FC switch module to NPV.

<H3C> system-view

[H3C] fcoe-mode npv

# Create the VSAN. In this example, create VSAN 1.

[H3C] vsan 1

[H3C-vsan1] quit

# Create the VLAN to map VSAN. In this example, create VLAN 10, enable the FCoE function on VLAN 10, and map the VLAN to VSAN 1.

[H3C] vlan 10

[H3C-vlan10] fcoe enable vsan 1

[H3C-vlan10] quit

# Configure the mode of FC 1/1/1 and FC 1/1/2 ports connected to Brocade switches to NP, and add them to VSAN 1 using access.

[H3C] interface fc1/1/1

[H3C-Fc1/1/1] fc mode np

[H3C-Fc1/1/1] port access vsan 1

[H3C-Fc1/1/1] quit

[H3C] interface fc1/1/2

[H3C-Fc1/1/2] fc mode np

[H3C-Fc1/1/2] port access vsan 1

[H3C-Fc1/1/2] quit

# Configure the mode of FC 1/0/11 and FC 1/0/12 ports connected to mezzanine cards to F, and add them to VSAN 1 using access.

[H3C] interface fc1/0/11

[H3C-Fc1/0/11] fc mode f

[H3C-Fc1/0/11] port access vsan 1

[H3C-Fc1/0/11] quit

[H3C] interface fc1/0/12

[H3C-Fc1/0/12] fc mode f

[H3C-Fc1/0/12] port access vsan 1

[H3C-Fc1/0/12] quit

# Enter the VSAN view and initiate a manual load balancing.

[H3C] vsan 1

[H3C-vsan1] npv load-balance disruptive

The command may cause traffic interruption. Continue? [Y/N]:y

[H3C-vsan1] quit

CAUTION: To restore load balancing after an uplink port goes down and comes back up, you need to initiate a manual load balancing. This operation reinitializes the links on some downlink ports, which interrupts traffic and affects services. Use it with caution.

# Save the configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

Configuring the interconnect module 6

IMPORTANT: The internal port of the BX608FE interconnect module is in the shutdown state by default. Therefore, when configuring the module for the first time or restoring the default configuration file, execute the undo shutdown command to enable the corresponding port before configuring. Determine the internal port to which the mezzanine card port is connected according to "Querying port relations."

# Configure the FCoE mode of the FC switch module to NPV.

<H3C> system-view

[H3C] fcoe-mode npv

# Create the VSAN. In this example, create VSAN 2.

[H3C] vsan 2

[H3C-vsan2] quit

# Create the VLAN to map VSAN. In this example, create VLAN 20, enable the FCoE function on VLAN 20, and map the VLAN to VSAN 2.

[H3C] vlan 20

[H3C-vlan20] fcoe enable vsan 2

[H3C-vlan20] quit

# Configure the mode of FC 1/1/1 and FC 1/1/2 ports connected to Brocade switches to NP, and add them to VSAN 2 using access.

[H3C] interface fc1/1/1

[H3C-Fc1/1/1] fc mode np

[H3C-Fc1/1/1] port access vsan 2

[H3C-Fc1/1/1] quit

[H3C] interface fc1/1/2

[H3C-Fc1/1/2] fc mode np

[H3C-Fc1/1/2] port access vsan 2

[H3C-Fc1/1/2] quit

# Configure the mode of FC 1/0/11 and FC 1/0/12 ports connected to mezzanine cards to F, and add them to VSAN 2 using access.

[H3C] interface fc1/0/11

[H3C-Fc1/0/11] fc mode f

[H3C-Fc1/0/11] port access vsan 2

[H3C-Fc1/0/11] quit

[H3C] interface fc1/0/12

[H3C-Fc1/0/12] fc mode f

[H3C-Fc1/0/12] port access vsan 2

[H3C-Fc1/0/12] quit

# Enter the VSAN view and initiate a manual load balancing.

[H3C] vsan 2

[H3C-vsan2] npv load-balance disruptive

The command may cause traffic interruption. Continue? [Y/N]:y

[H3C-vsan2] quit

# Save the configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

Querying the WWN number of the mezzanine card

Execute the display npv login command on the FC switch module to view the registration information about the node device (mezzanine card) connected to the downlink port of the FC switch module and the mapped uplink port. FC switch module 3 is used as an example:

<H3C>display npv login

Server External

Interface VSAN FCID Node WWN Port WWN Interface

Fc1/0/12 1 0x020101 20:00:f4:e9:D4:53:f1:c5 21:00:f4:e9:D4:53:f1:c4 Fc1/1/1

Fc1/0/11 1 0xc60004 20:00:00:10:9b:90:b2:99 10:00:00:10:9b:90:b2:99 Fc1/1/2

Configuring the Brocade switch

In this example, make sure that the two Brocade switches have different domain IDs, and Port 0, Port 1 and Port 2 of each switch reside in the same FC zone. For specific configuration methods, see relevant configuration manuals of Brocade switches.

Configuring 3Par storage

See "Configuring 3Par storage" for the process of configuring 3Par storage.

Configuring the OS

IMPORTANT:

· The following information provides the procedure for configuring multipath under Red Hat 7.5. Multipath provides load balancing and reliability for the traffic between hosts and storage devices. For the procedure for configuring multipath and verifying the configuration under Windows, see "Configuring multipath and adding network disks on Windows Server."

· Before configuration, ensure that the multipath tool is installed on the OS. Most Linux systems include it as a built-in tool.

Preparation

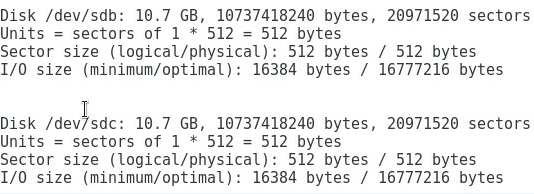

Execute the fdisk -l command under the OS to view the two virtual volumes identified by the host, as shown in Figure 8.

Figure 8 Identifying the virtual volumes

NOTE: If no disk is found, execute the echo 1 > /sys/class/fc_host/hostnum/issue_lip command to manually refresh fc_host, where hostnum is the name of the host directory under the /sys/class/fc_host directory, such as host1.

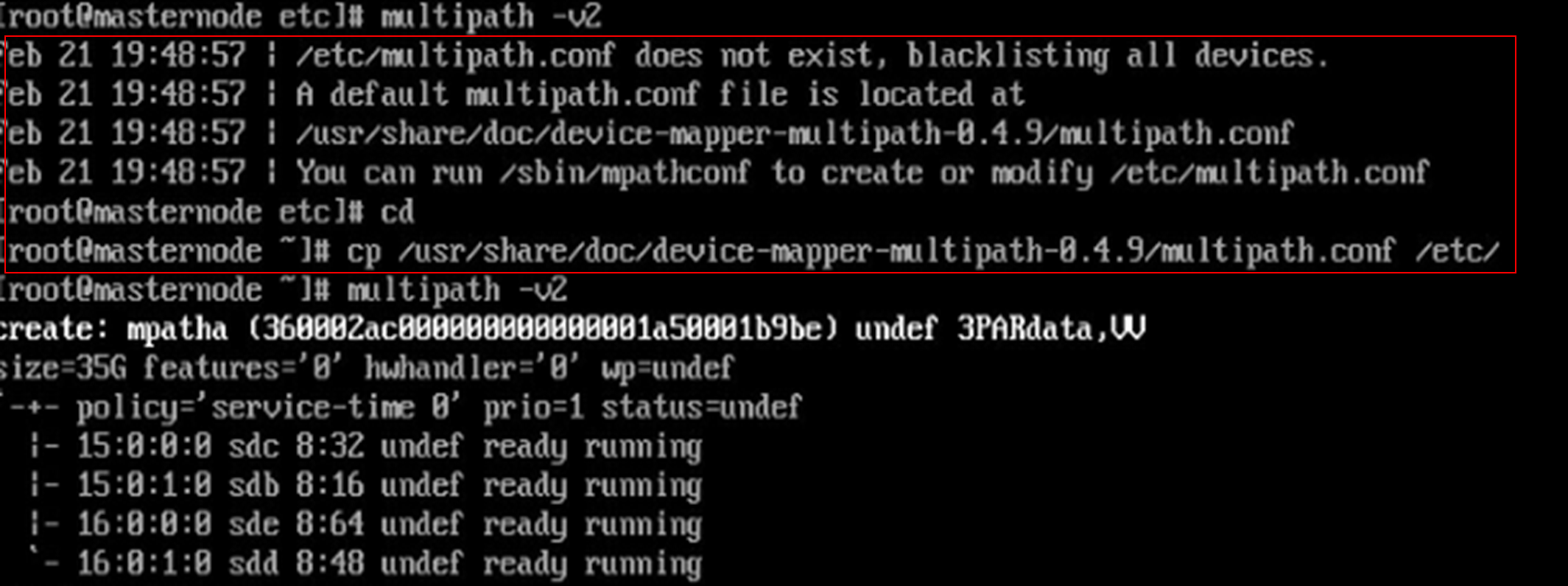

Configuring multipath

1. Execute the following commands in turn to load the multipath module, load the round-robin path selector module, start the multipath service, and create the multipath devices.

# modprobe dm-multipath

# modprobe dm-round-robin

# systemctl start multipathd.service

# multipath -v2

NOTE: If Red Hat reports that the multipath.conf file does not exist, copy the file from another location and execute the command again, as shown in Figure 9.

Figure 9 Handling the case when the multipath.conf file does not exist

2. Execute the following command to restart the multipath service.

# systemctl restart multipathd.service

3. Run the following command to view the multipath link status, and you can see that the two ports are in the active state, as shown in Figure 10.

# multipath -ll

Figure 10 Viewing the multipath link status

Verifying the configuration

Verifying load balancing of ports on FC switch modules

Before and after load balancing, the mapping relation between the downlink ports (connected to the ports of mezzanine cards on blade servers) and the uplink ports (connected to the ports of FC switches) on the FC switch modules changes. Here we use the FC switch module 3 as an example.

1. Before load balancing, execute the display npv login command on the FC switch module. You can see the mapping relation between the uplink and downlink ports of the FC switch module, as follows:

<H3C>display npv login

Server External

Interface VSAN FCID Node WWN Port WWN Interface

Fc1/0/12 1 0x020101 20:00:f4:e9:D4:53:f1:c5 21:00:f4:e9:D4:53:f1:c4 Fc1/1/1

Fc1/0/11 1 0xc60004 20:00:00:10:9b:90:b2:99 10:00:00:10:9b:90:b2:99 Fc1/1/1

2. After load balancing, execute the display npv login command on the FC switch module again. You can see the changed mapping relation between the uplink and downlink ports of the FC switch module, as follows:

<H3C>display npv login

Server External

Interface VSAN FCID Node WWN Port WWN Interface

Fc1/0/12 1 0x020101 20:00:f4:e9:D4:53:f1:c5 21:00:f4:e9:D4:53:f1:c4 Fc1/1/1

Fc1/0/11 1 0xc60004 20:00:00:10:9b:90:b2:99 10:00:00:10:9b:90:b2:99 Fc1/1/2
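The effect of load balancing can also be checked by comparing the downlink-to-uplink mapping from the two outputs. The following Python sketch is illustrative: the function names `uplink_load` and `moved_downlinks` are hypothetical, and the two dictionaries are transcribed by hand from the before and after outputs above.

```python
from collections import Counter


def uplink_load(mapping):
    """Count how many downlink ports are mapped to each uplink port."""
    return Counter(mapping.values())


def moved_downlinks(before, after):
    """Return the downlink ports whose uplink changed, as {port: (old, new)}."""
    return {port: (before[port], after[port])
            for port in before
            if port in after and before[port] != after[port]}


# Downlink port -> uplink port, from the outputs in steps 1 and 2.
before = {"Fc1/0/12": "Fc1/1/1", "Fc1/0/11": "Fc1/1/1"}
after = {"Fc1/0/12": "Fc1/1/1", "Fc1/0/11": "Fc1/1/2"}

print(uplink_load(before))
print(uplink_load(after))
print(moved_downlinks(before, after))
```

Before load balancing, both downlink ports share Fc1/1/1. Afterward, Fc1/0/11 has moved to Fc1/1/2, so each uplink carries one login.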

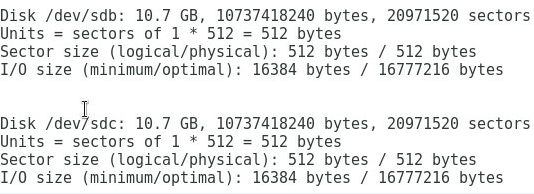

Identifying storage volumes

As shown in Figure 11, execute the fdisk -l command under the OS to view the two virtual volumes identified by the host.

Figure 11 Identifying the virtual volumes

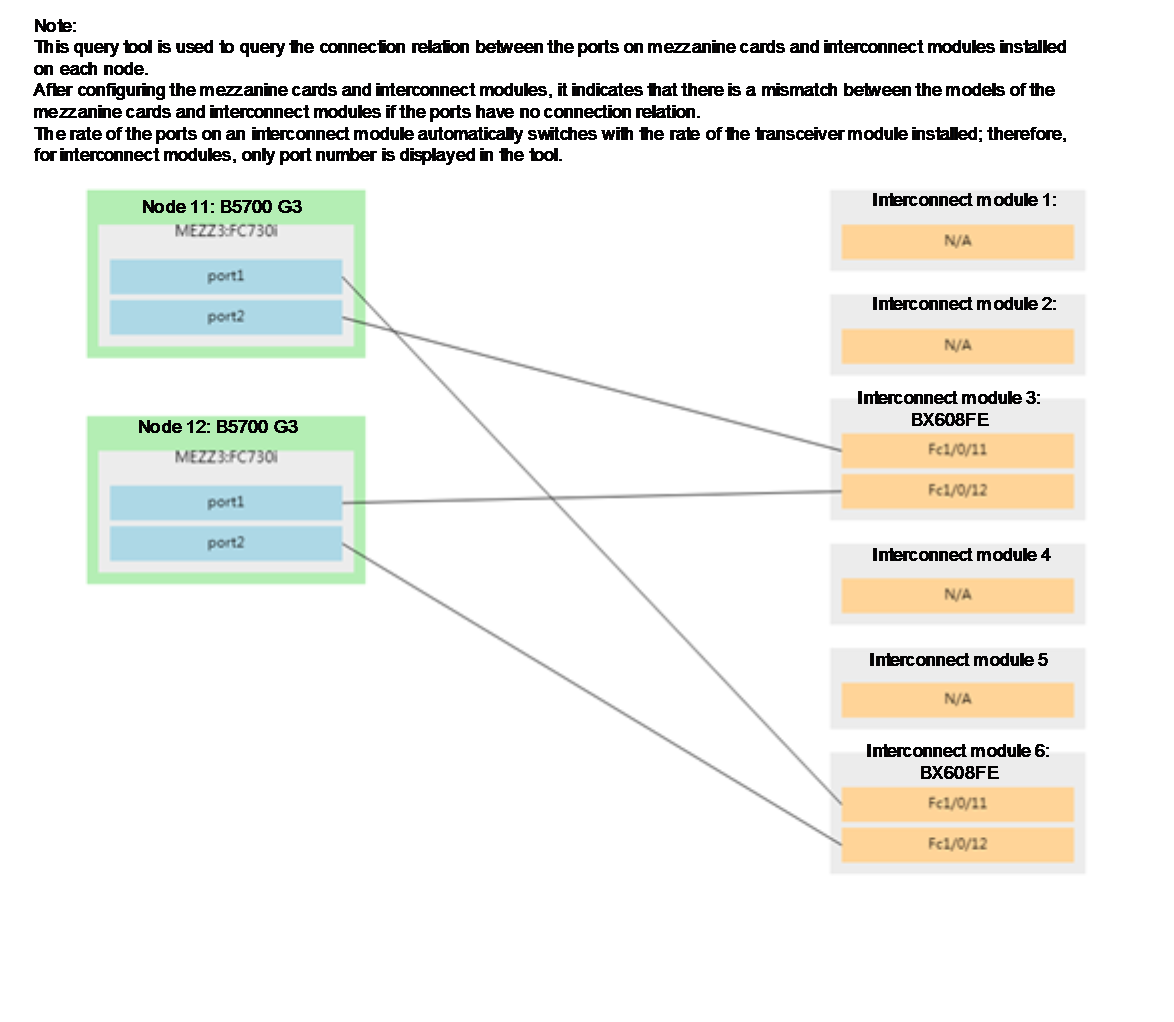

Accessing virtual volumes by the system through multiple paths

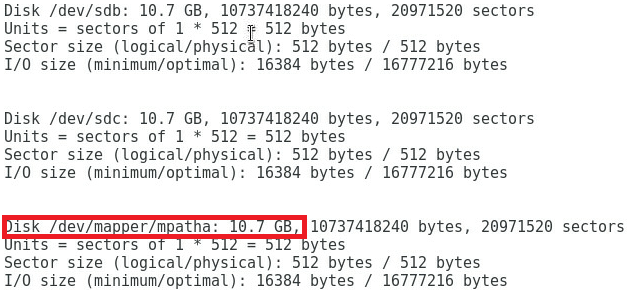

After multiple paths are configured, execute the fdisk -l command again to view the new mpatha volume, indicating that the system can access virtual volumes through multiple paths, as shown in Figure 12.

Figure 12 Viewing the mpatha volume

Example: Configuring FC (uplinking the aggregation interfaces of FC switch modules to FC switches)

Network requirement

As shown in Figure 13, two blade servers and two FC switch modules are installed in the H3C B16000 blade server chassis.

· The two blade servers are installed in slot 11 and slot 12, respectively.

· The two FC switch modules are installed in slot 3 and slot 6, respectively.

· An FC mezzanine card is installed in the mezzanine card slot 3 of the blade server.

· The FC 1/1/1 and FC 1/1/2 ports of the two FC switch modules are directly connected to the FC 1/1/1 and FC 1/1/2 ports of the two FC switches.

· The FC 1/1/3 ports of the two FC switches are directly connected to the 0:0:1 and 1:0:1 ports of the FC storage device.

In this example, the following devices and modules are used: blade server (H3C UniServer B5700 G3), mezzanine card (NIC-FC730i-Mb-2*32G, "FC730i"), FC switch module (BX608FE), FC switch (H3C S6800), and FC storage device (H3C CF8840 from the HPE 3Par StoreServ family).

The following requirements are expected to be met:

· Storage volumes on the 3Par storage are mounted to the blade server to realize remote storage.

· The single-port traffic bottleneck of the FC switch modules is removed.

· The network is reliable, so the service is not affected when any FC switch or FC switch module fails.

Figure 13 Network diagram of uplinking multiple ports of FC switch modules in FCF mode to SAN

Analysis

· To mount 3Par storage volumes to the blade server, you need to build an FC link between the blade server and the 3Par storage to enable the transmission of FC traffic, which requires the following settings:

¡ Configure FC-related settings on the blade server and mezzanine card.

¡ Configure the FC function on the FC switch module.

¡ Configure the FC function on the FC switch to ensure FC link connectivity.

¡ Create a storage volume on the 3Par so that the blade server's OS can automatically recognize the storage volume.

· To break through the single-port traffic bottleneck of FC switch modules, you need to aggregate multiple physical ports of each FC switch module into an FC aggregation interface, which provides bandwidth aggregation and load sharing. In this example, the ports are aggregated in FCF mode, so you need to configure the FC switch modules to operate in FCF mode.

· As a best practice, connect each interconnect module to a different FC switch for link redundancy, thus avoiding network disconnection caused by FC switch failure.

· To ensure service continuity when any FC interconnect module fails, you need to configure the multipath function under the OS of the blade server to improve network reliability.

Software versions used

This example was configured and verified on the SWITCH_SYS-1.02.04 version of the FC interconnect module.

Configuration precautions

· Before adding a storage volume, ensure that the blade server has installed the OS and the appropriate network adapter driver, so that the OS can identify the FC730i network adapter correctly. For details about installing the OS, see H3C Servers Operating System Installation Guide. For details about installing the network adapter driver, see FC730i Mezzanine Card Module User Guide.

· After mounting the storage volumes and replacing existing storage volumes on the 3Par with new ones, reboot the OS of the blade server to identify the new volumes.

Configuration procedure

Query the port connection between mezzanine cards and interconnect modules

Please use the H3C networking query tool on the official website to view the port connection relation between mezzanine cards and interconnect modules.

As shown in Figure 14, according to the query result, the mezzanine card of the blade server 11 is connected to FC 1/0/11 ports of interconnect modules 3 and 6, and the mezzanine card of blade server 12 is connected to FC 1/0/12 ports of interconnect modules 3 and 6.

Figure 14 Port connection relation between mezzanine cards and interconnect modules

Configuring the mezzanine card

In the FC networking, the FC mezzanine card can be used correctly after being installed in place. There are no other special requirements in this example, so you do not need to configure the mezzanine card.

Configuring the interconnect module

Configuring interconnect module 3

IMPORTANT: The internal port of the BX608FE interconnect module is in the shutdown state by default. Therefore, when configuring the module for the first time or restoring the default configuration file, execute the undo shutdown command to enable the corresponding port before configuring. Determine the internal port to which the mezzanine card port is connected according to "Querying port relations."

# Configure the mode of the FC switch module to FCF.

<H3C> system-view

[H3C] fcoe-mode fcf

# Create the VSAN. In this example, create VSAN 1 and allow members in the default zone to access each other.

[H3C] vsan 1

[H3C-vsan1] zone default-zone permit

[H3C-vsan1] quit

# Create the VLAN to map VSAN. In this example, create VLAN 10, enable the FCoE function on VLAN 10, and map the VLAN to VSAN 1.

[H3C] vlan 10

[H3C-vlan10] fcoe enable vsan 1

[H3C-vlan10] quit

# Add FC 1/0/11 and FC 1/0/12 ports to VSAN 1 and set them to operate in the F mode.

[H3C] interface fc1/0/11

[H3C-Fc1/0/11] fc mode f

[H3C-Fc1/0/11] port access vsan 1

[H3C-Fc1/0/11] quit

[H3C] interface fc1/0/12

[H3C-Fc1/0/12] fc mode f

[H3C-Fc1/0/12] port access vsan 1

[H3C-Fc1/0/12] quit

# Create FC aggregation interface 1 and generate FC aggregation group 1.

[H3C] interface san-aggregation 1

# Configure FC aggregation interface 1 to operate in the E mode.

[H3C-SAN-Aggregation1] fc mode e

# Add FC aggregation interface 1 to VSAN 1 through the access mode, set the trunk mode of FC aggregation interface 1 to On, and add the port to the VSAN 1 through the trunk mode, that is, permit the VSAN 1 packets to pass.

[H3C-SAN-Aggregation1] port access vsan 1

[H3C-SAN-Aggregation1] port trunk mode on

[H3C-SAN-Aggregation1] port trunk vsan 1

[H3C-SAN-Aggregation1] quit

# Add the FC 1/1/1 and FC 1/1/2 ports to FC aggregation group 1.

[H3C] interface fc 1/1/1

[H3C-Fc1/1/1] shutdown

[H3C-Fc1/1/1] san-aggregation group 1

The FC mode, trunk mode, trunk VSAN, and access VSAN settings of the FC interface will be lost. Continue? [Y/N]:y

[H3C-Fc1/1/1] undo shutdown

[H3C-Fc1/1/1] quit

[H3C] interface fc 1/1/2

[H3C-Fc1/1/2] shutdown

[H3C-Fc1/1/2] san-aggregation group 1

The FC mode, trunk mode, trunk VSAN, and access VSAN settings of the FC interface will be lost. Continue? [Y/N]:y

[H3C-Fc1/1/2] undo shutdown

[H3C-Fc1/1/2] quit

# Save the configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

Configuring interconnect module 6

IMPORTANT: Internal ports of the BX608FE interconnect module are shut down by default. When you configure the module for the first time or after you restore the default configuration file, execute the undo shutdown command to enable the relevant internal port before configuring it. To determine which internal port the mezzanine card port is connected to, see "Querying port relations."

# Configure the mode of the FC switch module to FCF.

<H3C> system-view

[H3C] fcoe-mode fcf

# Create the VSAN. In this example, create VSAN 2 and allow members in the default zone to access each other.

[H3C] vsan 2

[H3C-vsan2] zone default-zone permit

[H3C-vsan2] quit

# Create the VLAN to map VSAN. In this example, create VLAN 20, enable the FCoE function on VLAN 20, and map the VLAN to VSAN 2.

[H3C] vlan 20

[H3C-vlan20] fcoe enable vsan 2

[H3C-vlan20] quit

# Add FC 1/0/11 and FC 1/0/12 ports to VSAN 2 and set them to operate in the F mode.

[H3C] interface fc1/0/11

[H3C-Fc1/0/11] fc mode f

[H3C-Fc1/0/11] port access vsan 2

[H3C-Fc1/0/11] quit

[H3C] interface fc1/0/12

[H3C-Fc1/0/12] fc mode f

[H3C-Fc1/0/12] port access vsan 2

[H3C-Fc1/0/12] quit

# Create FC aggregation interface 1 and generate FC aggregation group 1.

[H3C] interface san-aggregation 1

# Configure FC aggregation interface 1 to operate in the E mode.

[H3C-SAN-Aggregation1] fc mode e

# Add FC aggregation interface 1 to VSAN 2 in access mode, set the trunk mode of FC aggregation interface 1 to On, and add the interface to VSAN 2 in trunk mode so that VSAN 2 packets are permitted to pass.

[H3C-SAN-Aggregation1] port access vsan 2

[H3C-SAN-Aggregation1] port trunk mode on

[H3C-SAN-Aggregation1] port trunk vsan 2

[H3C-SAN-Aggregation1] quit

# Add the FC 1/1/1 and FC 1/1/2 ports to FC aggregation group 1.

[H3C] interface fc 1/1/1

[H3C-Fc1/1/1] shutdown

[H3C-Fc1/1/1] san-aggregation group 1

The FC mode, trunk mode, trunk VSAN, and access VSAN settings of the FC interface will be lost. Continue? [Y/N]:y

[H3C-Fc1/1/1] undo shutdown

[H3C-Fc1/1/1] quit

[H3C] interface fc 1/1/2

[H3C-Fc1/1/2] shutdown

[H3C-Fc1/1/2] san-aggregation group 1

The FC mode, trunk mode, trunk VSAN, and access VSAN settings of the FC interface will be lost. Continue? [Y/N]:y

[H3C-Fc1/1/2] undo shutdown

[H3C-Fc1/1/2] quit

# Save the configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):
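The configurations of interconnect modules 3 and 6 follow the same command sequence with different VSAN and VLAN numbers. The following is a minimal sketch, not an H3C tool, that builds that sequence as a list of strings so the two variants can be compared side by side. The function name fc_module_commands and its parameters are hypothetical, and the sketch does not answer the interactive [Y/N] prompts that the device displays.

```python
def fc_module_commands(vsan, vlan, downlinks, uplinks, agg_id=1):
    """Return the Comware command lines used in this example for one FC switch module."""
    cmds = [
        "system-view",
        "fcoe-mode fcf",
        f"vsan {vsan}",
        "zone default-zone permit",
        "quit",
        f"vlan {vlan}",
        f"fcoe enable vsan {vsan}",
        "quit",
    ]
    # Downlink (internal) ports face the mezzanine cards and operate in F mode.
    for port in downlinks:
        cmds += [f"interface fc{port}", "fc mode f",
                 f"port access vsan {vsan}", "quit"]
    # The FC aggregation interface faces the uplink FC switch and operates in E mode.
    cmds += [
        f"interface san-aggregation {agg_id}",
        "fc mode e",
        f"port access vsan {vsan}",
        "port trunk mode on",
        f"port trunk vsan {vsan}",
        "quit",
    ]
    # Member ports are shut down while joining the group and then re-enabled.
    for port in uplinks:
        cmds += [f"interface fc {port}", "shutdown",
                 f"san-aggregation group {agg_id}", "undo shutdown", "quit"]
    cmds.append("save")
    return cmds


module3 = fc_module_commands(1, 10, ["1/0/11", "1/0/12"], ["1/1/1", "1/1/2"])
module6 = fc_module_commands(2, 20, ["1/0/11", "1/0/12"], ["1/1/1", "1/1/2"])
```

Printing "\n".join(module6) gives a checklist in which every VSAN reference is 2, which helps catch a port left in the wrong VSAN.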

Configuring an FC switch

Configuring uplink SAN switch A

# Configure the FC switch to operate in the FCF mode.

<H3C> system-view

[H3C] fcoe-mode fcf

# Create the VSAN. In this example, create VSAN 1 and allow members in the default zone to access each other.

[H3C] vsan 1

[H3C-vsan1] zone default-zone permit

[H3C-vsan1] quit

# Create the VLAN to map VSAN. In this example, create VLAN 10, enable the FCoE function on VLAN 10, and map the VLAN to VSAN 1.

[H3C] vlan 10

[H3C-vlan10] fcoe enable vsan 1

[H3C-vlan10] quit

# Create FC aggregation interface 1 and generate FC aggregation group 1.

[H3C] interface san-aggregation 1

# Configure FC aggregation interface 1 to operate in the E mode.

[H3C-SAN-Aggregation1] fc mode e

# Add FC aggregation interface 1 to VSAN 1 in access mode, set the trunk mode of FC aggregation interface 1 to On, and add the interface to VSAN 1 in trunk mode so that VSAN 1 packets are permitted to pass.

[H3C-SAN-Aggregation1] port access vsan 1

[H3C-SAN-Aggregation1] port trunk mode on

[H3C-SAN-Aggregation1] port trunk vsan 1

[H3C-SAN-Aggregation1] quit

# Add the FC 1/1/1 and FC 1/1/2 ports to FC aggregation group 1.

[H3C] interface fc 1/1/1

[H3C-Fc1/1/1] shutdown

[H3C-Fc1/1/1] san-aggregation group 1

The FC mode, trunk mode, trunk VSAN, and access VSAN settings of the FC interface will be lost. Continue? [Y/N]:y

[H3C-Fc1/1/1] undo shutdown

[H3C-Fc1/1/1] quit

[H3C] interface fc 1/1/2

[H3C-Fc1/1/2] shutdown

[H3C-Fc1/1/2] san-aggregation group 1

The FC mode, trunk mode, trunk VSAN, and access VSAN settings of the FC interface will be lost. Continue? [Y/N]:y

[H3C-Fc1/1/2] undo shutdown

[H3C-Fc1/1/2] quit

# Set the FC 1/1/3 port connected to the 3Par storage to operate in the F mode.

[H3C] interface fc1/1/3

[H3C-Fc1/1/3] fc mode f

[H3C-Fc1/1/3] quit

# Save the configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

Configuring uplink SAN switch B

# Configure the FC switch to operate in the FCF mode.

<H3C> system-view

[H3C] fcoe-mode fcf

# Create the VSAN. In this example, create VSAN 2 and allow members in the default zone to access each other.

[H3C] vsan 2

[H3C-vsan2] zone default-zone permit

[H3C-vsan2] quit

# Create the VLAN to map VSAN. In this example, create VLAN 20, enable the FCoE function on VLAN 20, and map the VLAN to VSAN 2.

[H3C] vlan 20

[H3C-vlan20] fcoe enable vsan 2

[H3C-vlan20] quit

# Create FC aggregation interface 1 and generate FC aggregation group 1.

[H3C] interface san-aggregation 1

# Configure FC aggregation interface 1 to operate in the E mode.

[H3C-SAN-Aggregation1] fc mode e

# Add FC aggregation interface 1 to VSAN 2 in access mode, set the trunk mode of FC aggregation interface 1 to On, and add the interface to VSAN 2 in trunk mode so that VSAN 2 packets are permitted to pass.

[H3C-SAN-Aggregation1] port access vsan 2

[H3C-SAN-Aggregation1] port trunk mode on

[H3C-SAN-Aggregation1] port trunk vsan 2

[H3C-SAN-Aggregation1] quit

# Add the FC 1/1/1 and FC 1/1/2 ports to FC aggregation group 1.

[H3C] interface fc 1/1/1

[H3C-Fc1/1/1] shutdown

[H3C-Fc1/1/1] san-aggregation group 1

The FC mode, trunk mode, trunk VSAN, and access VSAN settings of the FC interface will be lost. Continue? [Y/N]:y

[H3C-Fc1/1/1] undo shutdown

[H3C-Fc1/1/1] quit

[H3C] interface fc 1/1/2

[H3C-Fc1/1/2] shutdown

[H3C-Fc1/1/2] san-aggregation group 1

The FC mode, trunk mode, trunk VSAN, and access VSAN settings of the FC interface will be lost. Continue? [Y/N]:y

[H3C-Fc1/1/2] undo shutdown

[H3C-Fc1/1/2] quit

# Add the FC 1/1/3 port connected to the 3Par storage to VSAN 2 and configure it to operate in the F mode.

[H3C] interface fc1/1/3

[H3C-Fc1/1/3] fc mode f

[H3C-Fc1/1/3] port access vsan 2

[H3C-Fc1/1/3] quit

# Save the configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

Querying the WWN of the node connected to the FC switch module

Execute the display fc login command on the FC switch module to view information about the nodes connected to the module. FC switch module 3 is used as an example:

<H3C> display fc login

Interface VSAN FCID Node WWN Port WWN

Fc1/0/11 1 0x760000 20:00:f4:e9:d4:53:f1:c5 21:00:f4:e9:d4:53:f1:c5

Fc1/0/12 1 0x760001 20:00:f4:e9:d4:58:6a:06 21:00:f4:e9:d4:58:6a:06

Table 3 Description of the information displayed by executing the display fc login command

| Field | Description |
|---|---|
| Interface | The port through which the switch and the node are connected |
| VSAN | VSAN ID |
| FCID | The FC address of the node assigned by the switch |
| Node WWN | The WWN of the node |
| Port WWN | The WWN of the port through which the node and the switch are connected |
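When the WWNs are needed later for the storage configuration, the display fc login output can be turned into structured data. The following is a minimal sketch that assumes the five-column layout shown above. The function name parse_fc_login is hypothetical, and the sample text is copied from the output in this example.

```python
def parse_fc_login(text):
    """Parse `display fc login` output into a list of dicts, one per logged-in node."""
    rows = []
    for line in text.splitlines():
        fields = line.split()
        # Data rows have five columns and start with an interface name such as Fc1/0/11.
        if len(fields) == 5 and fields[0].startswith("Fc"):
            iface, vsan, fcid, node_wwn, port_wwn = fields
            rows.append({
                "interface": iface,
                "vsan": int(vsan),
                "fcid": fcid,
                "node_wwn": node_wwn,
                "port_wwn": port_wwn,
            })
    return rows


sample = """\
Interface VSAN FCID Node WWN Port WWN
Fc1/0/11 1 0x760000 20:00:f4:e9:d4:53:f1:c5 21:00:f4:e9:d4:53:f1:c5
Fc1/0/12 1 0x760001 20:00:f4:e9:d4:58:6a:06 21:00:f4:e9:d4:58:6a:06
"""

logins = parse_fc_login(sample)
port_wwns = {row["interface"]: row["port_wwn"] for row in logins}
```

The port_wwns dictionary maps each interface to the port WWN of the node logged in on it.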

Configuring 3Par storage

See "Configuring 3Par storage" for the process of configuring 3Par storage.

Configuring the OS

IMPORTANT:

· The following information describes how to configure multipath under Red Hat 7.5. Multipath provides load balancing and reliability for the traffic between hosts and storage devices. For the procedure for configuring multipath and verifying the configuration under Windows, see "Configuring multipath and adding network disks on Windows Server."

· Before configuration, ensure that the multipath tool is installed on the OS. Most Linux systems include it as a built-in tool.

Preparation

Execute the fdisk -l command under the OS to view the two virtual volumes identified by the host, as shown in Figure 15.

Figure 15 Identifying the virtual volumes

NOTE: If no disk is found, execute the echo 1 > /sys/class/fc_host/hostnum/issue_lip command to manually refresh fc_host, where hostnum indicates the host number under the /sys/class/fc_host directory, such as host1.
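If the server has several FC hosts, the refresh described in the note can be applied to each of them in one pass. The following is a minimal sketch under the assumption that every host directory under /sys/class/fc_host exposes an issue_lip file. The function name issue_lip_all is hypothetical, and the base directory is a parameter so that the logic can be tried against a scratch directory first. Writing to the real sysfs files requires root privileges.

```python
from pathlib import Path


def issue_lip_all(fc_host_dir="/sys/class/fc_host"):
    """Write 1 to issue_lip under every host* directory and return the host names touched."""
    touched = []
    for host in sorted(Path(fc_host_dir).glob("host*")):
        lip = host / "issue_lip"
        # Skip entries that do not expose issue_lip.
        if lip.exists():
            lip.write_text("1\n")
            touched.append(host.name)
    return touched
```

Calling issue_lip_all() with no argument targets the real sysfs tree and is the equivalent of repeating the echo command for each host number.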

Configuring multipath

1. Execute the following commands in turn to load the multipath kernel module, load the round-robin path selector, start the multipath service, and create the multipath devices.

# modprobe dm-multipath

# modprobe dm-round-robin

# systemctl start multipathd.service

# multipath -v2

NOTE: If the system reports that the multipath.conf file does not exist under the Red Hat operating system, copy the file from another location and execute the command again, as shown in Figure 16.

Figure 16 Handling the case when the multipath.conf file does not exist

2. Execute the following command to restart the multipath service.

# systemctl restart multipathd.service

3. Execute the following command to view the multipath link status. You can see that both paths are in the active state, as shown in Figure 17.

# multipath -ll

Figure 17 Viewing the multipath link status
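The check in step 3 can also be scripted. The following is a minimal sketch that counts the path lines reported as active in multipath -ll output. The function name count_active_paths is hypothetical, and the sample text is illustrative only: its WWID, device names, and size are made up, because the real output of this example is available only in Figure 17.

```python
def count_active_paths(multipath_ll_output):
    """Count path lines that end with 'active ready running'."""
    return sum(
        1
        for line in multipath_ll_output.splitlines()
        if line.rstrip().endswith("active ready running")
    )


# Illustrative output only; the WWID, device names, and size are invented.
sample = """\
mpatha (360002ac0000000000000000000000000) dm-2 3PARdata,VV
size=10G features='0' hwhandler='1 alua' wp=rw
`-+- policy='round-robin 0' prio=50 status=active
  |- 1:0:0:0 sdb 8:16 active ready running
  `- 2:0:0:0 sdc 8:32 active ready running
"""

active = count_active_paths(sample)
```

For the topology in this example, a count of 2 for the volume would correspond to one path through each FC switch module.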

Verifying the configuration

Identifying storage volumes

As shown in Figure 18, execute the fdisk -l command under the OS to view the two virtual volumes identified by the host, indicating that the server-to-storage FC link is connected and the storage volumes are mounted.

Figure 18 Identifying the virtual volumes

Verifying the configuration of the FC aggregation group

After FC aggregation is configured, the mapping between the downlink ports (connected to the mezzanine card ports on the blade servers) and the uplink ports (connected to the FC switches) on the FC switch modules changes. FC switch module 3 is used as an example.

# Display the brief information about FC aggregation group 1 on the FC switch module.

[H3C] display san-aggregation interface san-aggregation 1

* indicates the member port is selected.

Interface State Mode Speed Member port

SAGG1 UP E 16Gbps *Fc1/1/1

*Fc1/1/2

# Display the details of FC aggregation group 1 on the FC switch module.

[H3C] display san-aggregation verbose interface san-aggregation 1

Interface SAN-Aggregation1:

State : UP

Mode : E

Speed : 32Gbps

Member port number : 2

Selected port number : 2

Member port State Mode Speed Selected

Fc1/1/1 UP E 16Gbps Y

Fc1/1/2 UP E 16Gbps Y

The above information indicates that both the FC 1/1/1 and FC 1/1/2 ports are selected for sharing traffic load. The FC aggregation interface has a speed of 32 Gbps that is the sum of the speeds of two FC ports. When one FC port fails, the other FC port can transmit the traffic, thus improving the reliability of link connection.
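The relation between the aggregate speed and the member speeds (16 Gbps + 16 Gbps = 32 Gbps) can be checked from the verbose output. The following is a minimal sketch that assumes the field layout shown above. The function name aggregate_vs_members is hypothetical.

```python
import re


def aggregate_vs_members(verbose_output):
    """Return (aggregate speed, sum of selected member speeds), both in Gbps."""
    aggregate = None
    member_sum = 0
    for line in verbose_output.splitlines():
        fields = line.split()
        speed = re.match(r"Speed\s*:\s*(\d+)Gbps", line.strip())
        if speed:
            aggregate = int(speed.group(1))
        # Member rows: port, state, mode, speed, selected flag (Y means selected).
        elif len(fields) == 5 and fields[0].startswith("Fc") and fields[4] == "Y":
            member_sum += int(fields[3].replace("Gbps", ""))
    return aggregate, member_sum


sample = """\
Interface SAN-Aggregation1:
State : UP
Mode : E
Speed : 32Gbps
Member port number : 2
Selected port number : 2
Member port State Mode Speed Selected
Fc1/1/1 UP E 16Gbps Y
Fc1/1/2 UP E 16Gbps Y
"""

result = aggregate_vs_members(sample)
```

If one member port is no longer selected, the second value drops to the speed of the remaining port.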

Accessing virtual volumes by the system through multiple paths

After multiple paths are configured, execute the fdisk -l command again to view the new mpatha volume, indicating that the system can access virtual volumes through multiple paths, as shown in Figure 19. This ensures service continuity when any FC switch or FC switch module fails.

Figure 19 Viewing the mpatha volume

Example: Configuring FC (directly connecting FC switch modules to the 3Par storage)

Network requirement

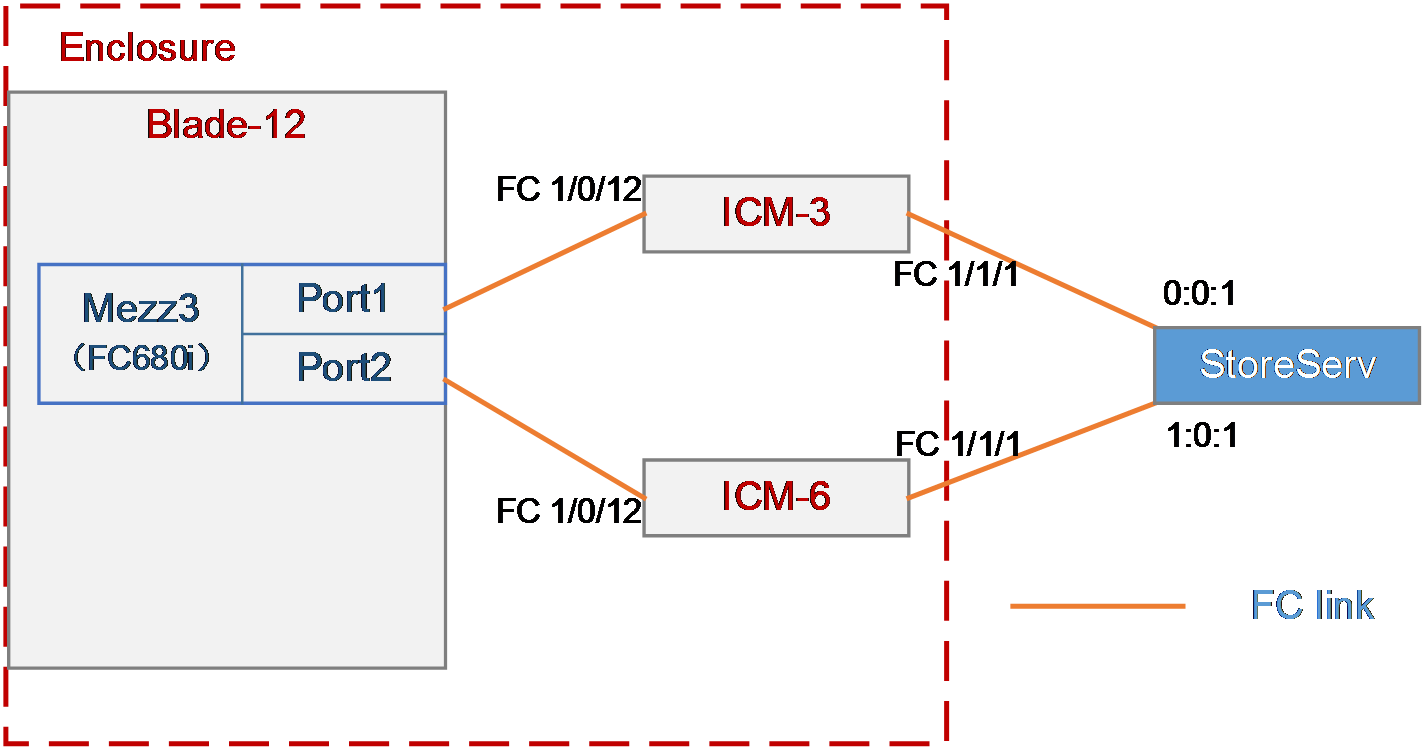

As shown in Figure 20, a blade server and two FC switch modules are installed in the H3C B16000 blade server chassis. The blade server is installed in slot 12, the two FC switch modules are installed in slot 3 and slot 6, and the mezzanine card is installed in the mezzanine card slot 3 of the blade server. The FC 1/1/1 port of each of the two FC switch modules is directly connected to the 0:0:1 or 1:0:1 port of the 3Par storage.

In this example, the following devices and modules are used: blade server (H3C UniServer B5700 G3), mezzanine card (NIC-FC680i-Mb-2*16G, "FC680i"), FC switch module (BX608FE), and FC storage device (H3C CF8840 from the HPE 3Par StoreServ family).

The following requirements are expected to be met:

The OS on the blade server can mount storage volumes on the 3Par to realize remote storage, and data on the 3Par can be accessed through the OS when any interconnect module fails.

Figure 20 Network diagram between FC switch modules (FCF mode) and the FC storage device

Analysis

· To mount a 3Par storage volume under the OS, build an FC link between the blade server and the 3Par storage, and create a storage volume on the 3Par storage. Then, the OS can automatically identify the storage volume.

· In this example, the FC switch modules are directly connected to the FC storage device. You need to configure the mode of the two FC switch modules to FCF.

· To ensure service continuity when any interconnect module fails, you need to configure the multipath function under the OS to improve network reliability.

· As a best practice, connect two interconnect modules to two different FC switches respectively for link redundancy, thus avoiding network disconnection caused by FC switch failure.

Software versions used

This example was created and verified on versions SWITCH_SYS-1.00.11 and OM-1.00.11 of interconnect modules.

Configuration precautions

· Before adding a storage volume, ensure that the blade server has installed the OS and the appropriate network adapter driver, so that the OS can identify the FC680i network adapter correctly. For details about installing the OS, see H3C Servers Operating System Installation Guide. For details about installing the network adapter driver, see FC680i Mezzanine Card Module User Guide.

· After mounting the storage volumes and replacing existing storage volumes on the 3Par with new ones, reboot the OS of the blade server to identify the new volumes.

Configuration procedure

Querying port information

Query the connection relation between the internal ports of mezzanine cards and interconnect modules according to "Querying port relations."

It can be seen that the FC 1/0/12 ports of the interconnect module 3 and interconnect module 6 are used in this example.

Configuring the mezzanine card

In the FC networking, the FC mezzanine card can be used correctly after being installed in place. There are no other special requirements in this example, so you do not need to configure the mezzanine card.

Configuring the interconnect module

Configuring interconnect module 3

NOTE: Internal ports of the BX608FE interconnect module are shut down by default. When you configure the module for the first time or after you restore the default configuration file, execute the undo shutdown command to enable the relevant internal port before configuring it. To determine which internal port the mezzanine card port is connected to, see "Querying port relations."

# Configure the FCoE mode of the switch to FCF.

<H3C> system-view

[H3C] fcoe-mode fcf

# Create the VSAN. In this example, create VSAN 1 and allow members in the default zone to access each other.

[H3C] vsan 1

[H3C-vsan1] zone default-zone permit

[H3C-vsan1] quit

# Create the VLAN to map VSAN. In this example, create VLAN 10, enable the FCoE function on VLAN 10, and map the VLAN to VSAN 1.

[H3C] vlan 10

[H3C-vlan10] fcoe enable vsan 1

[H3C-vlan10] quit

# Configure the port connected to the 3Par storage server to operate in the F mode.

[H3C] interface fc1/1/1

[H3C-Fc1/1/1] fc mode f

[H3C-Fc1/1/1] quit

# Save the configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

Configuring interconnect module 6

NOTE: Internal ports of the BX608FE interconnect module are shut down by default. When you configure the module for the first time or after you restore the default configuration file, execute the undo shutdown command to enable the relevant internal port before configuring it. To determine which internal port the mezzanine card port is connected to, see "Querying port relations."

# Configure the FCoE mode of the switch to FCF.

<H3C> system-view

[H3C] fcoe-mode fcf

# Create the VSAN. In this example, create VSAN 2 and allow members in the default zone to access each other.

[H3C] vsan 2

[H3C-vsan2] zone default-zone permit

[H3C-vsan2] quit

# Create the VLAN to map VSAN. In this example, create VLAN 20, enable the FCoE function on VLAN 20, and map the VLAN to VSAN 2.

[H3C] vlan 20

[H3C-vlan20] fcoe enable vsan 2

[H3C-vlan20] quit

# Configure the port connected to the 3Par storage server to operate in the F mode.

[H3C] interface fc1/1/1

[H3C-Fc1/1/1] fc mode f

[H3C-Fc1/1/1] port access vsan 2

[H3C-Fc1/1/1] quit

# Add the internal port to VSAN 2.

[H3C] interface fc1/0/12

[H3C-Fc1/0/12] port access vsan 2

[H3C-Fc1/0/12] quit

# Save the configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

Querying the WWN of the node connected to the FC switch module

Execute the display fc login command on the FC switch module to view information about the nodes connected to the module. FC switch module 3 is used as an example:

<H3C> display fc login

Interface VSAN FCID Node WWN Port WWN

Fc1/0/12 1 0x760000 20:00:f4:e9:d4:53:f1:c5 21:00:f4:e9:d4:53:f1:c5

Fc1/1/1 1 0x760001 2f:f7:00:02:ac:02:28:66 21:01:00:02:ac:02:28:66

Table 4 Description of the information displayed by executing the display fc login command

| Field | Description |
|---|---|
| Interface | The port through which the switch and the node are connected |
| VSAN | VSAN ID |
| FCID | The FC address of the node assigned by the switch |
| Node WWN | The WWN of the node |
| Port WWN | The WWN of the port through which the node and the switch are connected |
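In the direct-connect topology, the server and the storage can reach each other only if both are logged in to the same VSAN on the module. The following is a minimal sketch of that check. It assumes that Fc1/0/x ports are internal (server side) and that all other FC ports are external (storage side), as in this example. The function name vsans_with_both_ends is hypothetical, and the input rows are the Interface and VSAN columns from the output above.

```python
def vsans_with_both_ends(login_rows):
    """Return the VSAN IDs that contain at least one internal and one external login."""
    internal, external = set(), set()
    for iface, vsan in login_rows:
        # Assumption: Fc1/0/x ports are internal; any other FC port is external.
        if iface.startswith("Fc1/0/"):
            internal.add(vsan)
        else:
            external.add(vsan)
    return sorted(internal & external)


rows = [("Fc1/0/12", 1), ("Fc1/1/1", 1)]  # Interface and VSAN columns
ok_vsans = vsans_with_both_ends(rows)
```

An empty result would suggest that the internal port and the storage-facing port were added to different VSANs.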

Configuring 3Par storage

See "Configuring 3Par storage" for the process of configuring the 3Par storage server.

Configuring the OS

NOTE:

· The following information describes how to configure multipath under Red Hat 7.5. Multipath provides load balancing and reliability for the traffic between hosts and storage devices. For the procedure for configuring multipath and verifying the configuration under Windows, see "Configuring multipath and adding network disks on Windows Server."

· Before configuration, ensure that the multipath tool is installed on the OS. Most Linux systems include it as a built-in tool.

Preparation

Execute the fdisk -l command under the OS to view the two virtual volumes identified by the host, as shown in Figure 21.

Figure 21 Identifying the virtual volumes

NOTE: If no disk is found, execute the echo 1 > /sys/class/fc_host/hostnum/issue_lip command to manually refresh fc_host, where hostnum indicates the host number under the /sys/class/fc_host directory, such as host1.

Configuring multipath

1. Execute the following commands in turn to load the multipath kernel module, load the round-robin path selector, start the multipath service, and create the multipath devices.

# modprobe dm-multipath

# modprobe dm-round-robin

# service multipathd start

# multipath -v2

NOTE: If the system reports that the multipath.conf file does not exist under the Red Hat operating system, copy the file from another location and execute the command again, as shown in Figure 22.

Figure 22 Handling the case when the multipath.conf file does not exist

2. Execute the following command to restart the multipath service.

# systemctl restart multipathd.service

Verifying the configuration

After configuring the multipath, execute the fdisk -l command again. You can see a new mpatha volume, indicating that the system can access virtual volumes through multiple paths, as shown in Figure 23.

Figure 23 Viewing the mpatha volume

Example: Configuring FCoE (connecting aggregation interconnect modules to FC switches)

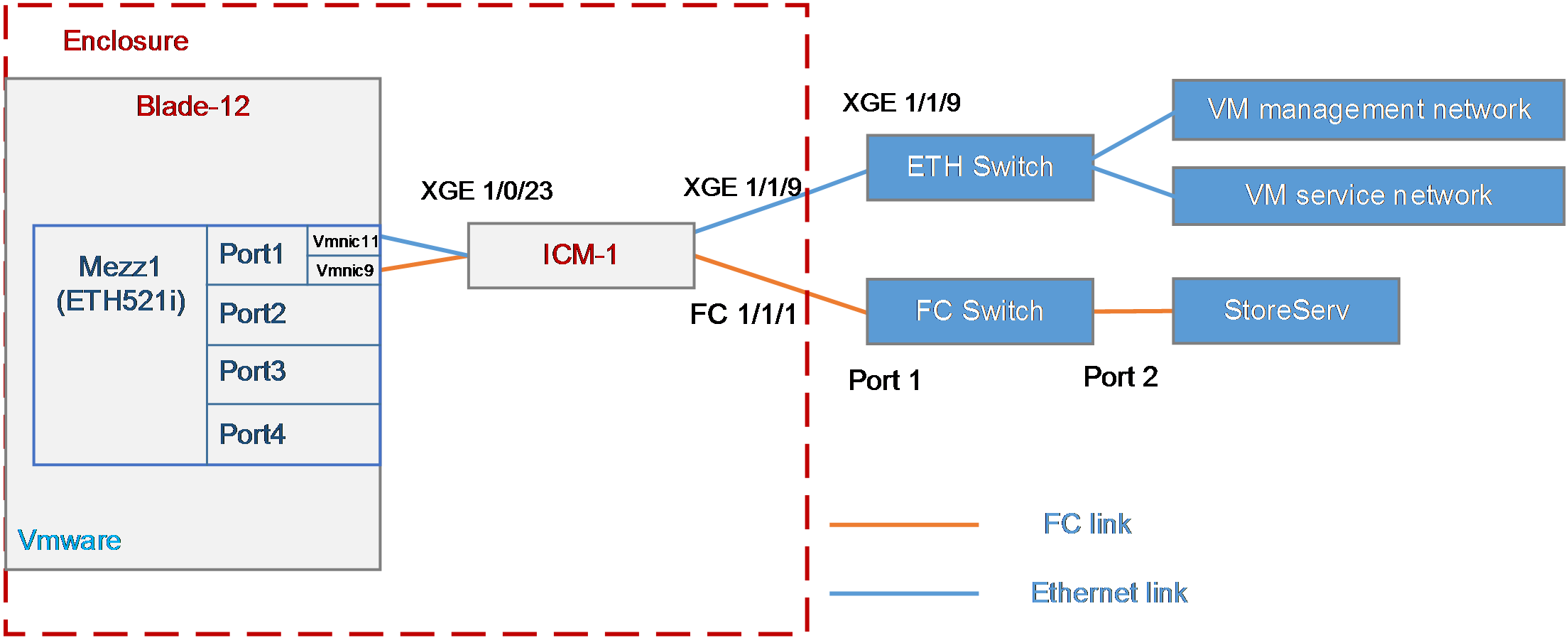

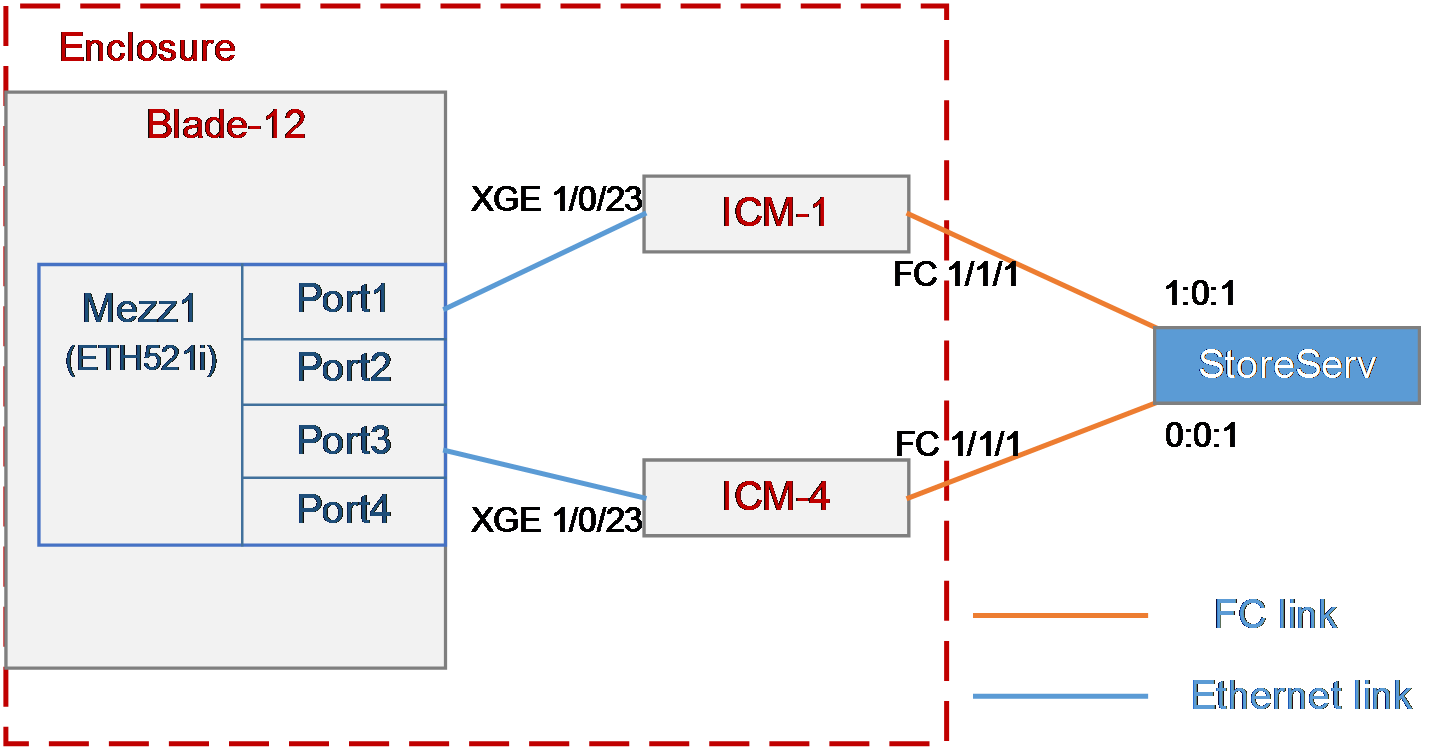

Network requirement

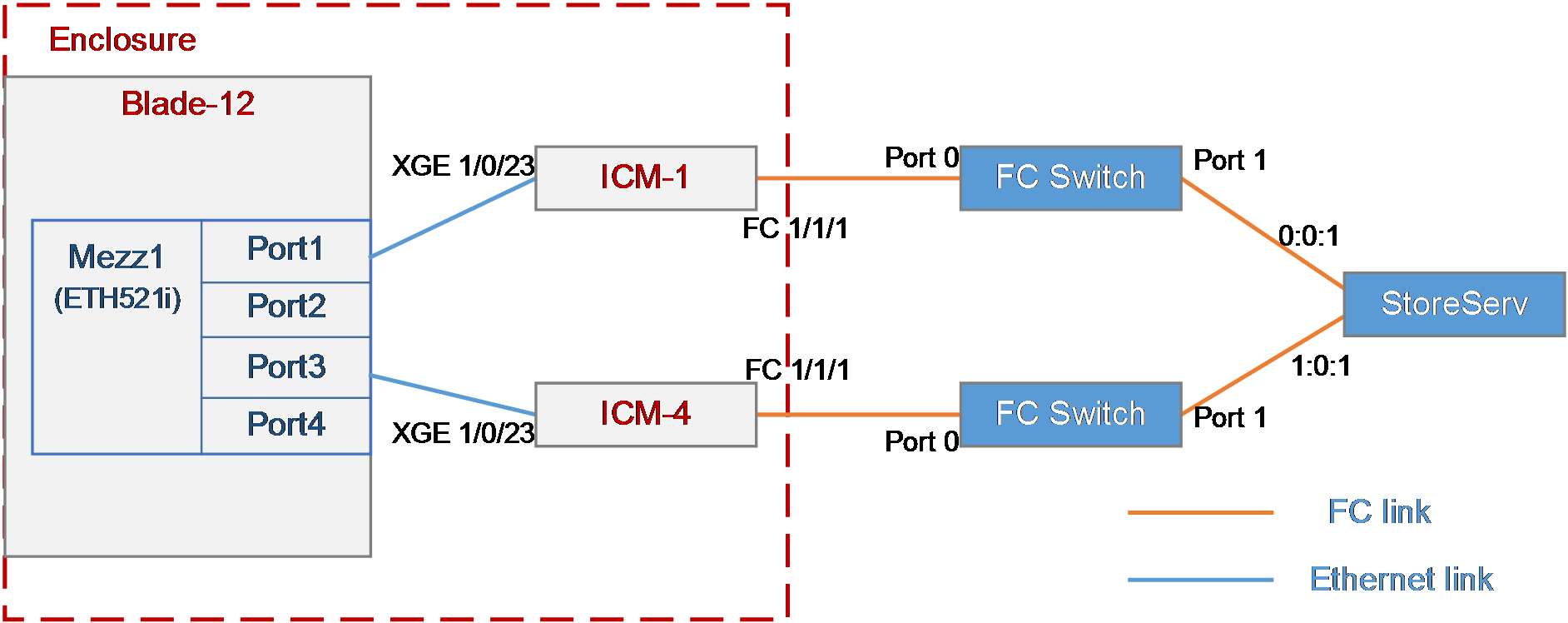

As shown in Figure 24, a blade server and two aggregation interconnect modules are installed in the H3C B16000 blade server chassis. The blade server is installed in slot 12, two aggregation interconnect modules are installed in slot 1 and slot 4, and the mezzanine card is installed in the mezzanine card slot 1 of the blade server. The FC 1/1/1 port of each of the two aggregation interconnect modules is connected to the FC switch, and the FC switch is connected to the 0:0:1 port of the FC storage device.

In this example, the following devices and modules are used: blade server (H3C UniServer B5700 G3), mezzanine card (NIC-ETH521i-Mb-4*10G, "ETH521i"), aggregation interconnect module (BX720EF), FC switch (Brocade 6510), and FC storage device (H3C CF8840 from the HPE 3Par StoreServ family).

The following requirements are expected to be met:

The OS on the blade server can mount storage volumes on the 3Par to realize lossless remote storage, and you can still access data on the 3Par through the OS when any interconnect module fails.

Analysis

· To mount a 3Par storage volume under the OS, build an FC link between the blade server and the 3Par storage, and create a storage volume on the 3Par storage. Then, the OS can automatically identify the storage volume.

· In this example, aggregation interconnect modules are used, and the blade server and interconnect modules are connected through Ethernet links. Therefore, you need to configure the FCoE function on mezzanine cards and interconnect modules to transmit FC traffic.

· The first eight external ports on the BX720EF aggregation interconnect module can operate as either FC or Ethernet ports. The ports used in this example need to be switched to the FC mode.

· In this example, the aggregation interconnect modules are directly connected to FC switches. You need to configure the mode of the two interconnect modules to NPV.

· To ensure service continuity when any interconnect module fails, you need to configure the multipath function under the OS to improve network reliability.

· As a best practice, configure the PFC and ETS functions of DCBX and auto-negotiation modes on the internal ports of the interconnect modules, to transmit storage data traffic of a SAN network over Ethernet links without losses.

Software versions used

This example was created and verified on versions SWITCH_SYS-1.00.11 and OM-1.00.11 of interconnect modules.

Configuration precautions

· Before adding a storage volume, ensure that the blade server has installed the OS and the appropriate network adapter driver, so that the OS can identify the ETH521i network adapter correctly. For details about installing the OS, see H3C Servers Operating System Installation Guide. For details about installing the network adapter driver, see ETH521i Mezzanine Card Module User Guide.

· After mounting the storage volumes and replacing existing storage volumes on the 3Par with new ones, reboot the OS of the blade server to identify the new volumes.

· As a best practice, disable STP on the internal ports of the interconnect modules. Otherwise, rebooting, removing, or inserting a blade server can trigger STP recalculation on the interconnect modules and briefly disconnect their external ports.

· Some packets are transmitted through VLAN 1 during FCoE protocol negotiation. To ensure correct negotiation, do not modify the default settings of VLAN 1, so that VLAN 1 traffic can pass normally between the interconnect modules and blade servers.

· If you configure both FC ports and VFC ports on your devices, execute the qos trust dot1p command to enable normal PFC of the VFC ports.

Configuration procedure

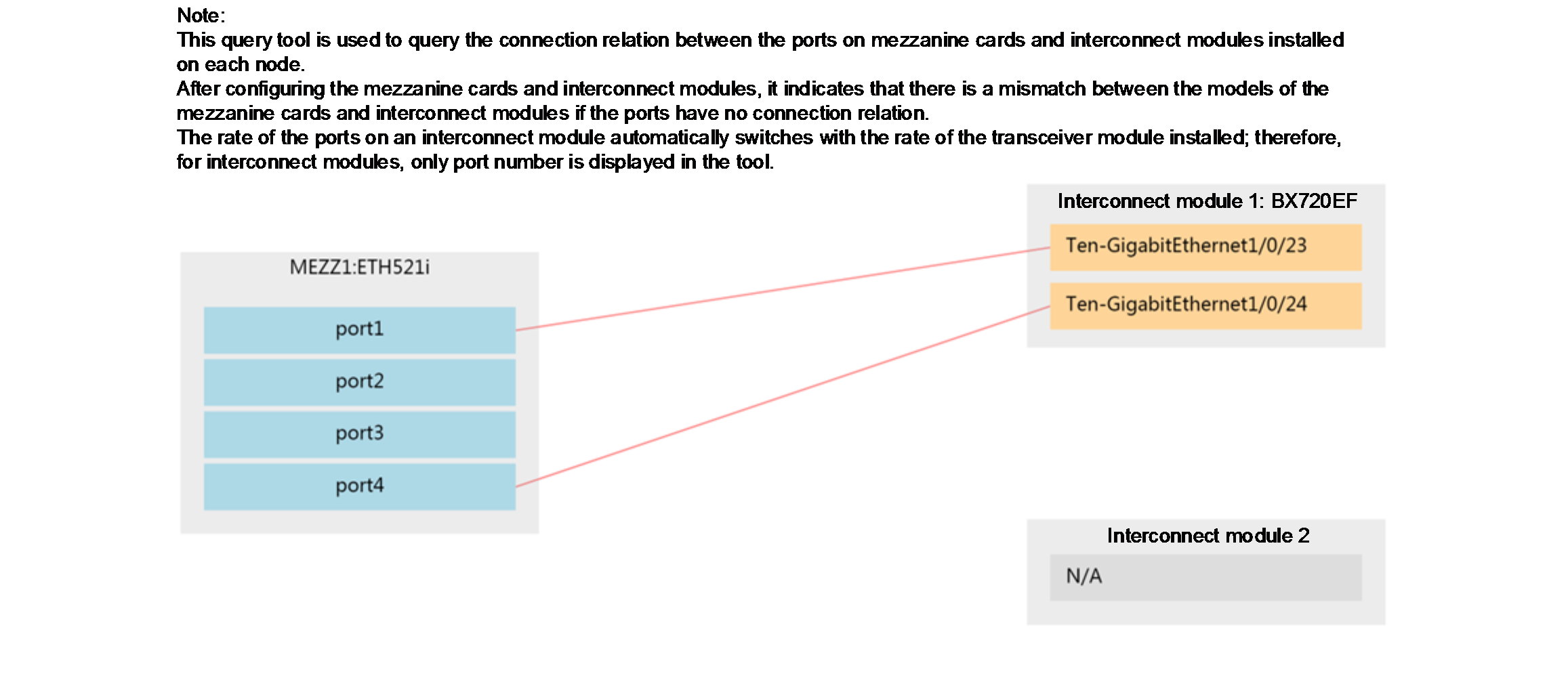

Querying port information

Query the correspondence among mezzanine cards, internal ports of interconnect modules, and network adapters under the OS according to "Querying port relations."

It can be learned that:

· In this example, Port 1 and Port 3 of mezzanine card 1 are used and are named ens5f0 and ens5f2 under the OS, respectively.

· Ten-gigabitethernet 1/0/23 ports of interconnect modules 1 and 4 are used in this example.

Configuring the mezzanine card

NOTE:

· In this example, Red Hat 7.5 is installed on the blade server and is used in the following steps.

· If Windows Server is installed on the blade server, you do not need to configure the mezzanine card under the OS.

1. Configure the FCoE function of ens5f0 and ens5f2 ports:

a. In the /etc/fcoe directory, execute the cp cfg-ethx cfg-ethM command to create the FCoE port configuration file from the template, where ethM is the name of the port used for the FCoE connection. In this example, the files are cfg-ens5f0 and cfg-ens5f2. The following steps use cfg-ens5f0 as an example.

[root@localhost]# cd /etc/fcoe/

[root@localhost fcoe]# ls

cfg-ethx

[root@localhost fcoe]# cp cfg-ethx cfg-ens5f0

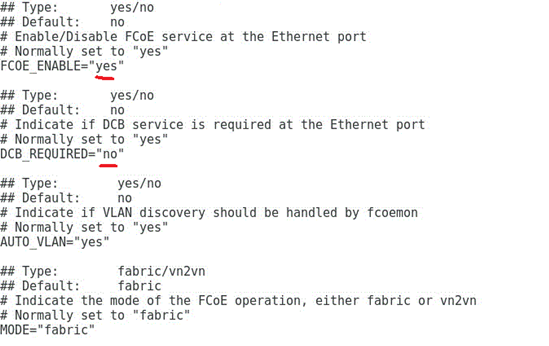

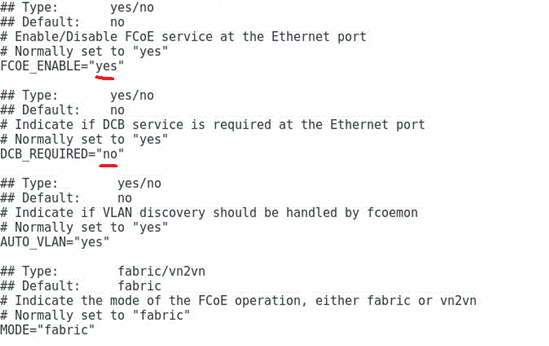

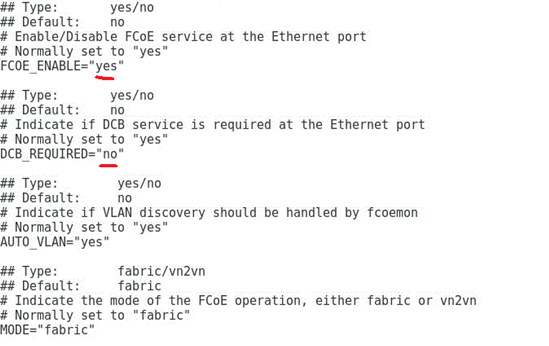

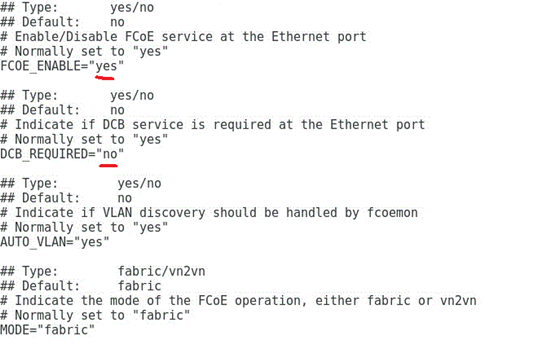

b. Execute the vi cfg-ethM command to edit the FCoE port configuration file. Set FCOE_ENABLE to yes and DCB_REQUIRED to no, and then save the file, as shown in Figure 25.

[root@localhost fcoe]# vi cfg-ens5f0

Figure 25 Editing the FCoE port configuration file

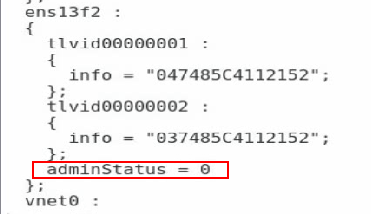

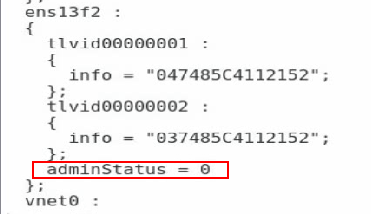

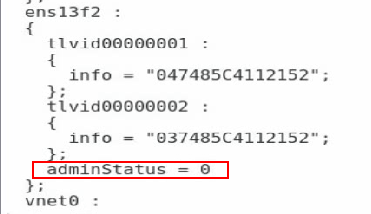

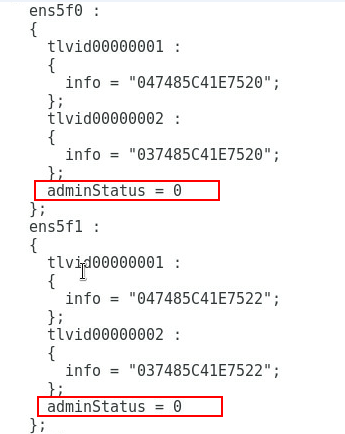

c. Execute the lldptool set-lldp -i ethM adminStatus=disabled command to set the LLDP admin status of the port to disabled. Then, check the configuration value of adminStatus of ethM in the /var/lib/lldpad/lldpad.conf configuration file. If it is zero, the command is executed successfully. If it is not, manually add a line "adminStatus = 0" to the "ethM" entry under "lldp" in the configuration file, as shown in Figure 26.

[root@localhost fcoe]# lldptool set-lldp -i ens5f0 adminStatus=disabled

Figure 26 Disabling the LLDP admin status

d. Execute the service fcoe restart and service lldpad restart commands to restart the FCoE and LLDP services.

[root@localhost fcoe]# service lldpad restart

[root@localhost fcoe]# service fcoe restart

e. Execute the chkconfig fcoe on and chkconfig lldpad on commands to automatically load the FCoE and LLDP services during the boot phase.

[root@localhost fcoe]# chkconfig fcoe on

[root@localhost fcoe]# chkconfig lldpad on
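The edit in step b has to be repeated for each FCoE port file, so it can be convenient to script it. The following is a minimal sketch that rewrites the two settings named in step b in the text of a configuration file. The function name set_fcoe_options is hypothetical, and the template content is illustrative: the real cfg-ethx file may contain more settings, which the function leaves unchanged.

```python
import re


def set_fcoe_options(cfg_text, options):
    """Return cfg_text with each KEY="value" line rewritten for the keys in options."""
    lines = []
    for line in cfg_text.splitlines():
        match = re.match(r"^(\w+)\s*=", line)
        # Rewrite only the keys that were asked for; keep every other line as is.
        if match and match.group(1) in options:
            key = match.group(1)
            line = f'{key}="{options[key]}"'
        lines.append(line)
    return "\n".join(lines) + "\n"


# Illustrative starting content, not the full template shipped with the OS.
template = '# illustrative template\nFCOE_ENABLE="no"\nDCB_REQUIRED="yes"\n'
updated = set_fcoe_options(template, {"FCOE_ENABLE": "yes", "DCB_REQUIRED": "no"})
```

The returned text can be written to cfg-ens5f0 and cfg-ens5f2 before the services are restarted in step d.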

Configuring the interconnect module

Interconnecting interconnect modules and mezzanine cards

1. Configuring FCoE-related settings and ports

<H3C> system-view

# Configure the FCoE mode of the switch to NPV.

[H3C] fcoe-mode npv

# Create the VSAN on interconnect module 1. In this example, VSAN 1 is created.

[H3C] vsan 1

[H3C-vsan1] quit

# Create VLAN 10 on interconnect module 1 to map VSAN 1, and enable the FCoE function on VLAN 10.

[H3C] vlan 10

[H3C-vlan10] fcoe enable vsan 1

[H3C-vlan10] quit

# Create VFC ports on interconnect module 1, and bind them with internal ports XGE 1/0/23.

[H3C] interface vfc 1

[H3C-Vfc1] bind interface Ten-GigabitEthernet1/0/23

[H3C-Vfc1] port trunk vsan 1

[H3C-Vfc1] quit

# Configure the internal physical ports of interconnect module 1 to the trunk mode and allow VLAN 10 to pass.

[H3C] interface Ten-GigabitEthernet1/0/23

[H3C-Ten-GigabitEthernet1/0/23] port link-type trunk

[H3C-Ten-GigabitEthernet1/0/23] port trunk permit vlan 10

[H3C-Ten-GigabitEthernet1/0/23] undo stp enable

[H3C-Ten-GigabitEthernet1/0/23] quit

# Create the VSAN on interconnect module 4. In this example, VSAN 2 is created.

[H3C] vsan 2

[H3C-vsan2] quit

# Create VLAN 20 on interconnect module 4 to map VSAN 2 and enable the FCoE function on VLAN 20.

[H3C] vlan 20

[H3C-vlan20] fcoe enable vsan 2

[H3C-vlan20] quit

# Create VFC ports on interconnect module 4, and bind them with internal ports XGE 1/0/23.

[H3C] interface vfc 2

[H3C-Vfc2] bind interface Ten-GigabitEthernet1/0/23

[H3C-Vfc2] port trunk vsan 2

[H3C-Vfc2] quit

# Configure the internal physical ports of interconnect module 4 to the trunk mode and allow VLAN 20 to pass.

[H3C] interface Ten-GigabitEthernet1/0/23

[H3C-Ten-GigabitEthernet1/0/23] port link-type trunk

[H3C-Ten-GigabitEthernet1/0/23] port trunk permit vlan 20

[H3C-Ten-GigabitEthernet1/0/23] undo stp enable

[H3C-Ten-GigabitEthernet1/0/23] quit

2. Deploying DCBX

# Enable LLDP function globally.

[H3C] lldp global enable

# Create a Layer 2 ACL named DCBX and create rules for it to match FCoE packets (protocol number 0x8906) and FIP packets (protocol number 0x8914).

[H3C] acl mac name DCBX

[H3C-acl-mac-DCBX] rule 0 permit type 8906 ffff

[H3C-acl-mac-DCBX] rule 5 permit type 8914 ffff

[H3C-acl-mac-DCBX] quit
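Each rule in the ACL compares the EtherType of a frame with a value under a mask, and a mask of ffff means all 16 bits must match. The following is a minimal sketch of that comparison, intended only to illustrate the matching logic and not the device implementation. The names RULES and matches_dcbx_acl are hypothetical.

```python
# (value, mask) pairs mirroring rule 0 (FCoE) and rule 5 (FIP) of ACL DCBX.
RULES = [(0x8906, 0xFFFF), (0x8914, 0xFFFF)]


def matches_dcbx_acl(ethertype):
    """Return True if the EtherType equals a rule value on all bits set in its mask."""
    return any((ethertype & mask) == (value & mask) for value, mask in RULES)


fcoe_hit = matches_dcbx_acl(0x8906)   # FCoE data frame
fip_hit = matches_dcbx_acl(0x8914)    # FIP frame
ipv4_hit = matches_dcbx_acl(0x0800)   # IPv4 frame, not matched by either rule
```

Only frames that match are later marked with 802.1p priority 3 by the traffic behavior.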

# Define a traffic class named DCBX with the OR operator between its match criteria, and configure it to match the ACL named DCBX.

[H3C] traffic classifier DCBX operator or

[H3C-classifier-DCBX] if-match acl mac name DCBX

[H3C-classifier-DCBX] quit

# Define a traffic behavior named DCBX that marks packets with 802.1p priority 3.

[H3C] traffic behavior DCBX

[H3C-behavior-DCBX] remark dot1p 3

[H3C-behavior-DCBX] quit

# Define a QoS policy named DCBX, associate class DCBX with traffic behavior DCBX in the policy, and specify the DCBX mode for the association.

[H3C] qos policy DCBX

[H3C-qospolicy-DCBX] classifier DCBX behavior DCBX mode dcbx

[H3C-qospolicy-DCBX] quit

# On the physical port bound to the VFC interface, enable LLDP to send packets that carry DCBX TLV fields, and apply the QoS policy DCBX to the outbound direction of the port.

[H3C] interface Ten-GigabitEthernet 1/0/23

[H3C-Ten-GigabitEthernet1/0/23] lldp tlv-enable dot1-tlv dcbx

[H3C-Ten-GigabitEthernet1/0/23] qos apply policy DCBX outbound

[H3C-Ten-GigabitEthernet1/0/23] quit

3. Deploying PFC

NOTE: The WGE 1/1/1 to WGE 1/1/8 ports of the BX1020EF switch module do not support the PFC function. Do not use these ports for FCoE networking. Otherwise, the network might not work correctly.

# On the physical port, enable PFC in auto-negotiation mode with the server, enable PFC for 802.1p priority 3, and configure the port to trust the 802.1p priority carried in packets.

[H3C] interface Ten-GigabitEthernet 1/0/23

[H3C-Ten-GigabitEthernet1/0/23] priority-flow-control auto

[H3C-Ten-GigabitEthernet1/0/23] priority-flow-control no-drop dot1p 3

[H3C-Ten-GigabitEthernet1/0/23] qos trust dot1p

[H3C-Ten-GigabitEthernet1/0/23] quit

4. Deploying ETS

# Configure the mapping from 802.1p priority to local priority, map 802.1p priority 3 to local priority 1, and map other 802.1p priorities to local priority 0.

[H3C] qos map-table dot1p-lp

[H3C-maptbl-dot1p-lp] import 3 export 1

[H3C-maptbl-dot1p-lp] import 0 export 0

[H3C-maptbl-dot1p-lp] import 1 export 0

[H3C-maptbl-dot1p-lp] import 2 export 0

[H3C-maptbl-dot1p-lp] import 4 export 0

[H3C-maptbl-dot1p-lp] import 5 export 0

[H3C-maptbl-dot1p-lp] import 6 export 0

[H3C-maptbl-dot1p-lp] import 7 export 0

[H3C-maptbl-dot1p-lp] quit
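The mapping above follows one rule: the FCoE 802.1p priority gets its own local priority, and every other 802.1p priority is mapped to local priority 0. As a sketch of that rule, the following shell function prints the map-table commands for a chosen FCoE priority. The function name gen_dot1p_lp_map is an assumption for illustration. It emits the entries in ascending order, which differs from the order typed above but describes the same mapping.

```shell
# Print a dot1p-lp map-table body: the FCoE priority goes to its own local
# priority, and every other 802.1p value goes to local priority 0.
# Arguments: FCoE 802.1p priority, local priority for FCoE traffic.
gen_dot1p_lp_map() (
  fcoe_dot1p="$1"; fcoe_lp="$2"
  echo "qos map-table dot1p-lp"
  for p in 0 1 2 3 4 5 6 7; do
    if [ "$p" -eq "$fcoe_dot1p" ]; then lp="$fcoe_lp"; else lp=0; fi
    echo "import $p export $lp"
  done
  echo "quit"
)

# Values used in this example: 802.1p priority 3 maps to local priority 1.
gen_dot1p_lp_map 3 1
```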

# Configure SP scheduling for the other queues on the physical port bound to the VFC interface.

[H3C] interface Ten-GigabitEthernet 1/0/23

[H3C-Ten-GigabitEthernet1/0/23] qos wrr af2 group sp

[H3C-Ten-GigabitEthernet1/0/23] qos wrr af3 group sp

[H3C-Ten-GigabitEthernet1/0/23] qos wrr af4 group sp

[H3C-Ten-GigabitEthernet1/0/23] qos wrr ef group sp

[H3C-Ten-GigabitEthernet1/0/23] qos wrr cs6 group sp

[H3C-Ten-GigabitEthernet1/0/23] qos wrr cs7 group sp

[H3C-Ten-GigabitEthernet1/0/23] quit

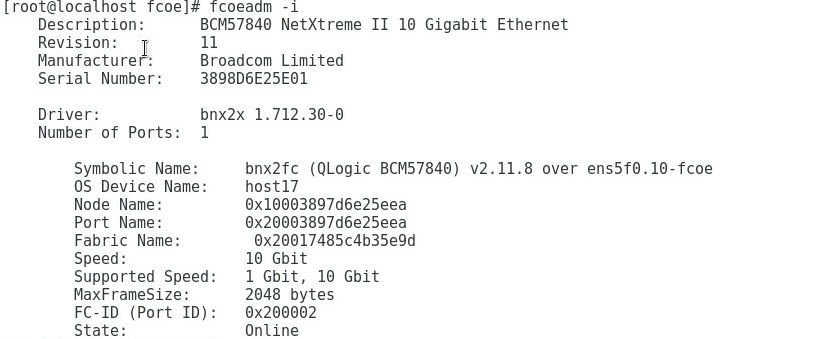

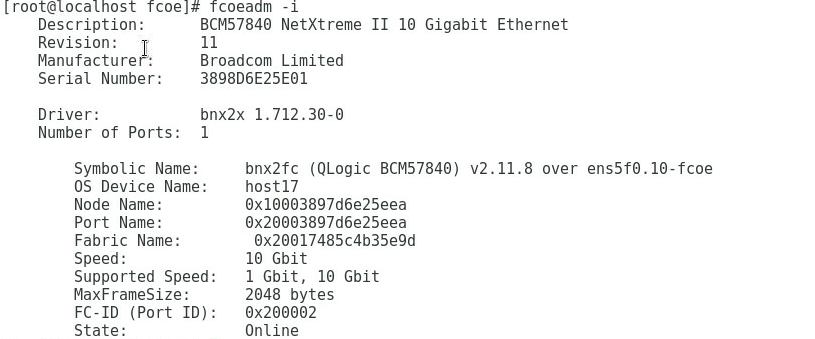

5. Verifying that the FCoE protocol has been negotiated

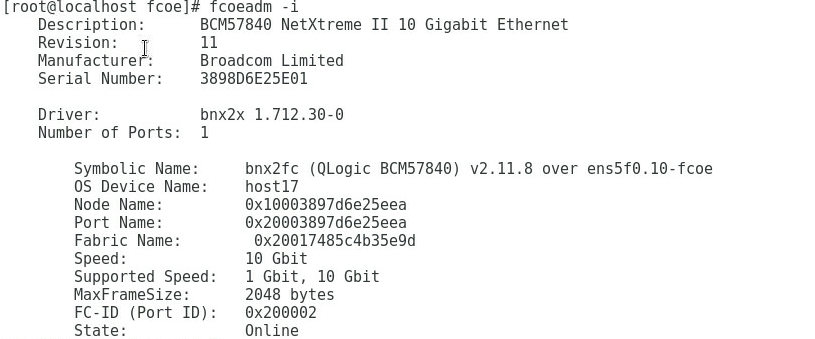

# Execute the fcoeadm -i command under the OS to query the information about the ports with FCoE configured and confirm that the protocol has been negotiated, as shown in Figure 27.

Figure 27 Querying the information about ports with FCoE configured

Interconnecting interconnect modules and FC switches

# Switch the external port XGE 1/1/1 to an FC port.

[H3C] interface Ten-GigabitEthernet 1/1/1

[H3C-Ten-GigabitEthernet1/1/1] port-type fc

The interface Ten-GigabitEthernet1/1/1, Ten-GigabitEthernet1/1/2, Ten-GigabitEthernet1/1/3, and Ten-GigabitEthernet1/1/4 will be deleted. Continue? [Y/N]:y

%Apr 3 18:49:23:050 2019 H3C OPTMOD/4/MODULE_IN: Fc1/1/1: The transceiver is 16G_FC_SW_SFP.

# Assign the FC 1/1/1 port on interconnect module 1 to VSAN 1 as an access port, set the port to NP mode, and configure it to trust the 802.1p priority carried in packets.

[H3C-Fc1/1/1] port access vsan 1

[H3C-Fc1/1/1] fc mode np

[H3C-Fc1/1/1] qos trust dot1p

# Assign the FC 1/1/1 port on interconnect module 4 to VSAN 2 as an access port, set the port to NP mode, and configure it to trust the 802.1p priority carried in packets.

[H3C-Fc1/1/1] port access vsan 2

[H3C-Fc1/1/1] fc mode np

[H3C-Fc1/1/1] qos trust dot1p

# Save the configuration.

[H3C] save

The current configuration will be written to the device. Are you sure? [Y/N]:y

Please input the file name(*.cfg)[flash:/startup.cfg]

(To leave the existing filename unchanged, press the enter key):

# Check the information about the VFC port on the interconnect module, and confirm that the VFC port is UP.

[H3C] display interface vfc 1 brief

Brief information on VFC interface(s):

Admin Mode: E - e port; F - f port; NP - n port proxy

Oper Mode: TE - trunking e port; TF - trunking f port;

TNP - trunking n port proxy

Interface  Admin Mode  Admin Trunk Mode  Oper Mode  Status  Bind Interface

Vfc1       F           on                --         UP      XGE1/0/23

Querying the WWN number of the mezzanine card

Execute the display npv login command on the aggregation interconnect module to view the login information about the node devices connected to the downlink ports of the module and the uplink ports to which they are mapped. The command output contains the detailed information, as shown in Table 2.

<H3C> display npv login

Server Interface  VSAN  FCID      Node WWN                 Port WWN                 External Interface

Vfc1              1     0x021101  20:00:f4:e9:D4:53:f1:c5  21:00:f4:e9:D4:53:f1:c5  Fc1/1/1
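When several blade servers are logged in, it can be convenient to extract only the port WWNs from a saved copy of the display npv login output, for example when checking zone membership on the FC switch. The following is a minimal sketch that assumes the output has been saved as text on a Linux host. The function name npv_port_wwns is hypothetical, and the column positions are taken from the sample output above.

```shell
# Print "server-interface port-WWN external-interface" for each login entry.
# Data rows are recognized by an FCID (0x...) in column 3, so header lines
# are skipped. Column positions follow the sample output in this document.
npv_port_wwns() {
  awk '$3 ~ /^0x[0-9a-fA-F]+$/ { print $1, $5, $6 }'
}

# Header and data row copied from the output above.
printf '%s\n' \
  'Interface VSAN FCID Node WWN Port WWN Interface' \
  'Vfc1 1 0x021101 20:00:f4:e9:D4:53:f1:c5 21:00:f4:e9:D4:53:f1:c5 Fc1/1/1' |
  npv_port_wwns
```

For the sample row, the function prints Vfc1, the port WWN 21:00:f4:e9:D4:53:f1:c5, and Fc1/1/1 on one line.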

Configuring the Brocade switch

In this example, the Brocade switch uses the default settings. Ensure that Port 0 and Port 1 reside in the same FC zone.

Configuring 3Par storage

See "Configuring 3Par storage" for the process of configuring the 3Par storage server.

Configuring the OS

NOTE:

· The following information provides the procedure for configuring multipath under Red Hat 7.5. Multipath provides load balancing and reliability for the traffic between hosts and storage devices. For the procedure for configuring multipath and verifying the configuration under Windows, see "Configuring multipath and adding network disks on Windows Server."

· Before configuration, ensure that the multipath tool is installed on the OS. Most Linux systems include this tool.

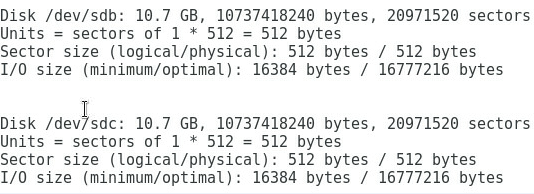

Preparation

Execute the fdisk -l command under the OS to view the two virtual volumes identified by the host, as shown in Figure 28.

Figure 28 Identifying the virtual volumes

NOTE: If no disk is found, execute the echo 1 > /sys/class/fc_host/hostnum/issue_lip command to manually refresh fc_host, where hostnum indicates the host number under the /sys/class/fc_host directory, such as host1.
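If the server has more than one FC host, the refresh described in the note can be repeated for each host directory. The following is a minimal sketch of such a loop. The function name rescan_fc_hosts and its optional directory argument are assumptions added for illustration; the directory defaults to /sys/class/fc_host.

```shell
# Issue a LIP on every FC host under the given directory
# (default: /sys/class/fc_host). Requires root on a real system.
rescan_fc_hosts() (
  base="${1:-/sys/class/fc_host}"
  for f in "$base"/host*/issue_lip; do
    [ -e "$f" ] || continue   # no FC hosts: the glob stays unexpanded
    echo 1 > "$f"
    echo "issued LIP on $(basename "$(dirname "$f")")"
  done
)
```

Call rescan_fc_hosts without arguments as the root user, and then execute the fdisk -l command again to check whether the virtual volumes are identified.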

Configuring multipath

1. Execute the following commands in turn to load the multipath kernel module, load the round-robin path selector module, start the multipath service, and create the multipath devices.

# modprobe dm-multipath

# modprobe dm-round-robin

# service multipathd start

# multipath -v2

NOTE: If the system reports that the multipath.conf file does not exist under the Red Hat operating system, copy the file from another location and execute the command again, as shown in Figure 29.

Figure 29 Handling the case when the multipath.conf file does not exist

2. Execute the following command to restart the multipath service.

# systemctl restart multipathd.service

Verifying the configuration

After configuring multipath, execute the fdisk -l command again. A new mpatha volume appears, which indicates that the system can access the virtual volumes through multiple paths, as shown in Figure 30.

Figure 30 Viewing the mpatha volume
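The same check can be scripted. The following is a minimal sketch that tests whether the multipath device node exists. It assumes that user-friendly names are enabled in multipath.conf, so that the device appears as /dev/mapper/mpatha. The function name has_multipath_device and its arguments are hypothetical.

```shell
# Succeed if a multipath device node with the given name exists.
# Arguments: device name (default: mpatha), directory (default: /dev/mapper).
# The default location assumes user-friendly names are enabled.
has_multipath_device() (
  name="${1:-mpatha}"
  dir="${2:-/dev/mapper}"
  [ -e "$dir/$name" ]
)
```

For example, execute has_multipath_device && echo "mpatha is present" under the OS. To list the paths that belong to each multipath device, execute the multipath -ll command.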

Example: Configuring FCoE (uplinking the aggregation interface of interconnect modules to FC switches)

Network requirement

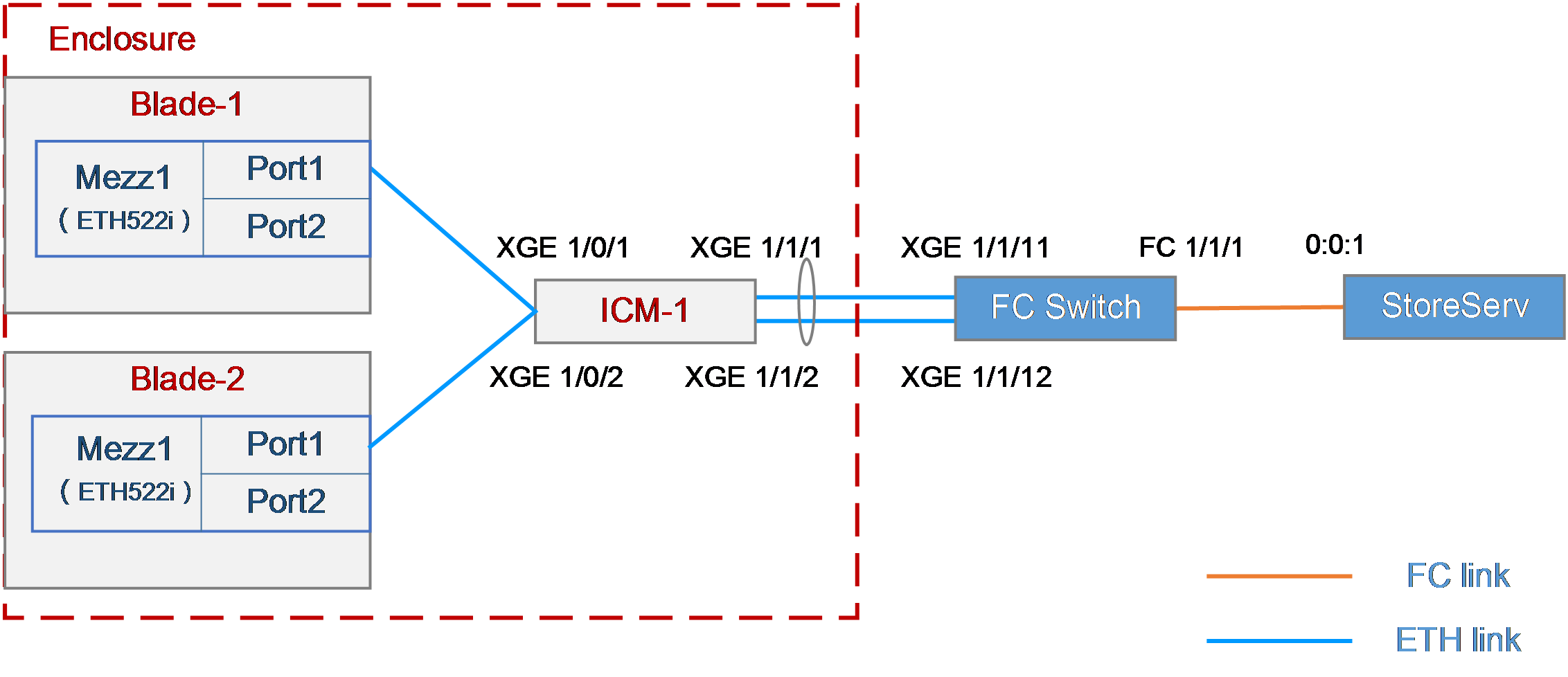

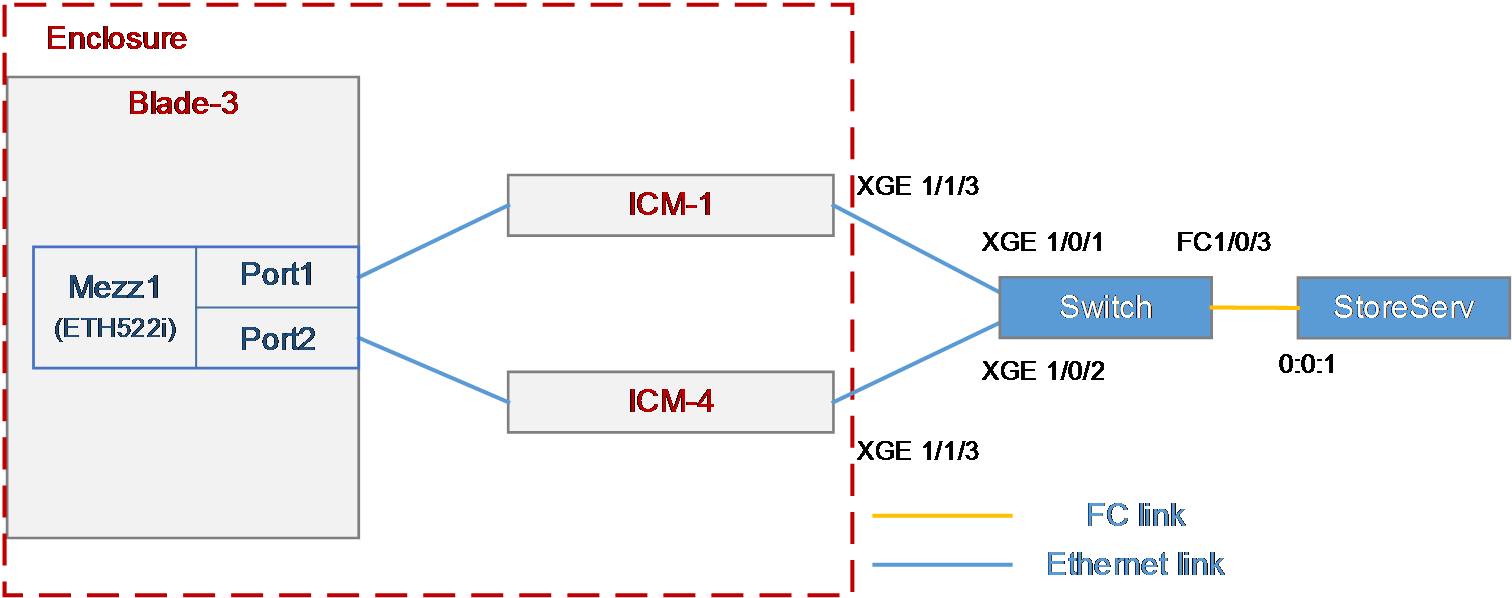

As shown in Figure 31, the blade servers and aggregation interconnect module are installed in the H3C B16000 blade server chassis. The blade servers are installed in slot 1 and slot 2, the aggregation interconnect module is installed in slot 1, and the mezzanine cards are installed in mezzanine card slot 1 of the blade servers. The XGE 1/1/1 and XGE 1/1/2 ports of the interconnect module are connected to the XGE 1/1/11 and XGE 1/1/12 ports of the FC switch. The FC 1/1/1 port of the FC switch is connected to the 0:0:1 port of the FC storage device.

In this example, the following devices and modules are used: blade server (H3C UniServer B5700 G3), mezzanine card (NIC-ETH522i-Mb-2*10G, "ETH522i"), aggregation interconnect module (BX720EF), FC switch (H3C S6800), and FC storage device (H3C CF8840 from the HPE 3Par StoreServ family).

The following requirements are expected to be met:

· Mount storage volumes on the 3Par storage to the blade servers to implement lossless remote storage.

· Break through the single-port traffic bottleneck of aggregation interconnect modules, so that the FCoE links of multiple blade servers share the same uplink aggregation interface.

Figure 31 Network diagram uplinking the aggregation interface of interconnect modules to FC switches

Analysis

· To mount 3Par storage volumes on the blade server, you need to build an FC link between the blade server and the 3Par storage to enable the transmission of FCoE traffic, which requires the following settings:

¡ Configure FCoE-related settings on the blade server and mezzanine card.

¡ Configure the FCoE function on the aggregation interconnect module to create the VFC port for transmitting the FC traffic.

¡ Configure the FC function on the FC switch to ensure FC link connectivity.

¡ Create a storage volume on the 3Par so that the blade server's OS can automatically recognize the storage volume.

· To break through the single-port traffic bottleneck of aggregation interconnect modules, you need to aggregate multiple physical ports of aggregation interconnect modules to form an aggregation interface, so that FCoE links of multiple blade servers can share the same aggregation interface to realize bandwidth aggregation and load sharing.

· To transmit storage data traffic of a SAN network over Ethernet links without losses, you need to create VFC ports and bind them to Ethernet ports. As a best practice, configure DCBX, PFC in auto-negotiation mode, and ETS on the internal ports of the aggregation interconnect modules.

Software versions used

This example was created and verified on versions SWITCH_SYS-1.00.11 and OM-1.00.11 of interconnect modules.

Configuration precautions

· Before adding a storage volume, ensure that the blade server has installed the OS and the appropriate network adapter driver, so that the OS can identify the ETH522i network adapter correctly. For details about installing the OS, see H3C Servers Operating System Installation Guide. For details about installing the network adapter driver, see ETH522i Mezzanine Card Module User Guide.

· After mounting the storage volumes and replacing existing storage volumes on the 3Par with new ones, reboot the OS of the blade server to identify the new volumes.

· As a best practice, disable the STP function on the internal ports of the interconnect modules. Otherwise, rebooting, installing, or removing a blade server can trigger STP recalculation on the interconnect modules and briefly disconnect their external ports.

· Some packets are transmitted through VLAN 1 during the negotiation of the FCoE protocol. To ensure correct protocol negotiation, do not modify the default settings of VLAN 1, so that VLAN 1 traffic can be forwarded correctly between the interconnect modules and blade servers.

· If you configure both FC ports and VFC ports on your devices, execute the qos trust dot1p command to enable normal PFC of the VFC ports.

Configuration procedure

Querying port information

Query the correspondence among mezzanine cards, internal ports of interconnect modules, and network adapters under the OS according to "Querying port relations."

It can be learned that:

· In this example, Port 1 of mezzanine card 1 on blade server 1 and Port 1 of mezzanine card 1 on blade server 2 are used and are named ens5f0 and ens5f1 under the OS, respectively.

· In this example, Ten-GigabitEthernet 1/0/1, Ten-GigabitEthernet 1/0/2, Ten-GigabitEthernet 1/1/1, and Ten-GigabitEthernet 1/1/2 ports of interconnect module 1 are used.

· In this example, Ten-GigabitEthernet 1/1/11, Ten-GigabitEthernet 1/1/12, and FC 1/1/1 ports of the H3C S6800 switch are used.

Configuring the mezzanine card

NOTE:

· In this example, Red Hat 7.5 is installed on the blade server and used as an example in the following steps.

· When Windows Server is installed on a blade server, there is no need to configure the mezzanine card under the system.

1. Configure the FCoE function of ens5f0 and ens5f1 ports:

a. Under the /etc/fcoe directory, execute the cp cfg-ethX cfg-ethM command to copy the file and create the FCoE port configuration file, where cfg-ethM is named after the port used for the FCoE connection. In this example, the files are cfg-ens5f0 and cfg-ens5f1. The following steps use cfg-ens5f0 as an example.

[root@localhost]# cd /etc/fcoe/

[root@localhost fcoe]# ls

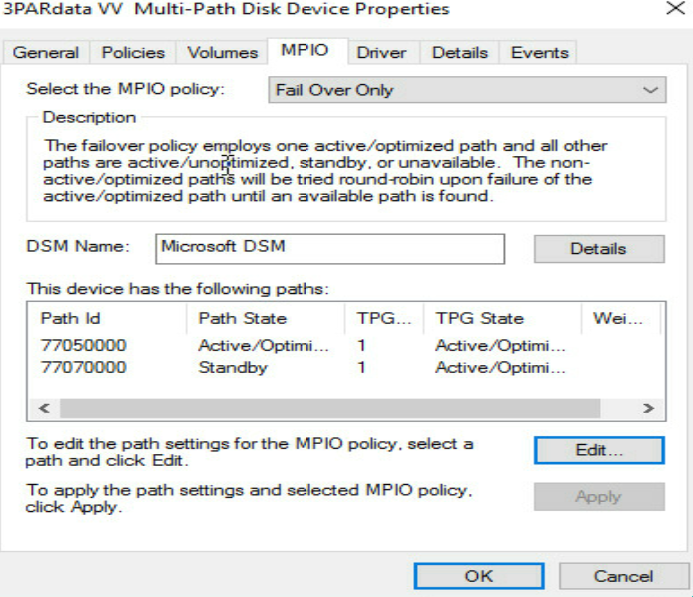

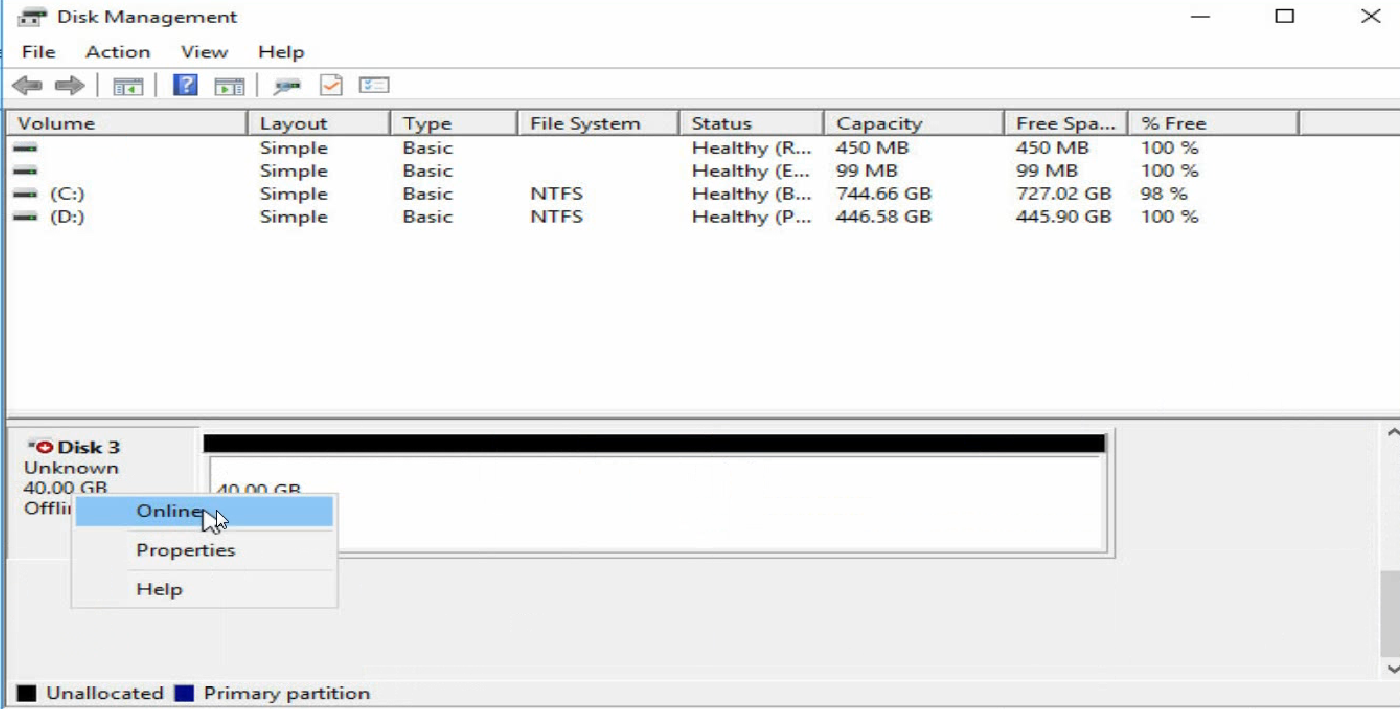

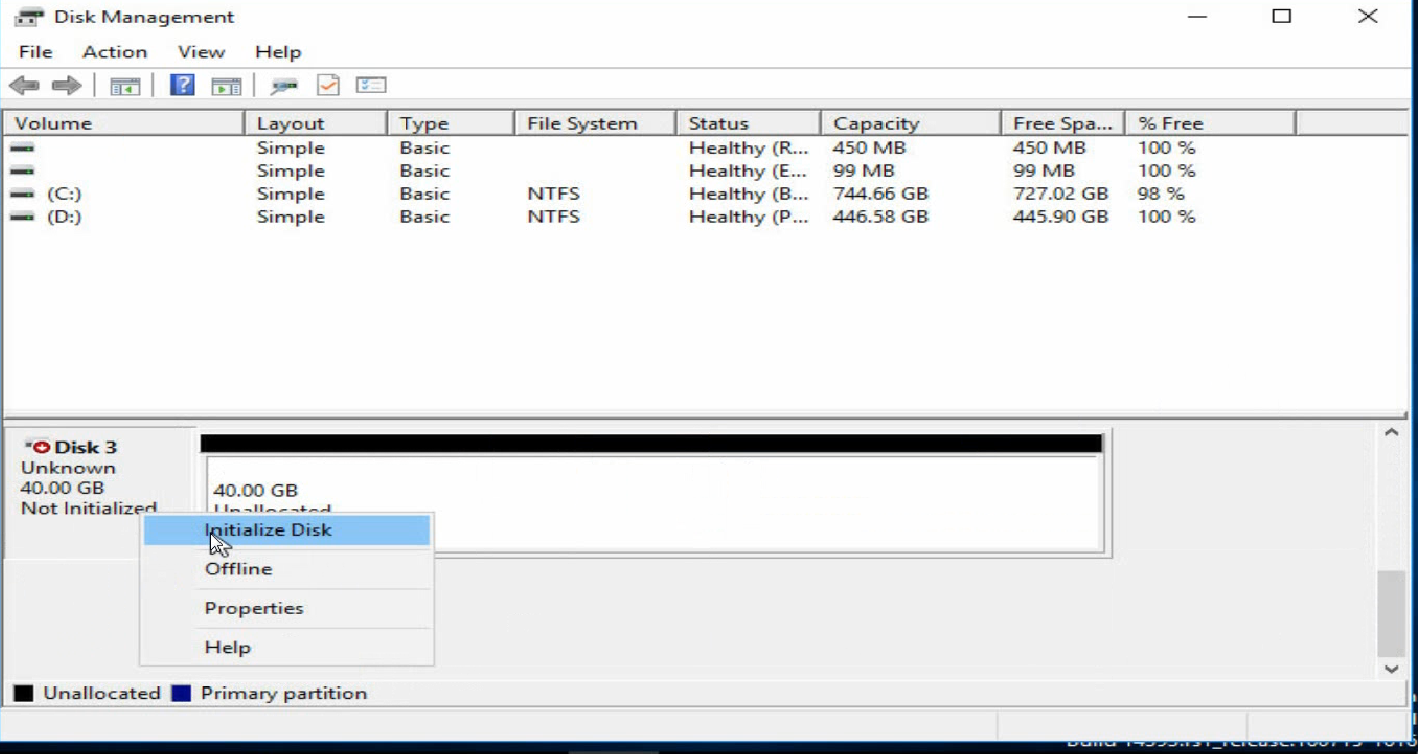

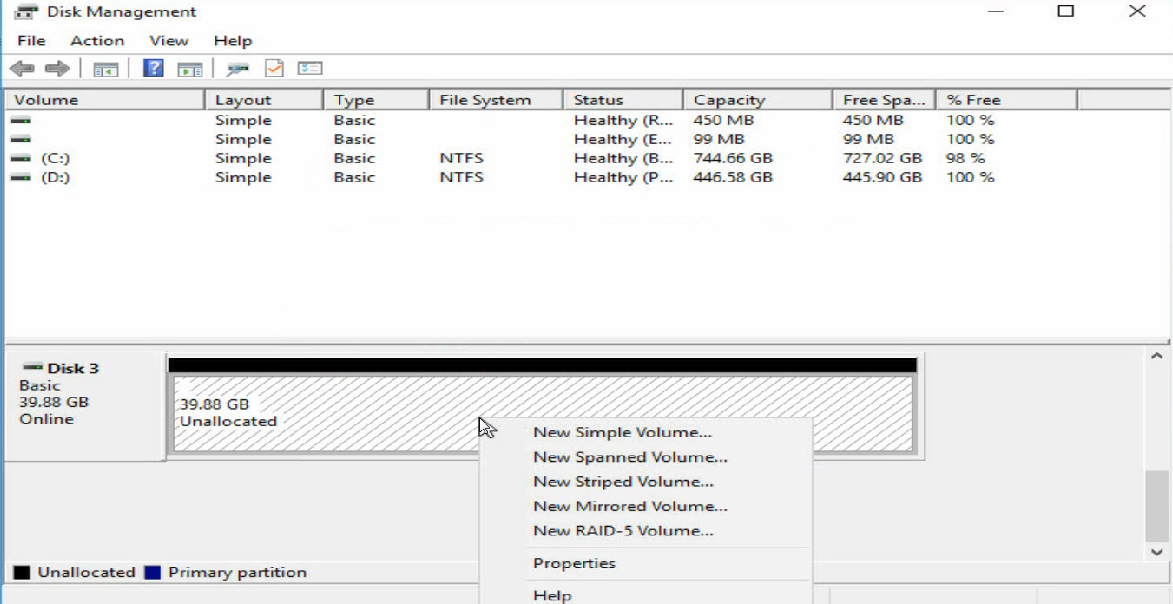

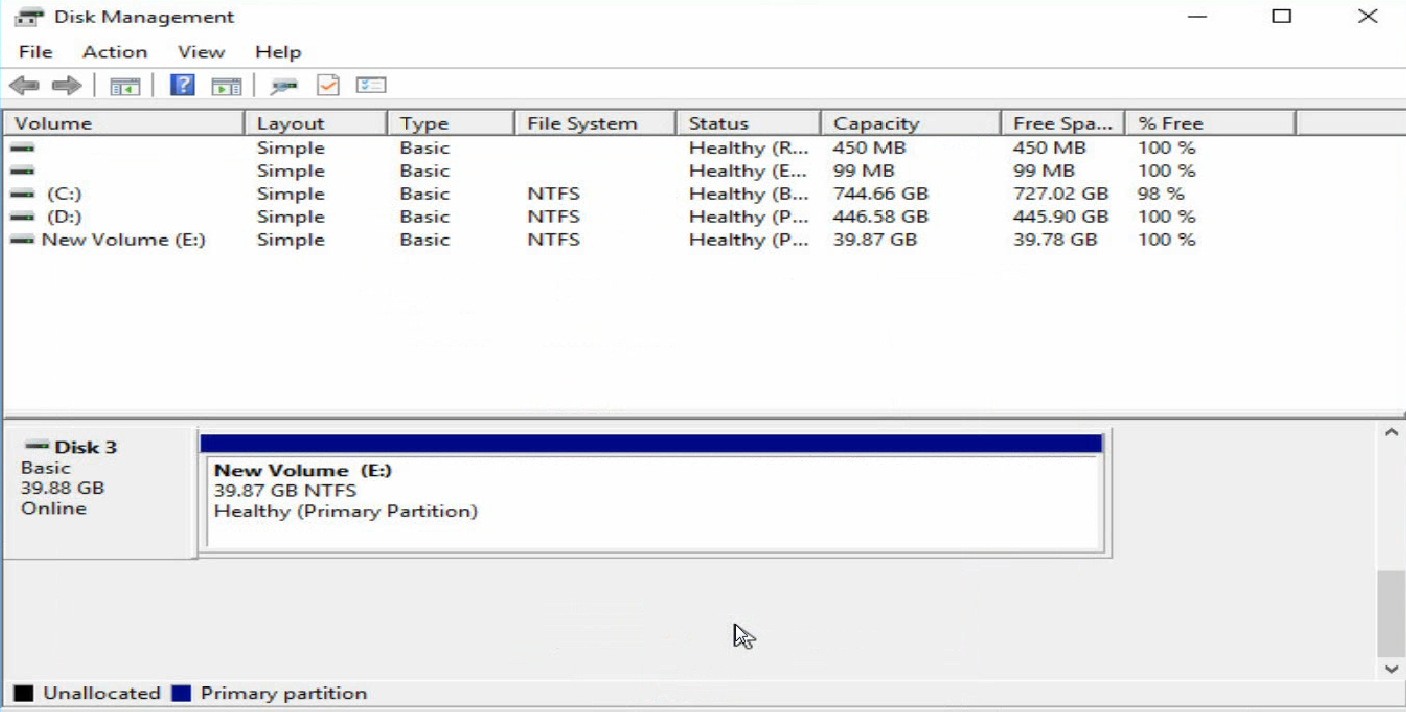

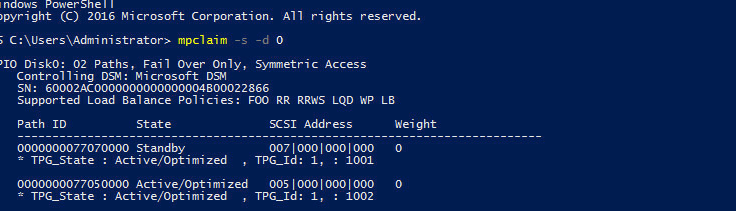

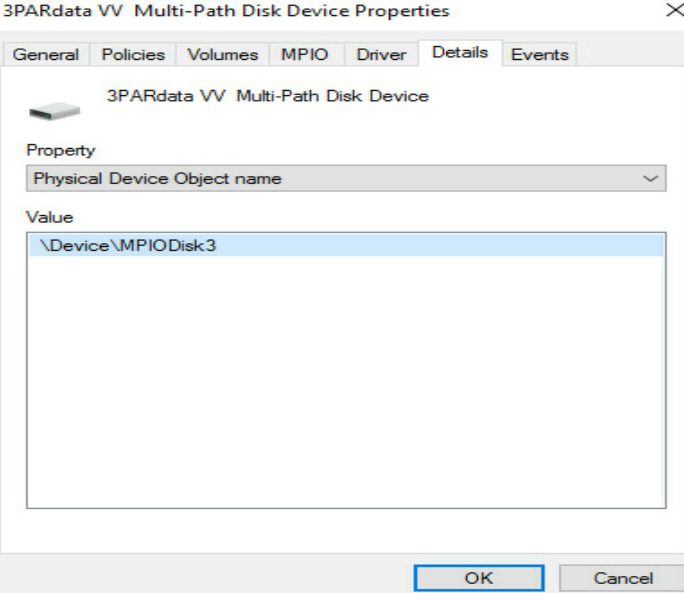

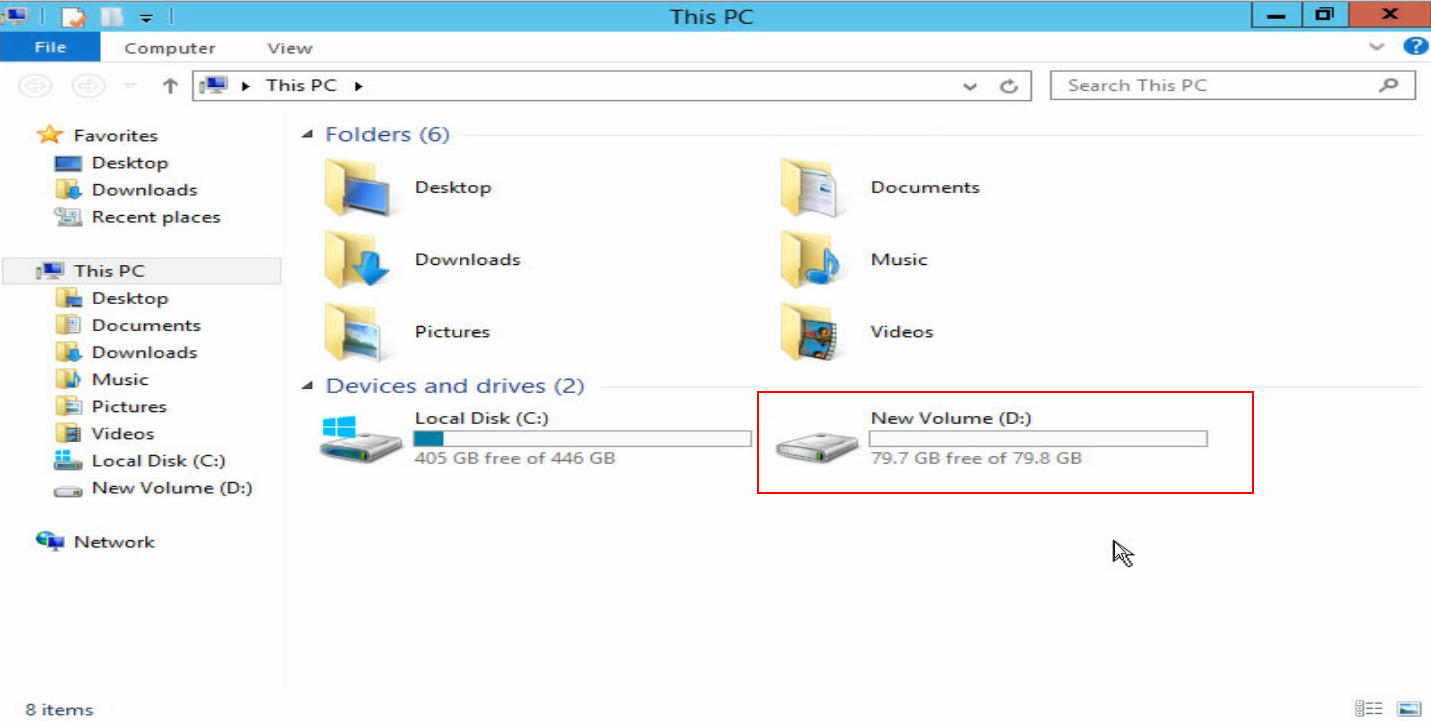

cfg-ethx