H3C S9500 Series Routing Switches Operation Manual-(V1.01)

06-Multicast Protocol Operation

Table of Contents

Chapter 1 IP Multicast Overview

1.1.1 Problems with Unicast/Broadcast

1.1.3 Application of Multicast

1.2 Implementation of IP Multicast

1.3 RPF Mechanism for IP Multicast Packets

Chapter 2 IGMP Snooping Configuration

2.2 IGMP Snooping Configuration

2.2.1 Enabling/Disabling IGMP Snooping

2.2.2 Configuring Router Port Aging Time

2.2.3 Configuring Maximum Response Time

2.2.4 Configuring Aging Time of Multicast Group Member Ports

2.2.5 Configuring Unknown Multicast Packets not Broadcasted within a VLAN

2.2.6 Configuring the Filtering Rule of Multicast Groups

2.2.7 Enabling/Disabling IGMP Snooping Fast Leave

2.3 Multicast Static Routing Port Configuration

2.3.3 Configuring a Multicast Static Routing Port

2.4 Displaying and Maintaining IGMP Snooping

2.5 IGMP Snooping Configuration Example

2.6 Troubleshoot IGMP Snooping

Chapter 3 Multicast VLAN Configuration

3.2 Multicast VLAN Configuration

3.3 Multicast VLAN Configuration Example

Chapter 4 Common Multicast Configuration

4.1 Introduction to Common Multicast Configuration

4.2 Common Multicast Configuration

4.2.1 Enabling Multicast Routing

4.2.2 Configuring Multicast Routing Table Size Limit

4.2.3 Clearing MFC Forwarding Entries or Its Statistic Information

4.2.4 Clearing Route Entries from the Kernel Multicast Routing Table

4.3 Managed multicast Configuration

4.3.1 Managed multicast Overview

4.3.2 Configuring Managed Multicast

4.3.3 Managed Multicast Configuration Example

4.4 Configuring Broadcast/Multicast Suppression

4.5 Displaying and Debugging Common Multicast Configuration

5.1.2 Introduction to IGMP Proxy

5.2.2 Enabling IGMP on an Interface

5.2.3 Configuring the IGMP Version

5.2.4 Configuring the Interval to Send IGMP Query Message

5.2.5 Configuring the Interval and the Number of Querying IGMP Packets

5.2.6 Configuring the Present Time of IGMP Querier

5.2.7 Configuring Maximum Response Time for IGMP Query Message

5.2.8 Configuring the limit of IGMP groups on an interface

5.2.9 Configuring a Router to Join Specified Multicast Group

5.2.10 Deleting IGMP Groups Joined on an Interface

5.2.11 Configuring the Filtering Rule of Multicast Groups

5.2.12 Enabling/Disabling IGMP Fast Leaving

5.3.3 IGMP Configuration Example

5.4 Displaying and Debugging IGMP

Chapter 6 PIM-DM Configuration

6.1.2 PIM-DM Working Principle

6.2.3 Configuring the Time Intervals for Ports to Send Hello Packets

6.2.5 Configuring the Filtering of Multicast Source/Group

6.2.6 Configuring the Filtering of PIM Neighbor

6.2.7 Configuring the Maximum Number of PIM Neighbor on an Interface

6.2.8 Clearing PIM Routing Table Entries

6.3 Displaying and Debugging PIM-DM

6.4 PIM-DM Configuration Example

Chapter 7 PIM-SM Configuration

7.1.2 PIM-SM Working Principle

7.1.3 Preparations before Configuring PIM-SM

7.2.4 Configuring the Time Intervals for Ports to Send Hello Packets

7.2.5 Configuring Candidate-BSRs

7.2.6 Configuring Candidate-RPs

7.2.8 Configuring the PIM-SM Domain Border

7.2.9 Configuring the filtering of multicast source/group

7.2.10 Configuring the filtering of PIM neighbor

7.2.11 Configuring RP to Filter the Register Messages Sent by DR

7.2.12 Limiting the range of legal BSR

7.2.13 Limiting the range of legal C-RP

7.2.14 Clearing multicast route entries from PIM routing table

7.3 Displaying and Debugging PIM-SM

7.4 PIM-SM Configuration Example

8.2.3 Configuring Static RPF Peers

8.2.4 Configuring Originating RP

8.2.5 Configuring SA Caching State

8.2.6 Configuring the Maximum Number of SA caching

8.2.7 Requesting Source Information of MSDP Peers

8.2.8 Controlling the Source Information Created

8.2.9 Controlling the Source Information Forwarded

8.2.10 Controlling the Received Source Information

8.2.11 Configuring MSDP Mesh Group

8.2.12 Configuring the MSDP Connection Retry Period

8.2.13 Shutting MSDP Peers Down

8.2.14 Clearing MSDP Connections, Statistics and SA Caching Configuration

8.3 Displaying and Debugging MSDP

8.4 MSDP Configuration Examples

8.4.1 Configuring Static RPF Peers

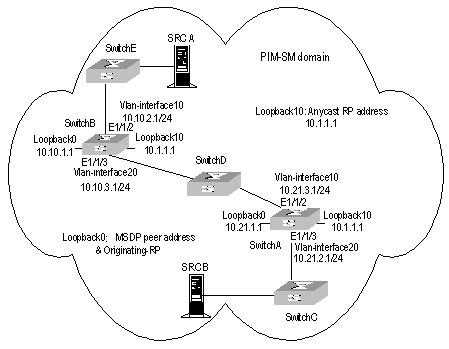

8.4.3 MSDP Integrated Networking

Chapter 9 MBGP Multicast Extension Configuration

9.1 MBGP Multicast Extension Overview

9.1.2 MBGP Extension Attributes for Multicast

9.1.3 MBGP Operating Mode and Message Type

9.2 MBGP Multicast Extension Configuration

9.2.1 Enabling MBGP Multicast Extension Protocol

9.2.2 Specifying Network Routes Notified by MBGP Multicast Extension

9.2.3 Configuring the MED Value for an AS

9.2.4 Comparing MED Values from Different AS Neighbor Paths

9.2.5 Configuring Local Preference

9.2.7 Configuring MBGP Peer (Group)

9.2.8 Configuring MBGP Route Aggregation

9.2.9 Configuring an MBGP Route Reflector

9.2.10 Configure MBGP Community Attributes

9.2.11 Importing IGP Routing Information into MBGP

9.2.12 Defining AS Path List and Routing Policy

9.2.13 Configuring MBGP Route Filtering

9.2.14 Resetting BGP Connections

9.3 Displaying and Debugging MBGP Configuration

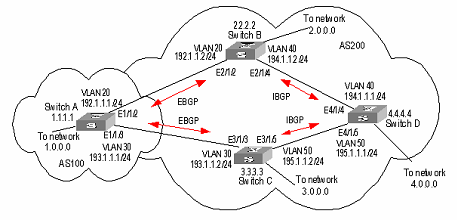

9.4 MBGP Multicast Extension Configuration Example

Chapter 1 IP Multicast Overview

An Ethernet switch functions as a router when it runs an IP multicast protocol. In the following text, "router" refers to a router in the general sense or to a Layer 3 Ethernet switch running an IP multicast protocol.

1.1 IP Multicast Overview

1.1.1 Problems with Unicast/Broadcast

The constant development of the Internet and the growing exchange of data, voice and video information over the network have promoted the emergence of new services such as e-commerce, network conferencing, online auctions, video on demand (VoD), and tele-education. These services require better information security and greater rewards for the service provider.

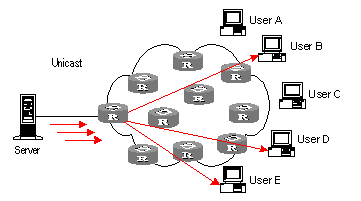

I. Data transmission in unicast mode

In unicast mode, every user that needs the information receives a copy through the channels the system separately establishes for them. See Figure 1-1.

Figure 1-1 Data transmission in unicast mode

Suppose that Users B, D, and E need the information. The information source, Server, establishes a separate transmission channel with each of them. Because the traffic grows with the number of users, a large number of users needing the same information causes many identical copies to spread over the network. Bandwidth then runs short, so the unicast mode is not suited to large-scale transmission.

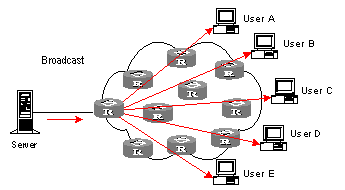

II. Data transmission in broadcast mode

In broadcast mode, every user on the network receives the information regardless of their needs. See Figure 1-2 Data transmission in broadcast mode.

Figure 1-2 Data transmission in broadcast mode

Suppose that Users B, D, and E need the information. The information source, Server, broadcasts the information through the router, so User A and User C also receive it. In that case, neither information security nor rewards for the service are guaranteed. Moreover, bandwidth is wasted when only a small fraction of users need the information.

In short, the unicast mode is useful in networks with scattered users, and the broadcast mode is suitable for networks with dense users. When the number of users is uncertain, adopting either the unicast or the broadcast mode results in low efficiency.

1.1.2 Advantages of Multicast

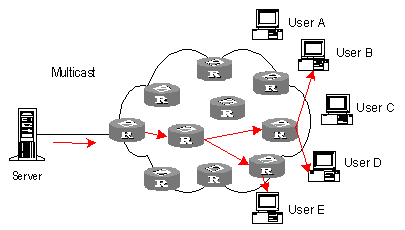

I. Multicast

IP multicast technology solves those problems. When some users in the network need specific information, the multicast source sends the information only once. Along the tree route established by the multicast routing protocol, the information is duplicated and distributed only at bifurcation points that are as far from the source as possible. See Figure 1-3 Data transmission in multicast mode.

Figure 1-3 Data transmission in multicast mode

Suppose that Users B, D, and E need the information. They are organized into a receiver group so that the information can reach them. The routers on the network duplicate and forward the information according to the distribution of the users in the group. Finally, the information is delivered correctly to the intended receivers B, D, and E.

In multicast mode, the information sender is called the "multicast source", the set of receivers is called the "multicast group", and the routers that transmit multicast information are called "multicast routers". Members of a multicast group can be scattered around the network, so the multicast group has no geographical limitation. Note that a multicast source does not necessarily belong to a multicast group: it sends data to multicast groups but is not necessarily a receiver. Multiple sources can send packets to a multicast group simultaneously.

II. Advantages

The main advantages of multicast are:

- Enhanced efficiency: It reduces network traffic and relieves the load on servers and CPUs.

- Optimized performance: It eliminates traffic redundancy.

- Distributed application: It enables multipoint applications.

1.1.3 Application of Multicast

IP multicast technology implements point-to-multipoint forwarding at high speed, saving network bandwidth and relieving network load. It also facilitates the development of new value-added Internet information services, including live online shows, Web TV, tele-education, telemedicine, network radio stations and real-time audio/video conferencing. It plays a positive role in:

- Multimedia and streaming media applications

- Occasional communication for training and cooperation

- Data storage and finance (stock) operations

- Point-to-multipoint data distribution

With the increasing popularity of multimedia services over IP networks, multicast is gaining market share and multicast services are gradually becoming prevalent.

1.2 Implementation of IP Multicast

1.2.1 IP Multicast Addresses

In multicast mode, questions arise about where to send the information and how to locate the destination or identify the receivers. All these questions come down to multicast addressing. To guarantee communication between a multicast source and a multicast group (that is, a group of receivers), a network layer multicast address (namely the IP multicast address) is required, along with a technique to map it to a link layer multicast MAC address. These two kinds of addresses are introduced below.

I. IP Multicast Addresses

As defined by the Internet Assigned Numbers Authority (IANA), the IP addresses used for packet delivery fall into four classes: Class A, Class B, Class C and Class D. Unicast packets use Class A, Class B or Class C addresses, depending on the scale of the network. Multicast packets use Class D addresses as their destination addresses; a Class D address cannot appear in the source address field of an IP packet.

During unicast data transmission, a packet is transmitted hop by hop from the source address to the destination address. In an IP multicast environment, however, the destination of a packet is not a single host but a group of receivers identified by one group address. All the information receivers are added to this group. Once a receiver joins the group, the data sent to the group address starts flowing to this receiver, and all members of the group can receive the packets. This group is a multicast group.

Membership is dynamic: a host can join or leave a group at any time. A multicast group can be permanent or temporary. Some multicast group addresses are allocated by IANA; such groups are called permanent multicast groups. The IP address of a permanent multicast group does not change, but its membership can change and the number of members is arbitrary. A permanent group may even have no members at all. Addresses not reserved for permanent multicast groups can be used by temporary multicast groups. Class D multicast addresses range from 224.0.0.0 to 239.255.255.255. More information is listed in Table 1-1 Ranges and meanings of Class D addresses.

Table 1-1 Ranges and meanings of Class D addresses

Reserved multicast addresses that are commonly used are described in the following table.

Table 1-2 Reserved multicast address list

| Class D address range | Description |
|---|---|
| 224.0.0.0 | Base Address (Reserved) |
| 224.0.0.1 | Addresses of all hosts |
| 224.0.0.2 | Addresses of all multicast routers |
| 224.0.0.3 | Not for allocation |
| 224.0.0.4 | DVMRP routers |
| 224.0.0.5 | OSPF routers |
| 224.0.0.6 | OSPF DR |
| 224.0.0.7 | ST routers |
| 224.0.0.8 | ST hosts |
| 224.0.0.9 | RIP-2 routers |
| 224.0.0.10 | IGRP routers |
| 224.0.0.11 | Active agents |
| 224.0.0.12 | DHCP server/Relay agent |
| 224.0.0.13 | All PIM routers |
| 224.0.0.14 | RSVP encapsulation |
| 224.0.0.15 | All CBT routers |
| 224.0.0.16 | Specified SBM |
| 224.0.0.17 | All SBMs |
| 224.0.0.18 | VRRP |
| …… | …… |

II. Ethernet Multicast MAC Addresses

When a unicast IP packet is transmitted on the Ethernet, the destination MAC address is the MAC address of the receiver. However, for a multicast packet, the destination is no longer a specific receiver but a group with unspecific members. Therefore, the multicast MAC address should be used.

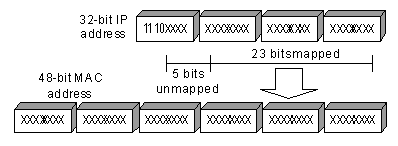

As stipulated by the Internet Assigned Numbers Authority (IANA), the high 24 bits of a multicast MAC address are 0x01005e, the 25th bit is fixed at 0, and the low 23 bits are the low 23 bits of the multicast IP address.

Figure 1-4 Mapping between a multicast IP address and an Ethernet MAC address

The first four bits of a multicast IP address are 1110, the multicast identifier. Of the remaining 28 bits, only 23 are mapped to the MAC address and the other five are lost. As a result, 32 IP addresses map to the same MAC address.
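The mapping can be illustrated with a short Python sketch. It is not part of the switch software; the function name `multicast_mac` and the dash-separated output format are assumptions chosen for illustration. It keeps the low 23 bits of the group address and places them under the fixed 01-00-5e prefix.

```python
import ipaddress


def multicast_mac(group: str) -> str:
    """Map an IPv4 multicast group address to its Ethernet multicast MAC address."""
    addr = ipaddress.IPv4Address(group)
    if not addr.is_multicast:
        raise ValueError(f"{group} is not a Class D (multicast) address")
    low23 = int(addr) & 0x7FFFFF      # keep only the low 23 bits of the IP address
    mac = 0x01005E000000 | low23      # fixed prefix 01-00-5e; the 25th bit stays 0
    # Format the 48-bit value as six dash-separated bytes (illustrative format).
    return "-".join(f"{(mac >> shift) & 0xFF:02x}" for shift in range(40, -8, -8))


print(multicast_mac("224.1.1.1"))    # 01-00-5e-01-01-01
print(multicast_mac("225.129.1.1"))  # 01-00-5e-01-01-01 (same MAC, different group)
```

The two sample groups differ only in the five bits that are dropped, so they share one MAC address. A switch that forwards by MAC multicast address cannot tell them apart.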

1.2.2 IP Multicast Protocols

IP multicast protocols mainly involve multicast group management protocols and multicast routing protocols. Their application positions are shown in Figure 1-5 Application positions of multicast-related protocols.

Figure 1-5 Application positions of multicast-related protocols

I. Multicast group management protocol

Multicast groups use the Internet Group Management Protocol (IGMP) as the management protocol. IGMP runs between hosts and multicast routers and defines the mechanism for establishing and maintaining group membership between them.

II. Multicast routing protocols

A multicast routing protocol runs between multicast routers to create and maintain multicast routes for correct and efficient forwarding of multicast packets. Multicast routing creates a loop-free data transmission path from one source to multiple receivers. The task of a multicast routing protocol is to build this path, which is called a distribution tree. A multicast router can use several methods to build a distribution tree.

As in unicast routing, multicast routing can be intra-domain or inter-domain. Intra-domain multicast routing is rather mature. Protocol Independent Multicast (PIM) is the most widely used intra-domain protocol and works in collaboration with unicast routing protocols. Inter-domain routing first needs to solve how to transfer routing information between ASs. Since the ASs may belong to different telecom carriers, inter-domain routing information must contain the carriers' policies in addition to distance information. Currently, inter-domain routing protocols include the Multicast Source Discovery Protocol (MSDP) and MBGP multicast extension.

1.3 RPF Mechanism for IP Multicast Packets

Chapter 2 IGMP Snooping Configuration

2.1 IGMP Snooping Overview

2.1.1 IGMP Snooping Principle

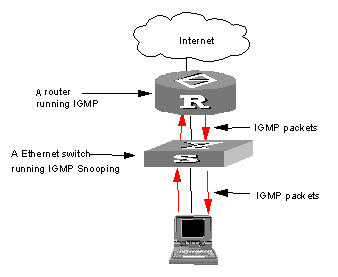

IGMP Snooping runs on the link layer. It is a multicast control mechanism on the Layer 2 Ethernet switch, used for multicast group management and control.

When the Layer 2 Ethernet switch receives the IGMP messages transmitted between a host and the router, it uses IGMP Snooping to analyze the information they carry. If the switch hears an IGMP host report message from an IGMP host, it adds the host to the corresponding multicast table. If the switch hears an IGMP leave message from an IGMP host, it removes the host from the corresponding multicast table. The switch continuously listens to IGMP messages to create and maintain the MAC multicast address table on Layer 2. It then forwards the multicast packets transmitted from the upstream router according to this table.

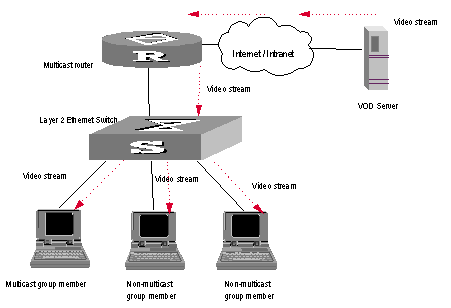

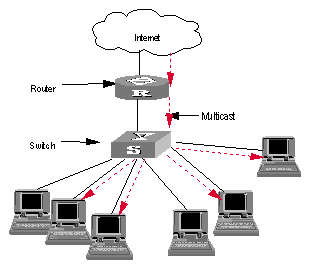

When IGMP Snooping is disabled, multicast packets are broadcast on Layer 2. See the following figure:

Figure 2-1 Multicast packet transmission without IGMP Snooping

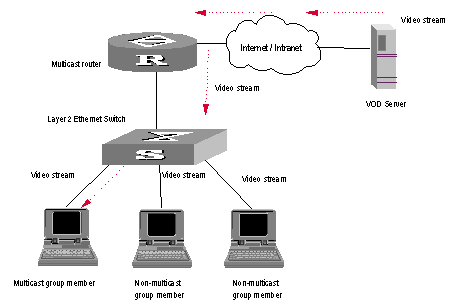

When IGMP Snooping runs, the packets are multicast rather than broadcast on Layer 2. See the following figure:

Figure 2-2 Multicast packet transmission when IGMP Snooping runs

2.1.2 Implement IGMP Snooping

I. Related concepts of IGMP Snooping

To facilitate the description, this section first introduces some concepts related to IGMP Snooping.

- Router port: The switch port directly connected to the multicast router.

- Multicast member port: The Ethernet switch port connected to a multicast member. A multicast member is a host that has joined a multicast group.

- MAC multicast group: A multicast group identified by a MAC multicast address and maintained by the Ethernet switch.

- Router port aging time: The time set on the router port aging timer. If the switch has not received any IGMP general query message when the timer times out, it no longer considers the port a router port.

- Multicast group member port aging time: When a port joins an IP multicast group, the aging timer of the port starts. The multicast group member port aging time is the time set on this timer. If the switch has not received any IGMP report message when the timer times out, it transmits an IGMP specific query message to the port.

- Maximum response time: When the switch transmits an IGMP specific query message to a multicast member port, it starts a response timer that runs while the switch waits for the response to the query. If the switch has not received any IGMP report message before the timer times out, it removes the port from the multicast member ports.

II. Implement Layer 2 multicast with IGMP Snooping

The Ethernet switch runs IGMP Snooping to listen to the IGMP messages and map the host and its ports to the corresponding multicast group address. To implement IGMP Snooping, the Layer 2 Ethernet switch processes different IGMP messages in the way illustrated in the figure below:

Figure 2-3 Implement IGMP Snooping

- IGMP general query message: Transmitted by the multicast router to the multicast group members to query which multicast groups have members. When an IGMP general query message arrives at a router port, the Ethernet switch resets the aging timer of the port. When a port other than a router port receives the IGMP general query message, the Ethernet switch starts the aging timer for the port.

- IGMP specific query message: Transmitted from the multicast router to the multicast members to query whether a specific group has any member. When it receives an IGMP specific query message, the switch transmits the message only to the IP multicast group that is queried.

- IGMP report message: Transmitted from a host to the multicast router to apply for joining a multicast group or to respond to an IGMP query message. When it receives an IGMP report message, the switch checks whether the MAC multicast group corresponding to the IP multicast group that the host wants to join exists.

If the corresponding MAC multicast group does not exist, the switch notifies the router that a member is ready to join a multicast group, creates a new MAC multicast group, adds the port that received the message to the group, starts the port aging timer, and adds all the router ports in the native VLAN of the port to the MAC multicast forwarding table. Meanwhile, it creates an IP multicast group and adds the port that received the report message to it.

If the corresponding MAC multicast group exists but does not contain the port that received the report message, the switch adds the port to the multicast group and starts the port aging timer. The switch then checks whether the corresponding IP multicast group exists.

If it does not exist, the switch creates a new IP multicast group and adds the port that received the report message to it. If it exists, the switch adds the port to it.

If the MAC multicast group corresponding to the message exists and contains the port that received the message, the switch only resets the aging timer of the port.

- IGMP leave message: Transmitted from a multicast group member to the multicast router to notify it that a host has left the multicast group. When it receives a leave message for an IP multicast group, the Ethernet switch transmits a specific query message for that group to the port that received the leave message, to check whether the port still has other members of this group, and starts a maximum response timer. If the switch has not received any report message for the multicast group when the timer expires, it removes the port from the corresponding MAC multicast group. If the MAC multicast group has no members left, the switch notifies the multicast router to remove the branch from the multicast tree.
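The message handling described above can be sketched as a small Python model. This is a simplified illustration, not the switch implementation: it covers a single VLAN, merges the MAC and IP multicast group tables into one, omits the notifications sent to the router, and uses logical time in seconds. The class name `SnoopingSwitch`, its method names, and the port names are hypothetical; the timer defaults follow the values given in section 2.2.

```python
class SnoopingSwitch:
    """Toy model of IGMP Snooping tables for one VLAN (illustrative only)."""

    def __init__(self, router_aging=105, host_aging=260, max_response=1):
        self.router_aging = router_aging   # router port aging time
        self.host_aging = host_aging       # member port aging time
        self.max_response = max_response   # maximum response time
        self.router_ports = {}             # port -> time the router port ages out
        self.members = {}                  # group -> {port: time the member port ages out}
        self.pending = {}                  # (group, port) -> response timer deadline

    def general_query(self, port, now):
        # A general query starts or resets the aging timer of the receiving port.
        self.router_ports[port] = now + self.router_aging

    def report(self, port, group, now):
        # A report creates the group if needed, adds the port, and resets its timer.
        self.members.setdefault(group, {})[port] = now + self.host_aging
        self.pending.pop((group, port), None)   # any outstanding query is answered

    def leave(self, port, group, now):
        # A leave triggers a specific query and starts the maximum response timer.
        if port in self.members.get(group, {}):
            self.pending[(group, port)] = now + self.max_response

    def tick(self, now):
        # Router ports that heard no general query in time stop being router ports.
        for port in [p for p, t in self.router_ports.items() if t <= now]:
            del self.router_ports[port]
        # Member ports whose aging timer expired are sent a specific query.
        for group, ports in self.members.items():
            for port, expiry in ports.items():
                if expiry <= now and (group, port) not in self.pending:
                    self.pending[(group, port)] = now + self.max_response
        # Ports that did not answer within the maximum response time are removed.
        for (group, port), deadline in list(self.pending.items()):
            if deadline <= now:
                del self.pending[(group, port)]
                ports = self.members.get(group, {})
                ports.pop(port, None)
                if not ports:
                    self.members.pop(group, None)

    def egress_ports(self, group):
        # Group traffic goes to member ports and to router ports.
        return set(self.members.get(group, {})) | set(self.router_ports)


sw = SnoopingSwitch()
sw.general_query("GE1/1/1", now=0)
sw.report("GE1/1/2", "224.1.1.1", now=0)
sw.report("GE1/1/3", "224.1.1.1", now=0)
sw.leave("GE1/1/3", "224.1.1.1", now=10)
sw.tick(now=11)
print(sorted(sw.egress_ports("224.1.1.1")))
```

In this run, the port that sent the leave message does not answer the specific query, so it is removed once the response timer expires, while the other member port and the router port stay in the forwarding set.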

2.2 IGMP Snooping Configuration

The main IGMP Snooping configuration includes:

- Enabling/Disabling IGMP Snooping

- Configuring Router Port Aging Time

- Configuring Maximum Response Time

- Configuring Aging Time of Multicast Group Member Ports

- Configuring Unknown Multicast Packets not Broadcasted within a VLAN

- Configuring the Filtering Rule of Multicast Groups

- Enabling/Disabling IGMP Snooping Fast Leave

2.2.1 Enabling/Disabling IGMP Snooping

You can use the following commands to enable or disable IGMP Snooping, which controls whether the MAC multicast forwarding table is created and maintained on Layer 2.

Perform the following configuration in system view and VLAN view.

Table 2-1 Enabling/Disabling IGMP Snooping

| Operation | Command |
|---|---|
| Enable/disable IGMP Snooping | igmp-snooping { enable \| disable } |

By default, IGMP Snooping is disabled.

Caution:

- First enable IGMP Snooping globally in system view, and then enable IGMP Snooping in VLAN view. Otherwise, IGMP Snooping does not take effect.

- Although Layer 2 and Layer 3 multicast protocols can both be configured, they cannot run on the same VLAN and its corresponding VLAN interface at the same time. For example, if PIM or IGMP is enabled on a VLAN, IGMP Snooping cannot operate on this VLAN.

- If VLAN VPN is enabled on a port, IGMP Snooping cannot be enabled on the VLAN of the port, and IGMP cannot be enabled on the corresponding VLAN interface.

- If IGMP Snooping is enabled on a VLAN, or IGMP is enabled on the VLAN interface, you cannot add a member port on which VLAN VPN is enabled to the VLAN.

- Isolate-user-VLAN supports IGMP Snooping. After IGMP Snooping is enabled in an isolate-user-VLAN, it is enabled in all its secondary VLANs, so there is no need to enable IGMP Snooping separately for a secondary VLAN.

- In a secondary VLAN, IGMP packets are directly converted and processed in the isolate-user-VLAN; that is, all multicast services are implemented within the isolate-user-VLAN.

- Ports in secondary VLANs cannot be used to connect multicast sources.

2.2.2 Configuring Router Port Aging Time

This task is to manually configure the router port aging time. If the switch has not received any general query message from the router before the router port is aged, it will remove the port from all MAC multicast groups.

Perform the following configuration in system view.

Table 2-2 Configuring router port aging time

| Operation | Command |
|---|---|
| Configure router port aging time | igmp-snooping router-aging-time seconds |
| Restore the default aging time of the router port | undo igmp-snooping router-aging-time |

By default, the router port aging time is 105 seconds.

2.2.3 Configuring Maximum Response Time

This task is to manually configure the maximum response time. If the Ethernet switch receives no report message from a port within the maximum response time, it will remove the port from the multicast group.

Perform the following configuration in system view.

Table 2-3 Configuring the maximum response time

| Operation | Command |
|---|---|
| Configure the maximum response time | igmp-snooping max-response-time seconds |
| Restore the default setting | undo igmp-snooping max-response-time |

By default, the maximum response time is 1 second.

2.2.4 Configuring Aging Time of Multicast Group Member Ports

This task is to manually set the aging time of multicast group member ports. If the Ethernet switch receives no multicast group report message during the member port aging time, it transmits a specific query message to that port and starts a maximum response timer.

Perform the following configuration in system view.

Table 2-4 Configuring aging time of the multicast member ports

| Operation | Command |
|---|---|
| Configure aging time of the multicast member | igmp-snooping host-aging-time seconds |
| Restore the default setting | undo igmp-snooping host-aging-time |

By default, the aging time of the multicast member is 260 seconds.

2.2.5 Configuring Unknown Multicast Packets not Broadcasted within a VLAN

This configuration task enables or disables the function that stops unknown multicast packets from being broadcast within a VLAN. If this function is disabled but IGMP Snooping is enabled on the VLAN, multicast packets are broadcast within the VLAN when the destination multicast group has no member ports. When this function is enabled, however, multicast packets are forwarded only to the router ports and are not broadcast within the VLAN if no member port exists. Because the router regularly sends IGMP Query and PIM Hello packets, the switch can identify which ports are router ports. If there is neither a member port nor a router port, the packets are dropped instead of being forwarded.

Caution:

If neither IGMP Snooping nor Layer 3 multicast is enabled on the VLAN, unknown multicast packets are broadcast within the VLAN whether or not this function is enabled. Therefore, to prevent unknown multicast packets from flooding within a VLAN, you must enable IGMP Snooping in this VLAN and execute the igmp-snooping nonflooding-enable command.

Perform the following configuration in system view.

Table 2-5 Globally enable/disable multicast packets not broadcasted within a VLAN

| Operation | Command |
|---|---|
| Enable multicast packets not to be broadcasted within a VLAN | igmp-snooping nonflooding-enable |
| Disable multicast packets not to be broadcasted within a VLAN | undo igmp-snooping nonflooding-enable |

By default, unknown multicast packets are broadcast within the VLAN.
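The forwarding decision described in this section can be summarized in a few lines of Python. This is a sketch of the stated behavior only. The function name `egress_for_multicast`, its parameters, and the port labels are hypothetical, and the handling of a group that has member ports (forwarding to member ports and router ports) follows the description in section 2.1.2.

```python
def egress_for_multicast(vlan_ports, member_ports, router_ports, ingress,
                         snooping_enabled, nonflooding_enabled):
    """Return the ports one multicast frame is sent out of within a single VLAN."""
    others = set(vlan_ports) - {ingress}
    if not snooping_enabled:
        # Without IGMP Snooping the frame is flooded, whatever the nonflooding setting.
        return others
    if member_ports:
        # Known group: forward to member ports and router ports only.
        return (set(member_ports) | set(router_ports)) - {ingress}
    if not nonflooding_enabled:
        # Unknown group, function disabled: flood within the VLAN.
        return others
    # Unknown group, function enabled: router ports only; an empty set means drop.
    return set(router_ports) - {ingress}


vlan = {"p1", "p2", "p3", "p4"}
print(sorted(egress_for_multicast(vlan, set(), {"p1"}, "p2", True, True)))
```

With IGMP Snooping and the nonflooding function both enabled, a frame for a group without members leaves only through the router port; with no router port either, the returned set is empty and the frame is dropped.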

2.2.6 Configuring the Filtering Rule of Multicast Groups

On an IGMP Snooping-enabled switch, you can configure ACL rules that determine whether hosts in a VLAN can join a specified multicast group. This feature filters every received IGMP join packet. According to the destination group address of the packet and the ACL rule bound to the VLAN, the switch determines whether to discard the packet or let it pass.

By setting the filtering rule of multicast groups in the VLAN, you can control access to IP multicast groups. You can configure only one ACL rule for each VLAN; a newly configured rule replaces the old one.

Perform the following configuration in system view.

Table 2-6 Configure the filtering rule of multicast groups

| Operation | Command |
|---|---|
| Set the filtering rule of multicast groups in the specified VLAN | igmp-snooping group-policy acl-number |
| Cancel the filtering rule of multicast groups in the specified VLAN | undo igmp-snooping group-policy |

By default, no filtering rule is set for a VLAN. In this case, a host can join any multicast group.

Caution:

- If a nonexistent acl-number is bound to the VLAN, or if the bound ACL is not configured with any rule, a host is still allowed to join any multicast group.

- If no acl-number exists, you can also configure the filtering rule of multicast groups in VLAN view. That is, this rule is not restricted by the ACL itself, and is valid for all members in the specified VLAN.
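The effect of a group filtering rule can be sketched in Python as follows. The rule representation (a list of action and network pairs), first-match evaluation, and the denial of groups that match no rule are assumptions made for illustration; the source only states that a missing ACL or an ACL without rules lets a host join any group. The function name `report_permitted` is hypothetical.

```python
import ipaddress


def report_permitted(group, acl_rules):
    """Decide whether an IGMP report for `group` passes the VLAN's group policy.

    acl_rules is None when no ACL is bound or the bound ACL does not exist,
    otherwise a list of (action, network) pairs such as ("permit", "224.0.0.0/4").
    """
    if not acl_rules:
        # No policy, nonexistent ACL, or ACL without rules: any group may be joined.
        return True
    addr = ipaddress.ip_address(group)
    for action, network in acl_rules:
        if addr in ipaddress.ip_network(network):
            return action == "permit"   # assumption: the first matching rule decides
    return False                        # assumption: unmatched groups are denied


rules = [("deny", "225.0.0.0/8"), ("permit", "224.0.0.0/4")]
print(report_permitted("225.1.1.1", rules))  # False
print(report_permitted("226.1.1.1", rules))  # True
print(report_permitted("225.1.1.1", None))   # True
```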

2.2.7 Enabling/Disabling IGMP Snooping Fast Leave

When a fast leave-enabled port receives a leave packet, an IGMP Snooping-enabled Layer 2 switch removes the port from the member port list of the multicast group immediately. That is, the switch removes the port from the multicast group and no longer forwards multicast data to the port.

Table 2-7 Enable/Disable IGMP Snooping fast leave

| Operation | Command | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enable IGMP Snooping fast leave in system view | igmp-snooping fast-leave [ vlan { vlan-id [ to vlan-id ] } &<1-10> ] | IGMP Snooping fast leave is disabled by default. |
| Enter Ethernet port view | interface interface-type interface-number | - |
| Enable IGMP Snooping fast leave in Ethernet port view | igmp-snooping fast-leave [ vlan { vlan-id [ to vlan-id ] } &<1-10> ] | IGMP Snooping fast leave is disabled by default. |
| Disable IGMP Snooping fast leave | undo igmp-snooping fast-leave [ vlan { vlan-id [ to vlan-id ] } &<1-10> ] | - |

For detailed configuration, refer to the H3C S9500 Series Routing Switches Command Manual.

Note:

- Fast leave configurations made in system view and in Ethernet port view operate separately.

- If you configure fast leave in system view, it works on all ports of the specified VLANs. If you configure it in Ethernet port view, it works only on the current port in the specified VLANs (for example, when a Trunk port belongs to multiple VLANs).

Caution:

- Fast leave configured for a port takes effect only when the VLAN the port belongs to is IGMP Snooping-enabled.

- Fast leave does not work if the specified VLANs do not exist, the port does not belong to any of the specified VLANs, or the VLANs do not have IGMP Snooping enabled.

- A newly configured IGMP Snooping clears all existing fast leave configurations.

- The igmp-snooping fast-leave command has no effect if IGMP Snooping is not enabled globally. (You can execute the igmp-snooping enable command in system view to enable IGMP Snooping globally.)

- When you configure IGMP Snooping fast leave on aggregation ports, the configuration takes effect only on primary aggregation ports.

- If an IGMP V1 host of the same multicast group is attached to the port, the switch does not remove the port when the port receives an IGMP Leave packet for the multicast group, even if IGMP Snooping fast leave is enabled for the port.
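The difference between normal leave processing and fast leave, including the IGMP V1 exception noted above, can be sketched in Python. The function name `handle_leave`, the table layout, the return values, and the port labels are hypothetical and serve only to illustrate the behavior described in this section.

```python
def handle_leave(members, port, group, fast_leave_ports, v1_host_ports=frozenset()):
    """Process an IGMP leave received on `port` for `group`.

    members maps each group to the set of its member ports and is updated in place.
    Returns "removed", "query" (a specific query is needed), or "ignored".
    """
    if port not in members.get(group, set()):
        return "ignored"                 # the port is not a member of the group
    if port in fast_leave_ports and port not in v1_host_ports:
        members[group].discard(port)     # fast leave: remove the port immediately
        if not members[group]:
            del members[group]           # the group has no member ports left
        return "removed"
    # Fast leave disabled, or an IGMP V1 host shares the port: use the normal
    # specific query and maximum response timer procedure instead.
    return "query"


table = {"224.1.1.1": {"p1", "p2", "p3"}}
print(handle_leave(table, "p1", "224.1.1.1", fast_leave_ports={"p1", "p3"}))
print(handle_leave(table, "p2", "224.1.1.1", fast_leave_ports={"p1", "p3"}))
print(handle_leave(table, "p3", "224.1.1.1", fast_leave_ports={"p1", "p3"},
                   v1_host_ports={"p3"}))
```

Fast leave trades safety for speed: it suits ports with a single receiver, because any other host behind the same port loses the stream as soon as one host leaves.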

2.3 Multicast Static Routing Port Configuration

2.3.1 Introduction

By configuring a port in a VLAN to be a static routing port, you can enable IGMP packets to be transparently transmitted through the port, meeting the requirements of specific networks.

2.3.2 Prerequisites

- Ports and VLANs involved already exist.

- Ports to be configured belong to corresponding VLANs.

2.3.3 Configuring a Multicast Static Routing Port

You can configure a port in a VLAN to be a static routing port in VLAN view.

Table 2-8 Configure a port in a VLAN to be a static routing port in VLAN view

| Operation | Command | Description |
|---|---|---|
| Enter system view | system-view | - |
| Enter VLAN view | vlan vlan-id | - |
| Configure multicast static routing port | igmp-snooping mrouter port port-number | Provide the port-number argument in the format of interface-type interface-number, where the interface-type argument can only be Ethernet port type. By default, no static routing port is configured. |

You can also configure a port in a VLAN to be a static routing port in the corresponding Ethernet port view.

Table 2-9 Configure a port in a VLAN to be a static routing port in Ethernet port view

| Operation | Command | Description |
|---|---|---|
| Enter system view | system-view | - |
| Enter Ethernet port view | interface interface-type interface-number | The interface-type argument can only be Ethernet port type. |
| Configure multicast static routing port | igmp-snooping mrouter vlan vlan-id | By default, no static routing port is configured. |

Caution:

- The configuration fails if the port identified by the port-number argument does not exist or does not belong to the VLAN.

- The configuration fails if the VLAN identified by the vlan-id argument does not exist or the port does not belong to the VLAN.

- You can configure multiple ports in a VLAN as static routing ports by repeating the above configuration. Newly configured ports do not replace the existing static routing ports.

- When a trunk port belongs to multiple VLANs, the port can be configured as the static routing port for multiple VLANs.

- Static routing ports can be configured in VLAN view or Ethernet port view. However, you can verify the configured static routing ports only by executing the display this command in Ethernet port view.

- The configuration of a static routing port takes effect on the current port only, regardless of whether the current port is an aggregated port. To make all ports in an aggregation group static routing ports, configure the static routing port function on each port in the group.
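As an illustration of the two tables above, the following sketch configures the same static routing port from VLAN view and from Ethernet port view. VLAN 10 and port Ethernet 1/1/10 are assumed example values; the port is assumed to belong to the VLAN already.

```
# Option 1: configure the static routing port in VLAN view.
<H3C> system-view
[H3C] vlan 10
[H3C-vlan10] igmp-snooping mrouter port Ethernet 1/1/10
[H3C-vlan10] quit
# Option 2: configure the same port from Ethernet port view.
[H3C] interface Ethernet 1/1/10
[H3C-Ethernet1/1/10] igmp-snooping mrouter vlan 10
# Verify the static routing port configuration in Ethernet port view.
[H3C-Ethernet1/1/10] display this
```

Either option produces the same result, so one of them is sufficient. The verification step is run in Ethernet port view because that is the only place where the configuration can be checked.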

2.4 Displaying and Maintaining IGMP Snooping

Use the debugging mpm command in user view to carry out multicast debugging.

Table 2-10 Displaying and debugging IGMP Snooping

| Operation | Command |
|---|---|
| Display the information about current IGMP Snooping configuration | display igmp-snooping configuration |
| Display IGMP Snooping statistics of received and sent messages | display igmp-snooping statistics |
| Display IP/MAC multicast group information in the VLAN | display igmp-snooping group [ vlan vlan-id ] |
| Enable IGMP Snooping debugging | debugging mpm { abnormal \| all \| event \| forward \| groups \| packets \| timer } |
| Clear IGMP Snooping statistics information | reset igmp-snooping statistics |

2.5 IGMP Snooping Configuration Example

2.5.1 Enable IGMP Snooping

I. Networking requirements

To implement IGMP Snooping on the switch, you must first enable it. The switch is connected to the router through the router port and to user PCs through non-router ports.

II. Networking diagram

Figure 2-4 IGMP Snooping configuration networking

III. Configuration procedure

Suppose you need to enable IGMP Snooping on VLAN10. The procedures are as follows:

# Display the current state of IGMP Snooping.

<H3C> display igmp-snooping configuration

# If IGMP Snooping is not enabled, enable it in system view.

<H3C> system-view

System View: return to User View with Ctrl+Z.

[H3C] igmp-snooping enable

# Display the status of the VLAN10 interface, to check if PIM or IGMP is enabled on it.

[H3C] display current-configuration interface Vlan-interface 10

# You can enable IGMP Snooping in VLAN view only if PIM or IGMP is not running on VLAN10.

[H3C] vlan 10

[H3C-vlan10] igmp-snooping enable

2.6 Troubleshoot IGMP Snooping

Fault: Multicast function cannot be implemented on the switch.

Troubleshooting:

1) IGMP Snooping is disabled.

- Execute the display current-configuration command in any view to display the status of IGMP Snooping.

- If IGMP Snooping is not enabled, execute the igmp-snooping enable command in system view to enable IGMP Snooping globally. Then use the same command in VLAN view to enable IGMP Snooping in the corresponding VLAN.

2) The multicast forwarding table set up by IGMP Snooping is incorrect.

- Execute the display igmp-snooping group command in any view to check whether the multicast group is the expected one.

- If the multicast group created by IGMP Snooping is not correct, contact professional maintenance personnel for help.

- If the multicast group is correct, continue with diagnosis 3.

3) The multicast forwarding table set up at the bottom layer is incorrect.

- In any view, execute the display mac-address vlan command with the relevant vlan-id to check whether the MAC multicast forwarding table established at the bottom layer for that VLAN is consistent with the one established by IGMP Snooping.

- If they are not consistent, contact the maintenance personnel for help.
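The three diagnoses above can be walked through with a short command sequence such as the following sketch. VLAN 10 is an assumed example value, and display igmp-snooping configuration (from Table 2-10) is used here as one way to check the IGMP Snooping state. No output is shown because it depends on the device.

```
# Diagnosis 1: check whether IGMP Snooping is enabled.
<H3C> display igmp-snooping configuration
# Diagnosis 2: check the multicast groups created by IGMP Snooping in the VLAN.
<H3C> display igmp-snooping group vlan 10
# Diagnosis 3: compare with the MAC multicast forwarding entries of the same VLAN.
<H3C> display mac-address vlan 10
```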

Chapter 3 Multicast VLAN Configuration

3.1 Multicast VLAN Overview

With ordinary multicast on demand, when users in different VLANs request the same service, the multicast flow is duplicated in each VLAN, which wastes a great deal of bandwidth. The multicast VLAN feature solves this problem. With this feature, you can add switch ports to a multicast VLAN and enable IGMP Snooping so that users in different VLANs share the same multicast VLAN. The multicast flow is then transmitted in one multicast VLAN instead of multiple user VLANs, which saves considerable bandwidth.

Because the multicast VLAN is isolated from user VLANs, both security and sufficient bandwidth are guaranteed. After you configure the multicast VLAN, multicast information flow can be transmitted to users continuously.

3.2 Multicast VLAN Configuration

Multicast VLAN is based on layer 2 multicast. The following table describes the multicast VLAN configuration tasks:

Table 3-1 Configure multicast VLAN

| Item | Command | Description |
|---|---|---|
| Enter system view | system-view | - |
| Enable IGMP Snooping in system view | igmp-snooping enable | Required |
| Enter VLAN view | vlan vlan-id | - |
| Enable IGMP Snooping in VLAN view | igmp-snooping enable | Required |
| Enable multicast VLAN | service-type multicast | Required |
| Quit VLAN view | quit | - |
| Enter the view of the Ethernet port connected to the user | interface interface-type interface-number | - |
| Define the port type to hybrid | port link-type hybrid | Required |
| Add ports to corresponding VLANs | port hybrid vlan vlan-id-list untagged | Required |

Note:

- A port can only belong to one multicast VLAN.

- The type of the ports connected to user terminals must be hybrid untagged.

- The current system supports up to three multicast VLANs.

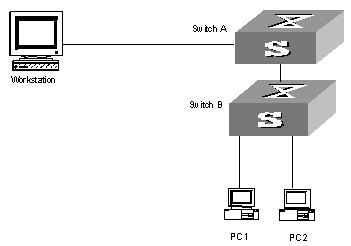

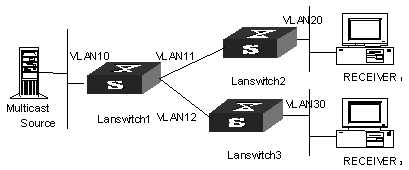

3.3 Multicast VLAN Configuration Example

I. Network requirements

Configure a multicast VLAN, so that users in VLAN 2 and VLAN 3 receive multicast flows through the multicast VLAN10.

Table 3-2 Device number and description

| Device | Description | Requirement |
|---|---|---|
| Switch A | Layer 3 switch | The IP address of the VLAN 2 interface is 168.10.1.1. Port E1/1/1 belongs to VLAN 2 and is connected to the workstation. The IP address of the VLAN 10 interface is 168.20.1.1. Port E1/1/10 belongs to VLAN 10 and is connected to Switch B. Configure Layer 3 multicast PIM DM and IGMP on VLAN 10. |
| Switch B | Layer 2 switch | VLAN 2 contains port E1/1/1 and VLAN 3 contains port E1/1/2. Ports E1/1/1 and E1/1/2 are connected to PC1 and PC2 respectively. Port E1/1/10 is connected to Switch A. |
| PC 1 | User 1 | PC1 is connected to port E1/1/1 of Switch B. |
| PC 2 | User 2 | PC2 is connected to port E1/1/2 of Switch B. |

II. Network diagram

Figure 3-1 Network diagram for multicast VLAN configuration

III. Configuration procedure

Before performing the following configurations, you should configure the IP addresses and connect the devices correctly.

1) Configure Switch A

# Configure the IP address of the VLAN 2 interface to 168.10.1.1. Enable the PIM-DM protocol.

<Switch A> system-view

System View: return to User View with Ctrl+Z.

[Switch A] multicast routing-enable

[Switch A] interface vlan-interface 2

[Switch A-Vlan-interface2] ip address 168.10.1.1 255.255.255.0

[Switch A-Vlan-interface2] pim dm

[Switch A-Vlan-interface2] quit

# Configure the IP address of the VLAN 10 interface to 168.20.1.1. Enable the PIM-DM and IGMP protocols.

[Switch A] interface vlan-interface 10

[Switch A-Vlan-interface10] ip address 168.20.1.1 255.255.255.0

[Switch A-Vlan-interface10] pim dm

[Switch A-Vlan-interface10] igmp enable

[Switch A-Vlan-interface10] quit

# Define Ethernet 1/1/10 as a trunk port and add this port to VLAN 10.

[Switch A] interface Ethernet1/1/10

[Switch A-Ethernet1/1/10] port link-type trunk

[Switch A-Ethernet1/1/10] port trunk permit vlan 10

2) Configure Switch B

# Enable IGMP Snooping.

<Switch B> system-view

System View: return to User View with Ctrl+Z.

[Switch B] igmp-snooping enable

# Enable IGMP-Snooping on VLAN 2 and VLAN 3.

[Switch B] vlan 2

[Switch B-vlan2] igmp-snooping enable

[Switch B-vlan2] quit

[Switch B] vlan 3

[Switch B-vlan3] igmp-snooping enable

[Switch B-vlan3] quit

# Configure VLAN 10 as multicast VLAN. Enable IGMP Snooping.

[Switch B] vlan 10

[Switch B-vlan10] igmp-snooping enable

[Switch B-vlan10] service-type multicast

[Switch B-vlan10] quit

# Define Ethernet 1/1/10 as trunk port. Add the port to VLAN 10.

[Switch B] interface Ethernet 1/1/10

[Switch B-Ethernet1/1/10] port link-type trunk

[Switch B-Ethernet1/1/10] port trunk permit vlan 10

[Switch B-Ethernet1/1/10] quit

# Define Ethernet 1/1/1 as a hybrid port. Add the port to VLAN 2 and VLAN 10. Make the port send packets of VLAN 2 and VLAN 10 without a VLAN tag. Set the default VLAN ID of the port to VLAN 2.

[Switch B] interface Ethernet 1/1/1

[Switch B-Ethernet1/1/1] port link-type hybrid

[Switch B-Ethernet1/1/1] port hybrid vlan 2 10 untagged

[Switch B-Ethernet1/1/1] port hybrid pvid vlan 2

[Switch B-Ethernet1/1/1] quit

# Define Ethernet 1/1/2 as a hybrid port. Add the port to VLAN 3 and VLAN 10. Make the port send packets of VLAN 3 and VLAN 10 without a VLAN tag. Set the default VLAN ID of the port to VLAN 3.

[Switch B] interface Ethernet 1/1/2

[Switch B-Ethernet1/1/2] port link-type hybrid

[Switch B-Ethernet1/1/2] port hybrid vlan 3 10 untagged

[Switch B-Ethernet1/1/2] port hybrid pvid vlan 3

[Switch B-Ethernet1/1/2] quit

Chapter 4 Common Multicast Configuration

4.1 Introduction to Common Multicast Configuration

Common multicast configuration applies to both the multicast group management protocol and the multicast routing protocols. It includes enabling IP multicast routing, displaying the multicast routing table and the multicast forwarding table, and so on.

4.2 Common Multicast Configuration

Common multicast configuration includes:

- Enabling multicast routing

- Configuring multicast route limit

- Clearing MFC (Multicast Forwarding Cache) forwarding entries or its statistic information

- Configuring managed multicast

- Clearing route entries from the kernel multicast routing table

- Configuring broadcast/multicast suppression

4.2.1 Enabling Multicast Routing

Enable multicast routing before enabling any multicast routing protocol.

Perform the following configuration in system view.

Table 4-1 Enabling multicast routing

| Operation | Command |
|---|---|
| Enable multicast routing | multicast routing-enable |
| Disable multicast routing | undo multicast routing-enable |

By default, multicast routing is disabled.

Caution:

Multicast routing must be enabled before other multicast configurations can take effect.

4.2.2 Configuring Multicast Routing Table Size Limit

Because too many multicast routing table entries may exhaust the router memory, you need to limit the size of the multicast routing table.

Perform the following configuration in system view.

Table 4-2 Configuring multicast routing table size limit

| Operation | Command |
|---|---|
| Configure multicast routing table size limit | multicast route-limit limit |
| Restore multicast routing table size limit to the default value | undo multicast route-limit |

By default, the maximum number of multicast routing table entries is 512.
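A minimal sketch of limiting the multicast routing table follows. The value 256 is an assumed example only; the valid range of the limit argument is not stated in this manual, so check the Command Manual before choosing a value.

```
<H3C> system-view
[H3C] multicast routing-enable
# Lower the limit from the default of 512 entries to 256 (example value).
[H3C] multicast route-limit 256
# Restore the default limit if the restriction is no longer needed.
[H3C] undo multicast route-limit
```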

4.2.3 Clearing MFC Forwarding Entries or Its Statistic Information

You can clear MFC forwarding entries or the statistic information of MFC forwarding entries with the following command.

Perform the following configuration in user view.

Table 4-3 Clearing MFC forwarding entries or its statistic information

| Operation | Command |
|---|---|
| Clear MFC forwarding entries or its statistic information | reset multicast forwarding-table [ statistics ] { all \| { group-address [ mask { group-mask \| group-mask-length } ] \| source-address [ mask { source-mask \| source-mask-length } ] \| incoming-interface { null NULL-interface-number \| interface-type interface-number } } * } |

4.2.4 Clearing Route Entries from the Kernel Multicast Routing Table

You can clear route entries from the kernel multicast routing table, as well as MFC forwarding entries via the following command.

Perform the following configuration in user view.

Table 4-4 Clearing routing entries of multicast routing table

| Operation | Command |
|---|---|
| Clear routing entries of multicast routing table | reset multicast routing-table { all \| { group-address [ mask { group-mask \| group-mask-length } ] \| source-address [ mask { source-mask \| source-mask-length } ] \| incoming-interface vlan-interface interface-number } * } |
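The simplest forms of the two reset commands, using only the all keyword from the syntax above, might look like the following sketch. Both commands are executed in user view.

```
# Clear only the statistic information of all MFC forwarding entries.
<H3C> reset multicast forwarding-table statistics all
# Clear all MFC forwarding entries.
<H3C> reset multicast forwarding-table all
# Clear all route entries from the kernel multicast routing table.
<H3C> reset multicast routing-table all
```

To clear a narrower set of entries, replace all with a group address, source address, or incoming interface as the command syntax allows.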

4.3 Managed multicast Configuration

4.3.1 Managed multicast Overview

The managed multicast feature controls users' authority to join multicast groups. This feature is port-based: users must first pass the 802.1x authentication set for their ports. They are then allowed to join the multicast groups specifically configured for them, but are prohibited from joining any multicast group they are not authorized to join. In this way, user access to specific multicast groups is kept under control.

Prerequisites of multicast authentication:

1) 802.1x is enabled both globally and on ports. Otherwise, when you enable managed multicast, all IGMP report messages are treated as legal, so the system allows users to join any group and cannot control access to multicast groups.

2) Managed multicast is port-based, so the 802.1x authentication mode on the port must be port-based. Otherwise, the system discards all IGMP report messages without any processing.

4.3.2 Configuring Managed Multicast

Perform the following configurations in system view.

Table 4-5 Configure managed multicast in system view

| Operation | Command |
|---|---|
| Enable managed multicast | ip managed-multicast |
| Disable managed multicast | undo ip managed-multicast |

Table 4-6 Set managed multicast for users in a specific domain

| Operation | Command |
|---|---|
| Set the multicast group which users in the specified domain are authorized to join | local-user multicast [ domain domain-name ] ip-address [ mask-length ] |
| Remove the multicast group which users in the specified domain are authorized to join | undo local-user multicast [ domain domain-name ] ip-address |

Perform the following configuration in local user view.

Table 4-7 Configure managed multicast in local user view

| Operation | Command |
|---|---|
| Set multicast group which users are authorized to join (managed multicast) | multicast ip-address [ ip-address &<1-9> ] |
| Remove the specified managed multicast | undo multicast { ip-address [ ip-address &<1-9> ] \| all } |

Caution:

In local user view, before executing this command, you must configure user service type to LAN-ACCESS, which is the only one supported by managed multicast at present.

4.3.3 Managed Multicast Configuration Example

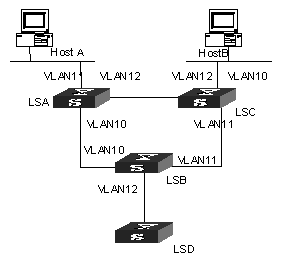

I. Network requirements

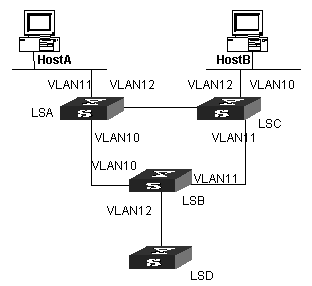

As shown in Figure 4-1, HostA and HostB join the multicast group. Layer 3 multicast is enabled on LSA, LSB, LSC and LSD. Managed multicast is enabled on LSA and LSC. Because managed multicast combines multicast with 802.1x, 802.1x must be enabled on LSA and LSC.

II. Network diagram

Figure 4-1 Network diagram for managed multicast

III. Configuration procedure

Managed multicast is a module combined with 802.1x, so you need to perform the following configuration in addition to the multicast configuration:

# Enable managed multicast globally.

<H3C> system-view

System View: return to User View with Ctrl+Z.

[H3C] ip managed-multicast

# Enable 802.1x globally.

[H3C] dot1x

# Enable 802.1x on the controlled ports (the access ports for LSA and LSC).

[H3C] interface GigabitEthernet2/1/1

[H3C-GigabitEthernet2/1/1] dot1x

[H3C-GigabitEthernet2/1/1] interface GigabitEthernet2/1/2

[H3C-GigabitEthernet2/1/2] dot1x

# Configure the authentication mode on the controlled ports to port-based mode.

[H3C-GigabitEthernet2/1/2] dot1x port-method portbased

[H3C-GigabitEthernet2/1/2] interface GigabitEthernet2/1/1

[H3C-GigabitEthernet2/1/1] dot1x port-method portbased

[H3C-GigabitEthernet2/1/1] quit

# Create a local-user in system view. Then set the password and service type for the user.

[H3C] local-user liu

[H3C-luser-liu] password simple aaa

[H3C-luser-liu] service-type lan-access

# In local user view, configure the multicast group that the user is allowed to join.

[H3C-luser-liu] multicast 227.1.1.1

4.4 Configuring Broadcast/Multicast Suppression

4.4.1 Introduction

To prevent port congestion resulting from broadcast/multicast packet flooding, the switch supports broadcast/multicast suppression. You can enable broadcast/multicast suppression by setting the speed percentage or bandwidth values.

4.4.2 Configuration

Table 4-8 Configuring Broadcast/Multicast Suppression

| Operation | Command | Description |
|---|---|---|
| Enter system view | system-view | - |
| Enter Ethernet port view | interface interface-type interface-number | Required. The interface-type must be Ethernet. |
| Configure the multicast suppression ratio on the Ethernet port | multicast-suppression { ratio \| bandwidth bandwidth } | Optional. By default, the multicast suppression ratio is 100%. |
| Configure the broadcast suppression ratio on the Ethernet port | broadcast-suppression { ratio \| bandwidth bandwidth } | Optional. By default, the broadcast suppression ratio is 50%. |

Caution:

- You cannot enable broadcast suppression and multicast suppression at the same time on the same card. That is, once you have enabled broadcast suppression on some ports of a card, you cannot enable multicast suppression on the other ports of the card, and vice versa.

- If multicast suppression is enabled, broadcast packets are suppressed as well, whereas broadcast suppression does not affect multicast packets.

- Multicast suppression makes no distinction between known multicast and unknown multicast.
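The following sketch shows multicast suppression on a single port. Port Ethernet 1/1/1 and the ratio 20 are assumed example values, and the ratio is assumed to be a percentage, as the default values in Table 4-8 suggest. The valid range is not stated in this manual.

```
<H3C> system-view
[H3C] interface Ethernet 1/1/1
# Limit multicast traffic on this port to a ratio of 20 (example value).
[H3C-Ethernet1/1/1] multicast-suppression 20
```

Because broadcast and multicast suppression cannot be enabled together on the same card, this example configures multicast suppression only. Broadcast packets on the port are then suppressed as well.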

4.5 Displaying and Debugging Common Multicast Configuration

After completing the above configuration, execute the display commands in any view to display the running state of the multicast configuration and verify its effect.

Execute the debugging commands in user view to debug multicast.

Table 4-9 Displaying and Debugging Common Multicast Configuration

| Operation | Command |
|---|---|
| Display the multicast routing table | display multicast routing-table [ group-address [ mask { mask \| mask-length } ] \| source-address [ mask { mask \| mask-length } ] \| incoming-interface { vlan-interface vlan-interface-number \| register } ]* |
| Display the multicast forwarding table | display multicast forwarding-table [ group-address [ mask { mask \| mask-length } ] \| source-address [ mask { mask \| mask-length } ] \| incoming-interface { interface-type interface-number \| null NULL-interface-number \| register } ]* |
| View port-specific multicast forwarding table information | display mpm forwarding-table [ group-address \| source-address ] |
| View IP multicast group and MAC multicast group information of all VLANs or a specific VLAN | display mpm group [ vlan vlan-id [ ip-address ] ] |
| Enable multicast packet forwarding debugging | debugging multicast forwarding |
| Disable multicast packet forwarding debugging | undo debugging multicast forwarding |
| Enable multicast forwarding status debugging | debugging multicast status-forwarding |
| Disable multicast forwarding status debugging | undo debugging multicast status-forwarding |
| Enable multicast kernel routing debugging | debugging multicast kernel-routing |
| Disable multicast kernel routing debugging | undo debugging multicast kernel-routing |

The multicast routing tables can be layered as follows:

- Each multicast routing protocol has its own multicast routing table.

- All the multicast routing tables are summarized into the multicast kernel routing tables.

- The multicast kernel routing tables should stay consistent with the multicast forwarding tables, which actually control the forwarding of multicast data packets.

The multicast forwarding tables are mainly used for debugging. Usually, users can view the multicast kernel routing tables to get the required information.
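A typical verification pass using the display commands from Table 4-9 might look like the following sketch. VLAN 10 is an assumed example value, and no output is shown because it depends on the device and its configuration.

```
# Display the multicast routing table.
<H3C> display multicast routing-table
# Display the multicast forwarding table (mainly useful for debugging).
<H3C> display multicast forwarding-table
# Display IP and MAC multicast group information for VLAN 10.
<H3C> display mpm group vlan 10
```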

Chapter 5 IGMP Configuration

5.1 IGMP Overview

5.1.1 Introduction to IGMP

Internet Group Management Protocol (IGMP) is a protocol in the TCP/IP suite responsible for managing IP multicast group members. It is used to establish and maintain multicast membership between IP hosts and their directly connected neighboring routers. IGMP does not cover the transmission and maintenance of membership information among multicast routers, which is handled by multicast routing protocols. All hosts participating in multicast must implement IGMP.

Hosts participating in IP multicast can join and leave a multicast group at any time. A multicast group can have any number of members, located anywhere. A multicast router does not need to, and cannot, keep the membership of all hosts. It only uses IGMP to learn whether receivers (that is, group members) of a multicast group are present on the subnet connected to each interface. A host only needs to keep track of which multicast groups it has joined.

IGMP is not symmetric between hosts and routers. Hosts need to respond to IGMP query messages from the multicast router, that is, report their group membership to the router. The router sends membership query messages periodically and uses the received responses to determine whether hosts on its subnets have joined a given group. When the router receives a report that a host is leaving a group, it sends a group-specific query (IGMP Version 2) to determine whether any members remain in the group.

So far, IGMP has three versions: IGMP Version 1 (defined by RFC 1112), IGMP Version 2 (defined by RFC 2236), and IGMP Version 3. At present, IGMP Version 2 is the most widely used version.

IGMP Version 2 boasts the following improvements over IGMP Version 1:

I. Election mechanism of multicast routers on the shared network segment

A shared network segment means that there are multiple multicast routers on a network segment. In this case, all routers running IGMP on the network segment can receive the membership report from hosts. Therefore, only one router is necessary to send membership query messages. In this case, the router election mechanism is required to specify a router as the querier.

In IGMP Version 1, the querier is selected by the multicast routing protocol. IGMP Version 2 specifies that, when there are multiple multicast routers on the same network segment, the multicast router with the lowest IP address is elected as the querier.

II. Leaving group mechanism

In IGMP Version 1, hosts leave the multicast group silently without informing the multicast router. The multicast router can then rely only on the timeout of the group's response timer to confirm that the hosts have left the group. In Version 2, when a host intends to leave, it sends a leave group message if it was the host that responded to the latest membership query message.

III. Specific group query

In IGMP Version 1, a query of a multicast router is targeted at all the multicast groups on the network segment, which is known as General Query.

In IGMP Version 2, Group-Specific Query is added in addition to General Query. The destination IP address of the query packet is the IP address of the multicast group, and the group address field in the packet also carries the IP address of the multicast group. This prevents hosts that are members of other multicast groups from sending response messages.

IV. Max response time

The Max Response Time is added in IGMP Version 2. It is used to dynamically adjust the allowed maximum time for a host to respond to the group query message.

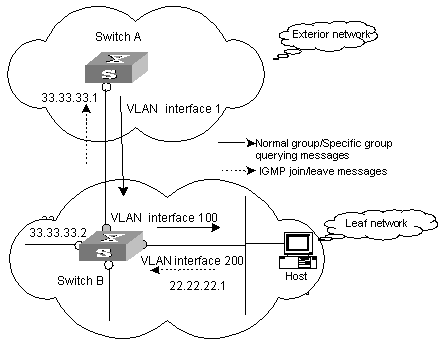

5.1.2 Introduction to IGMP Proxy

A large-scale PIM-DM (Protocol Independent Multicast-Dense Mode) network running a multicast routing protocol may contain many leaf networks. A leaf network here refers to an end node of a multicast forwarding tree, that is, a subnet that contains multicast clients only. Configuring and managing all these leaf networks is a heavy load.

You can ease the workload of configuring and managing leaf networks without affecting the multicast connections in them by enabling IGMP proxy on devices in these leaf networks.

After IGMP proxy is configured, the devices in leaf networks act as a host to the exterior network. They receive the multicast data of the associated group only when some of the hosts directly connected to them are multicast group members.

I. Description of IGMP proxy configuration

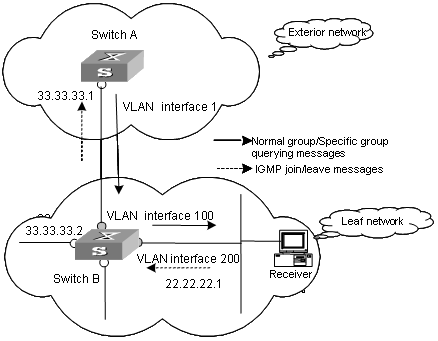

Figure 5-1 A schematic diagram of IGMP proxy

Figure 5-1 illustrates how IGMP proxy works. In this figure:

1) Switch B is configured as follows:

- Multicast is enabled.

- PIM and IGMP are configured on the interfaces of VLAN 100 and VLAN 200.

- The interface of VLAN 100 is configured as the IGMP proxy interface of the interface of VLAN 200.

2) Switch A is configured as follows:

- Multicast is enabled.

- PIM and IGMP are configured on the interface of VLAN 100.

- The pim neighbor-policy command is executed in VLAN 100 interface view to filter the PIM neighbors of the network segment 33.33.33.0/24, that is, to prevent Switch B from becoming a PIM neighbor.

II. Operating mechanism of IGMP Proxy

IGMP join/leave messages are processed as follows:

- After receiving an IGMP join/leave message sourced from a host through the interface of VLAN 200, Switch B changes the source address of the message to the IP address of the VLAN 100 interface (33.33.33.2), which is the outbound interface leading to Switch A.

- Switch B sends the IGMP message to Switch A.

- After receiving the IGMP message sent by Switch B through the interface of VLAN 100, Switch A processes it just as if it were sent by a host directly connected to the interface of VLAN 100.

IGMP general or group-specific query messages are processed as follows:

- After receiving a general or group-specific query message from Switch A, Switch B changes the source address of the query message to the address of the outbound interface leading to the hosts.

- Switch B transmits the message through the interface of VLAN 200.

5.2 IGMP Configuration

After the multicast function is enabled, you must enable IGMP on the interface first and then perform other IGMP configurations.

1) IGMP basic configuration includes:

- Enabling multicast

- Enabling IGMP on an interface

2) IGMP advanced configuration includes:

- Configuring a router to join a specified multicast group

- Configuring the IGMP query message interval

- Configuring the IGMP version

- Configuring the IGMP querier present timer

- Configuring the maximum query response time

- Configuring the number of times an IGMP Group-Specific Query packet is sent

- Configuring the interval of sending IGMP Group-Specific Query packets

- Configuring the limit of IGMP groups on an interface

- Deleting IGMP groups joined on an interface

- Configuring the filtering rule of multicast groups

- Enabling/disabling IGMP fast leaving

5.2.1 Enabling Multicast

Only if the multicast function is enabled can the multicast-related configurations take effect.

Refer to 4.2.1 Enabling Multicast Routing.

5.2.2 Enabling IGMP on an Interface

This configuration task is to enable IGMP on the interface which needs to maintain the multicast membership. After this, you can initiate IGMP feature configuration.

Perform the following configuration in VLAN interface view.

Table 5-1 Enabling/Disabling IGMP on an interface

| Operation | Command |
|---|---|
| Enable IGMP on an interface | igmp enable |
| Disable IGMP on an interface | undo igmp enable |

Caution:

- If VLAN VPN is enabled on a port, IGMP Snooping cannot be enabled on the VLAN of the port, and IGMP cannot be enabled on the corresponding VLAN interface.

- If IGMP Snooping is enabled on a VLAN, or IGMP is enabled on the VLAN interface, you cannot add a port on which VLAN VPN is enabled to the VLAN.

By default, IGMP is not enabled.

5.2.3 Configuring the IGMP Version

Perform the following configuration in VLAN interface view.

Table 5-2 Configuring the IGMP version

| Operation | Command |
|---|---|
| Select the IGMP version that the router uses | igmp version { 2 \| 1 } |
| Restore the default setting | undo igmp version |

By default, IGMP Version 2 is used.

Caution:

The system does not support automatic switching between different IGMP versions. Therefore, all routers on a subnet must be configured to run the same IGMP version.

5.2.4 Configuring the Interval to Send IGMP Query Message

Multicast routers send IGMP query messages to discover which multicast groups are present on attached networks. Multicast routers send query messages periodically to refresh their knowledge of members present on their networks.

Perform the following configuration in VLAN interface view.

Table 5-3 Configuring the interval to send IGMP query message

| Operation | Command |
|---|---|
| Configure the interval to send IGMP query message | igmp timer query seconds |
| Restore the default value | undo igmp timer query |

When there are multiple multicast routers on a network segment, the querier is responsible for sending IGMP query messages to all hosts on the LAN.

By default, the interval is 60 seconds.
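The basic IGMP settings described so far can be combined as in the following sketch. VLAN interface 10 and the 120-second query interval are assumed example values; the valid range of the seconds argument is not stated in this manual.

```
<H3C> system-view
# Multicast routing must be enabled before IGMP can take effect.
[H3C] multicast routing-enable
[H3C] interface vlan-interface 10
[H3C-Vlan-interface10] igmp enable
# Explicitly select IGMP Version 2 (this is already the default).
[H3C-Vlan-interface10] igmp version 2
# Send IGMP query messages every 120 seconds instead of the default 60.
[H3C-Vlan-interface10] igmp timer query 120
```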

5.2.5 Configuring the Interval and the Number of Querying IGMP Packets

On the shared network, it is the query router (querier) that maintains IGMP membership on the interface. The igmp lastmember-queryinterval and igmp robust-count commands are used to configure the interval and times of sending IGMP group-specific query packets for the querier when it receives an IGMP leave message from a host.

- The host sends the IGMP Leave message.

- Upon receiving the message, the IGMP querier sends the group-specific IGMP query message a specified number of times (defined by robust-value in igmp robust-count; the default is 2) at a fixed interval (defined by seconds in igmp lastmember-queryinterval; the default is 1 second).

- When other hosts receive the message from the IGMP querier and are interested in this group, they return the IGMP Membership Report message within the defined maximum response time.

- If the IGMP querier receives report messages from other hosts within the period equal to robust-value × seconds, it continues membership maintenance for this group.

- If it receives no report message from any other host within this period, it treats this as a timeout and ends membership maintenance for this group.

This configuration takes effect only when the querier runs IGMP version 2. If a host runs IGMP Version 1, it does not send IGMP Leave Group message when it leaves a group. In this case, this configuration does not work for the host.
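The timing in the leave process above can be checked with a short calculation. The following Python sketch is illustrative only and is not part of the switch software. The function names are hypothetical, and the assumption that the first group-specific query is sent immediately after the Leave message arrives is not stated in this manual.

```python
def last_member_timeout(robust_value=2, interval_seconds=1):
    """Return the period (robust-value x seconds) the querier waits for a report."""
    if robust_value < 1 or interval_seconds < 1:
        raise ValueError("robust_value and interval_seconds must be positive")
    return robust_value * interval_seconds


def query_send_offsets(robust_value=2, interval_seconds=1):
    """Return offsets, in seconds after the Leave, of each group-specific query.

    Assumption: the first query goes out as soon as the Leave is received.
    """
    return [i * interval_seconds for i in range(robust_value)]


if __name__ == "__main__":
    # Defaults from this section: robust-value 2, interval 1 second.
    print(last_member_timeout())        # waiting period with default settings
    print(query_send_offsets(3, 2))     # three queries sent 2 seconds apart
```

With the default settings, the querier stops maintaining the group 2 seconds after the Leave message if no other host reports. Increasing either value lengthens this period.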

Please perform the following configurations in VLAN interface view.

I. Configuring interval for querying IGMP packets

Table 5-4 Configuring interval for querying IGMP packets

| Operation | Command |
|---|---|
| Configure interval for querying IGMP packets | igmp lastmember-queryinterval seconds |
| Restore the default query interval | undo igmp lastmember-queryinterval |

By default, the interval is 1 second.

II. Configuring the number of last member querying

Table 5-5 Configuring the number of last member querying

| Operation | Command |
|---|---|
| Configure number of last member querying | igmp robust-count robust-value |
| Restore the default number of querying | undo igmp robust-count |

By default, an IGMP group-specific query message is sent twice.

5.2.6 Configuring the Present Time of IGMP Querier

On a shared network, namely a network segment where multiple multicast routers exist, a query router (querier for short) sends query messages on the interface regularly. If a non-query router fails to receive messages from the querier within a period of time, it considers the querier to have failed and takes over the job of the original querier.

In IGMP Version 1, the querier selection is determined by the multicast routing protocol; in IGMP Version 2, the router with the lowest IP address on a shared network segment acts as the querier.

The IGMP querier presence time is the period of time before the router takes over as the querier sending query messages, after the previous querier has stopped doing so.
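The IGMP Version 2 selection rule and the takeover condition can be sketched as follows. This Python fragment is an illustration under stated assumptions, not the switch implementation. The function names and sample addresses are hypothetical, and treating the querier as failed exactly when the silent period reaches the presence time is an assumption.

```python
import ipaddress


def elect_querier(router_addresses):
    """Return the lowest IPv4 address on the segment (IGMP Version 2 rule)."""
    if not router_addresses:
        raise ValueError("no multicast routers on the segment")
    # Compare addresses numerically, not as strings.
    return str(min(ipaddress.IPv4Address(a) for a in router_addresses))


def querier_presumed_failed(seconds_since_last_query, other_querier_present=120):
    """Return True once no query has been heard for the presence time.

    Assumption: the boundary value itself counts as expired.
    """
    return seconds_since_last_query >= other_querier_present


if __name__ == "__main__":
    routers = ["10.1.1.10", "10.1.1.9", "10.1.1.200"]  # hypothetical addresses
    print(elect_querier(routers))
    print(querier_presumed_failed(119), querier_presumed_failed(120))
```

Note that the comparison is numeric: 10.1.1.9 is lower than 10.1.1.10, although a plain string comparison would order them the other way.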

Perform the following configuration in VLAN interface view.

Table 5-6 Configuring the present time of IGMP querier

| Operation | Command |
|---|---|
| Change the present time of IGMP querier | igmp timer other-querier-present seconds |
| Restore the default value | undo igmp timer other-querier-present |

By default, the value is twice the IGMP query message interval, namely 120 seconds.

5.2.7 Configuring Maximum Response Time for IGMP Query Message

When a host receives a query message, it sets a timer for each multicast group it belongs to. The value of each timer is randomly selected between 0 and the maximum response time. When any timer reaches 0, the host sends the membership report message for the corresponding multicast group.

Setting the maximum response time appropriately enables hosts to respond to query messages quickly, so that the router can quickly learn the current membership status of the multicast groups.
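The per-group timers that a host sets on receiving a query can be modeled as below. This is a minimal Python sketch for illustration. The function names and group addresses are hypothetical, and the use of a uniform distribution is an assumption, since the text only says that the value is selected randomly.

```python
import random


def schedule_reports(groups, max_response_time=10.0, rng=None):
    """Pick a random report delay in [0, max_response_time] for each group."""
    rng = rng or random.Random()
    # Assumption: delays are uniformly distributed over the allowed range.
    return {group: rng.uniform(0.0, max_response_time) for group in groups}


def first_report(delays):
    """Return the (group, delay) pair whose timer expires first."""
    return min(delays.items(), key=lambda item: item[1])


if __name__ == "__main__":
    delays = schedule_reports(["225.1.1.1", "225.1.1.2"], max_response_time=10.0)
    group, delay = first_report(delays)
    print(f"first report: group {group} after {delay:.2f} s")
```

A smaller maximum response time narrows the range of the delays, so reports arrive sooner but are more closely bunched together.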

Perform the following configuration in VLAN interface view.

Table 5-7 Configuring the maximum response time for IGMP query message

| Operation | Command |
|---|---|
| Configure the maximum response time for IGMP query message | igmp max-response-time seconds |
| Restore the maximum query response time to the default value | undo igmp max-response-time |

The smaller the maximum query response time value, the faster the router prunes groups. The actual response time is a random value in the range from 1 to 25 seconds. By default, the maximum query response time is 10 seconds.

5.2.8 Configuring the Limit of IGMP Groups on an Interface

If there is no limit to the number of IGMP groups added on a router interface or a router, the router memory may be exhausted, which may cause router failure.

You can set a limit on the number of IGMP groups added on an interface. You cannot set the limit on the number of IGMP groups added on the router as a whole, which is defined by the system.

Perform the following configuration in VLAN interface view.

Table 5-8 Configuring the limit of IGMP groups on an interface

| Operation | Command |
|---|---|
| Configure the limit of IGMP groups on an interface | igmp group-limit limit |
| Restore the limit of IGMP groups on an interface to the default value | undo igmp group-limit |

By default, the maximum number of IGMP groups on an interface is 512.
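The effect of a per-interface limit can be illustrated with a small model. The Python sketch below is hypothetical and does not describe the switch internals. In particular, it assumes that a join for a new group is simply ignored once the limit is reached, which this manual does not specify.

```python
class InterfaceGroupTable:
    """Toy model of the IGMP groups joined on one interface."""

    def __init__(self, limit=512):
        self.limit = limit      # corresponds to igmp group-limit
        self.groups = set()

    def join(self, group):
        """Record a join; return False if the limit blocks a new group."""
        if group in self.groups:
            return True         # already a member, nothing to add
        if len(self.groups) >= self.limit:
            return False        # assumption: joins beyond the limit are ignored
        self.groups.add(group)
        return True


if __name__ == "__main__":
    table = InterfaceGroupTable(limit=2)
    for g in ["225.1.1.1", "225.1.1.2", "225.1.1.3"]:
        print(g, table.join(g))
```

Bounding the table in this way keeps memory use on the interface predictable no matter how many groups hosts attempt to join.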

5.2.9 Configuring a Router to Join Specified Multicast Group

Usually, a host running IGMP responds to the IGMP query packets of the multicast router. If the host fails to respond, the multicast router considers that there is no multicast member on this network segment and cancels the corresponding path. Configuring an interface of the router as a multicast member avoids this problem. When the interface receives an IGMP query packet, the router responds, thus ensuring that the network segment where the interface is located continues to receive multicast packets.

For an Ethernet switch, you can configure a port in a VLAN interface to join a multicast group.

Perform the following configuration in the corresponding view.

Table 5-9 Configuring a router to join specified multicast group

| Operation | Command |
|---|---|
| Configure the router to join a specified multicast group (in VLAN interface view) | igmp host-join group-address port interface-type interface-number [ to interface-type interface-number ] |
| Cancel the configuration (in VLAN interface view) | undo igmp host-join group-address port interface-type interface-number [ to interface-type interface-number ] |
| Configure the router to join a specified multicast group (in Ethernet port view) | igmp host-join group-address vlan vlan-id |
| Cancel the configuration (in Ethernet port view) | undo igmp host-join group-address vlan vlan-id |

Note:

The above two configuration methods have the same result (both take effect on the port). You can select either of them.

By default, a router joins no multicast group. Note that the specified port must belong to the VLAN interface on which IGMP is enabled. Otherwise, the configuration does not take effect.

5.2.10 Deleting IGMP Groups Joined on an Interface

This configuration task is to delete all IGMP groups joined on all interfaces or specific interfaces of the router, or to delete the IGMP groups at the specific address or in the specific network segment on the specific interfaces of the router.

Perform the following configuration in user view.

Table 5-10 Deleting IGMP groups joined on an interface

| Operation | Command |
|---|---|
| Delete IGMP groups joined on an interface | reset igmp group { all \| interface vlan-interface interface-number { all \| group-address [ group-mask ] } } |

After a group is deleted, the interfaces can join the corresponding group again if new IGMP membership report messages arrive.

5.2.11 Configuring the Filtering Rule of Multicast Groups

On an IGMP Snooping-enabled switch, you can configure ACL rules to control whether hosts in a VLAN can join a specified multicast group. This feature filters every received IGMP join packet. According to the destination group address of the packet and the ACL rule bound to the VLAN, the switch determines whether to discard the packet or let it pass.

By setting the filtering rule of multicast groups in the VLAN, you can control access to IP multicast groups. You can configure only one ACL rule for each VLAN, and a newly configured rule replaces the old one.

Perform the following configuration in VLAN view.

Table 5-11 Configuring the filtering rule of multicast groups

| Operation | Command |
|---|---|
| Set the filtering rule of multicast groups in the specified VLAN | igmp-snooping group-policy acl-number |
| Cancel the filtering rule of multicast groups in the specified VLAN | undo igmp-snooping group-policy |

By default, no filtering rule is set for a VLAN. In this case, a host can join any multicast group.

Caution:

- If a nonexistent acl-number is bound to the VLAN, or if the bound acl-number is not configured with a rule, a host is still allowed to join any multicast group.

- You can configure the filtering rule of multicast groups in VLAN view even if the specified acl-number does not exist. That is, the configuration is not restricted by the ACL itself, and it is valid for all members in the specified VLAN.
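The filtering decision described in this section can be sketched as follows. This Python fragment is a simplified model, not the switch implementation. The function name, the rule format, and the sample group ranges are hypothetical. First-match evaluation and denying a group that matches no rule are assumptions about ACL behavior that this manual does not state.

```python
import ipaddress


def group_allowed(group, rules):
    """Decide whether a join for `group` passes the VLAN's filtering rule.

    rules: list of (action, network) tuples, such as ("permit", "225.0.0.0/8").
    """
    if not rules:
        # No ACL bound, or the bound ACL has no rule: any group may be joined.
        return True
    address = ipaddress.IPv4Address(group)
    for action, network in rules:
        if address in ipaddress.ip_network(network):
            return action == "permit"   # assumption: first matching rule wins
    return False                        # assumption: unmatched groups are denied


if __name__ == "__main__":
    rules = [("deny", "225.1.1.0/24"), ("permit", "225.0.0.0/8")]
    for g in ["225.1.1.1", "225.2.0.1", "239.1.1.1"]:
        print(g, group_allowed(g, rules))
```

Because only one ACL can be bound to each VLAN, the model takes a single rule list per call. Binding a new ACL corresponds to passing a different list.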

5.2.12 Enabling/Disabling IGMP Fast Leaving

When a fast leave-enabled port receives an IGMP leave packet, an IGMP-enabled Layer 3 switch no longer sends group-specific query packets for that multicast group to the port. Instead, the switch removes the port from the outbound port lists of all Layer 3 multicast forwarding entries for that multicast group. That is, the switch stops forwarding the multicast data of the group to the port.
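The difference between fast leave and normal leave processing can be modeled in a few lines. The Python sketch below is illustrative only. The function name, the port names, and the returned strings are hypothetical, and the forwarding table is reduced to a mapping from each group to its set of outbound ports.

```python
def handle_leave(port, group, outbound_ports, fast_leave_ports):
    """Process an IGMP leave received on `port` for `group`.

    outbound_ports: dict mapping group address to a set of outbound ports.
    fast_leave_ports: set of ports on which fast leave is enabled.
    Returns "removed" if the port was dropped at once, otherwise "query".
    """
    if port in fast_leave_ports:
        # Fast leave: drop the port immediately, without group-specific queries.
        outbound_ports.get(group, set()).discard(port)
        return "removed"
    # Normal processing: the querier sends group-specific queries first.
    return "query"


if __name__ == "__main__":
    table = {"225.1.1.1": {"Ethernet1/1/1", "Ethernet1/1/2"}}  # hypothetical ports
    print(handle_leave("Ethernet1/1/1", "225.1.1.1", table, {"Ethernet1/1/1"}))
    print(table)
```

Fast leave suits ports with a single attached receiver. On a port shared by several hosts, removing the port immediately would also cut off the remaining members of the group.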

Perform the following configuration in Ethernet port view or system view.

Table 5-12 Enable/Disable IGMP fast leave

| Operation | Command | Remarks |
|---|---|---|
| Enter system view | system-view | - |
| Enable IGMP fast leave in system view | igmp fast-leave [ vlan { vlan-id [ to vlan-id ] } &<1-10> ] | IGMP fast leave is disabled by default |
| Enter Ethernet port view | interface interface-type interface-number | - |
| Enable IGMP fast leave in Ethernet port view | igmp fast-leave [ vlan { vlan-id [ to vlan-id ] } &<1-10> ] | IGMP fast leave is disabled by default |
| Disable IGMP fast leave | undo igmp fast-leave [ vlan { vlan-id [ to vlan-id ] } &<1-10> ] | - |

For detailed configuration, refer to the H3C S9500 Series Routing Switches Command Manual.

Note:

- Fast leave settings configured in system view and in Ethernet port view operate independently of each other.

- The configuration made in system view takes effect on the ports in all the specified VLANs, while the configuration made in Ethernet port view takes effect only on that port in the specified VLANs (for example, when a trunk port belongs to multiple VLANs).

Caution: