12-High Availability Configuration Guide

05-Load balancing configuration

Configuring server load balancing

NAT-mode server load balancing

Indirect-mode server load balancing

Server load balancing tasks at a glance

Relationship between configuration items

Adding and configuring a server farm member

Configuring scheduling algorithms for a server farm

Setting the availability criteria

Enabling the slow online feature

Configuring intelligent monitoring

Configuring the action to take when a server farm is busy

Specifying a fault processing method

Creating a real server and specifying a server farm

Specifying an IP address and port number

Configuring the bandwidth and connection parameters

Enabling the slow offline feature

Setting the bandwidth ratio and maximum expected bandwidth

Disabling VPN instance inheritance

Virtual server tasks at a glance for Layer 4 server load balancing

Virtual server tasks at a glance for Layer 7 server load balancing

Configuring a TCP virtual server to operate at Layer 7

Specifying the VSIP and port number

Configuring the bandwidth and connection parameters

Enabling per-packet load balancing for UDP traffic

Configuring the HTTP redirection feature

Specifying a parameter profile

Applying an LB connection limit policy

Enabling IP address advertisement for a virtual server

Specifying an interface for sending gratuitous ARP packets and ND packets

Creating a match rule that references an LB class

Creating a source IP address match rule

Creating an interface match rule

Creating a user group match rule

Creating a TCP payload match rule

Creating an HTTP content match rule

Creating an HTTP cookie match rule

Creating an HTTP header match rule

Creating an HTTP URL match rule

Creating an HTTP method match rule

Creating a RADIUS attribute match rule

Configuring a forwarding LB action

Configuring a modification LB action

Specifying a response file for matching HTTP requests

Specifying a response file used upon load balancing failure

About configuring an LB policy

Specifying the default LB action

Sticky group tasks at a glance for Layer 4 server load balancing

Sticky group tasks at a glance for Layer 7 server load balancing

Configuring the IP sticky method

Configuring the HTTP content sticky method

Configuring the HTTP cookie sticky method

Configuring the HTTP header sticky method

Configuring the HTTP or UDP payload sticky method

Configuring the RADIUS attribute sticky method

Configuring the SIP call ID sticky method

Configuring the SSL sticky method

Configuring the timeout timer for sticky entries

Ignoring the limits for sessions that match sticky entries

Enabling stickiness-over-busyness

Configuring a parameter profile

Parameter profile tasks at a glance

Configuring the ToS field in IP packets sent to the client

Configuring the maximum local window size for TCP connections

Configuring the idle timeout for TCP connections

Configuring the TIME_WAIT state timeout time for TCP connections

Configuring the retransmission timeout time for SYN packets

Configuring the TCP keepalive parameters

Configuring the FIN-WAIT-1 state timeout time for TCP connections

Configuring the FIN-WAIT-2 state timeout time for TCP connections

Setting the MSS for the LB device

Configuring the TCP payload match parameters

Enabling load balancing for each HTTP request

Configuring connection reuse between the LB device and the server

Modifying the header in each HTTP request or response

Disabling case sensitivity matching for HTTP

Configuring the maximum length to parse the HTTP content

Configuring secondary cookie parameters

Specifying the action to take when the header of an HTTP packet exceeds the maximum length

Configuring the HTTP compression feature

Configuring the HTTP statistics feature

Configuring an LB probe template

About configuring an LB probe template

Configuring a TCP-RST LB probe template

Configuring a TCP zero-window LB probe template

Configuring an LB connection limit policy

Performing a load balancing test

About performing a load balancing test

Performing an IPv4 load balancing test

Performing an IPv6 load balancing test

Enabling load balancing logging

Enabling load balancing basic logging

Enabling load balancing NAT logging

Displaying and maintaining server load balancing

Server load balancing configuration examples

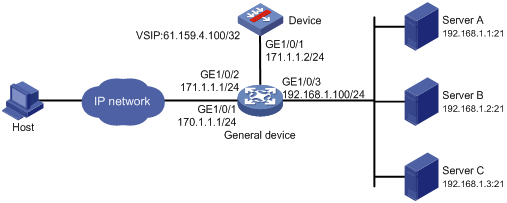

Example: Configuring basic Layer 4 server load balancing

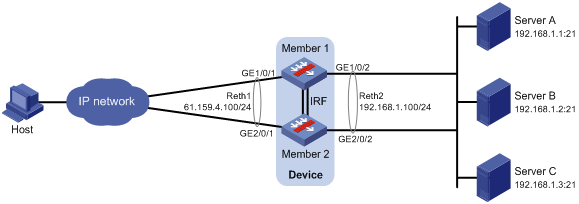

Example: Configuring Layer 4 server load balancing hot backup

Example: Configuring basic Layer 7 server load balancing

Example: Configuring Layer 7 server load balancing SSL termination

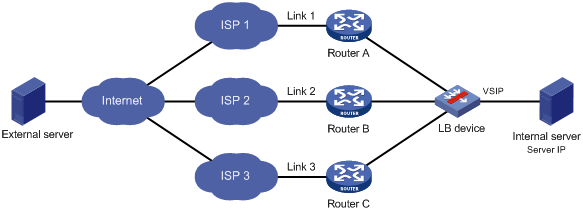

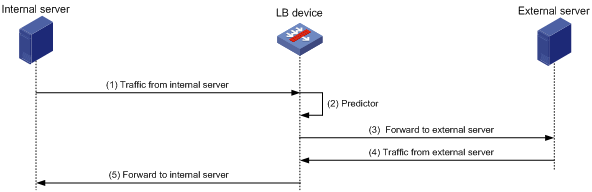

Configuring outbound link load balancing

About outbound link load balancing

Outbound link load balancing tasks at a glance

Relationship between configuration items

Adding and configuring a link group member

Configuring a scheduling algorithm for a link group

Setting the availability criteria

Enabling the slow online feature

Specifying a fault processing method

Configuring the proximity feature

Creating a link and specifying a link group

Specifying an outbound next hop for a link

Specifying an outgoing interface for a link

Configuring the bandwidth and connection parameters

Enabling the slow offline feature

Setting the link cost for proximity calculation

Setting the bandwidth ratio and maximum expected bandwidth

Disabling VPN instance inheritance for a link

Virtual server tasks at a glance

Specifying the VSIP and port number

Specifying a parameter profile

Configuring the bandwidth and connection parameters

Enabling the link protection feature

Enabling bandwidth statistics collection by interfaces

Creating a match rule that references an LB class

Creating a source IP address match rule

Creating a destination IP address match rule

Creating an input interface match rule

Creating a user group match rule

Creating a domain name match rule

Creating an application group match rule

Configuring a forwarding LB action

Configuring the ToS field in IP packets sent to the server

Specifying the default LB action

Sticky group tasks at a glance

Configuring the IP sticky method

Configuring the timeout time for sticky entries

Ignoring the limits for sessions that match sticky entries

Configuring a parameter profile

About configuring a parameter profile

Configuring the ToS field in IP packets sent to the client

About configuring ISP information

Configuring ISP information manually

Setting the aging time for DNS cache entries

Performing a load balancing test

About performing a load balancing test

Performing an IPv4 load balancing test

Performing an IPv6 load balancing test

Enabling load balancing logging

Enabling load balancing basic logging

Enabling load balancing NAT logging

Enabling load balancing link busy state logging

Displaying and maintaining outbound link load balancing

Outbound link load balancing configuration examples

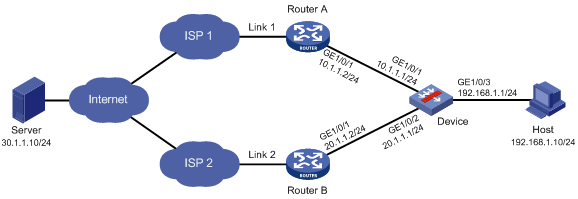

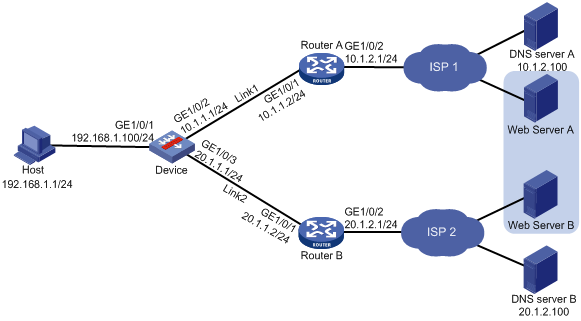

Example: Configuring outbound link load balancing

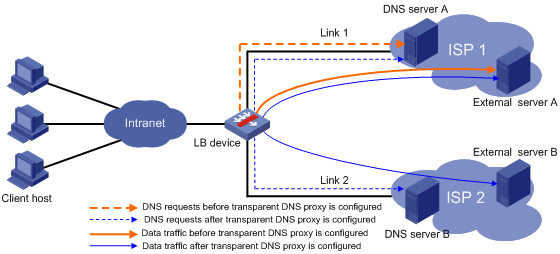

Configuring transparent DNS proxies

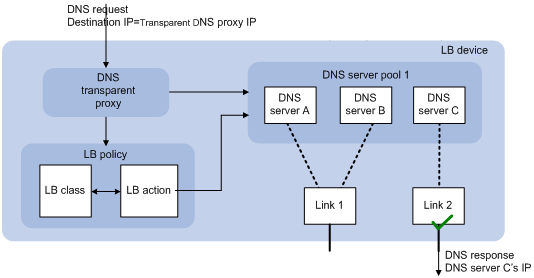

Transparent DNS proxy on the LB device

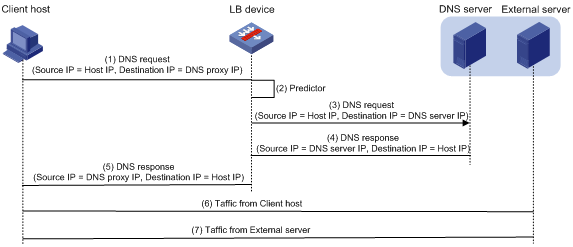

Transparent DNS proxy tasks at a glance

Configuring a transparent DNS proxy

Transparent DNS proxy tasks at a glance

Creating a transparent DNS proxy

Specifying an IP address and port number

Specifying the default DNS server pool

Enabling the link protection feature

Enabling the transparent DNS proxy

Adding and configuring a DNS server pool member

Configuring a scheduling algorithm for a DNS server pool

Creating a DNS server and specifying a DNS server pool

Specifying an IP address and port number

Enabling the device to automatically obtain the IP address of a DNS server

Associating a link with a DNS server

Specifying an outbound next hop for a link

Specifying an outgoing interface for a link

Configuring the maximum bandwidth

Setting the bandwidth ratio and maximum expected bandwidth

Creating a match rule that references an LB class

Creating a source IP address match rule

Creating a destination IP address match rule

Creating a domain name match rule

Configuring a forwarding LB action

Configuring the ToS field in IP packets sent to the DNS server

Specifying the default LB action

Sticky group tasks at a glance

Configuring the IP sticky method

Configuring the timeout time for sticky entries

Enabling load balancing logging

Enabling load balancing NAT logging

Enabling load balancing link busy state logging

Displaying and maintaining transparent DNS proxy

Transparent DNS proxy configuration examples

Example: Configuring transparent DNS proxy

Load balancing overview

Load balancing (LB) is a cluster technology that distributes services among multiple network devices or links.

Advantages of load balancing

Load balancing has the following advantages:

· High performance—Improves overall system performance by distributing services to multiple devices or links.

· Scalability—Meets increasing service requirements without compromising service quality by easily adding devices or links.

· High availability—Improves overall availability by using backup devices or links.

· Manageability—Simplifies configuration and maintenance by centralizing management on the load balancing device.

· Transparency—Preserves the transparency of the network topology for end users. Adding or removing devices or links does not affect services.

Load balancing types

LB includes the following types:

· Server load balancing—Commonly deployed in data centers. Network services are distributed to multiple servers or firewalls to enhance their processing capabilities.

· Link load balancing—Applies to networks that have multiple carrier links. It selects links dynamically to improve link utilization. Link load balancing supports IPv4 and IPv6, but does not support IPv4-to-IPv6 packet translation. Based on the direction of connection requests, link load balancing is classified into the following types:

o Outbound link load balancing—Load balances traffic among the links from the internal network to the external network.

o Transparent DNS proxy—Load balances DNS requests among the links from the internal network to the external network.

Configuring server load balancing

About server load balancing

Server load balancing types

Server load balancing is classified into Layer 4 server load balancing and Layer 7 server load balancing.

· Layer 4 server load balancing—Identifies network layer and transport layer information and is implemented based on flows. It distributes all packets of the same flow to the same server. Layer 4 server load balancing cannot distribute Layer 7 services based on content.

· Layer 7 server load balancing—Identifies network layer, transport layer, and application layer information and is implemented based on content. It analyzes packet contents, distributes packets one by one based on their contents, and distributes connections to the specified servers according to predefined policies. Layer 7 server load balancing therefore applies to a wider range of load balancing scenarios.

Server load balancing supports IPv4 and IPv6, but Layer 4 server load balancing does not support IPv4-to-IPv6 or IPv6-to-IPv4 translation.

Deployment modes

Server load balancing supports two deployment modes: NAT mode and indirect mode.

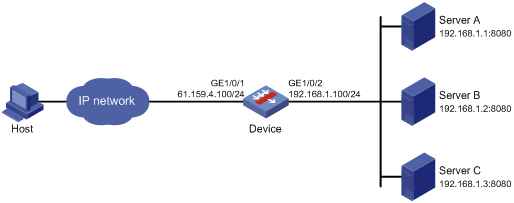

NAT-mode server load balancing

NAT-mode network diagram

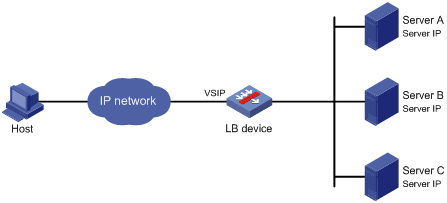

As shown in Figure 1, NAT-mode server load balancing contains the following elements:

· LB device—Distributes different service requests to multiple servers.

· Server—Responds to and processes different service requests.

· VSIP—Virtual service IP address of the cluster, used for users to request services.

· Server IP—IP address of a server, used by the LB device to distribute requests.

NAT-mode implementation modes

NAT-mode server load balancing is implemented through the following modes:

· Destination NAT (DNAT).

· Source NAT (SNAT).

· DNAT + SNAT.

DNAT mode

DNAT-mode server load balancing requires you to change the gateway of each server, or configure a static route on each server, so that packets destined for the host are sent through the LB device.

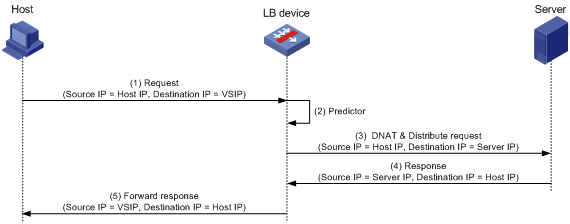

Figure 2 DNAT-mode server load balancing workflow

Table 1 Workflow description

| Description | Source IP address | Destination IP address |
|---|---|---|
| 1. The host sends a request. | Host IP | VSIP |
| 2. When the LB device receives the request, it uses a scheduling algorithm to select the server to which it distributes the request. | N/A | N/A |
| 3. The LB device uses DNAT to distribute the request, with Server IP as the destination IP address. | Host IP | Server IP |
| 4. The server receives and processes the request and then sends a response. | Server IP | Host IP |
| 5. The LB device receives the response, translates the source IP address to the VSIP, and forwards the response to the requesting host. | VSIP | Host IP |
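The address rewriting in Table 1 can be sketched in Python as a minimal model. This is not the device's implementation: the `Packet` class, the function names, and the example addresses (taken from documentation ranges) are hypothetical. The sketch also shows why the response must return through the LB device: only the LB device can restore the VSIP as the source address that the host expects.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    """Hypothetical packet model that keeps only the IP addresses from Table 1."""
    src: str
    dst: str


def dnat_request(pkt: Packet, vsip: str, server_ip: str) -> Packet:
    """Step 3: replace the VSIP destination with the selected server IP."""
    if pkt.dst != vsip:
        raise ValueError("request is not addressed to the VSIP")
    return replace(pkt, dst=server_ip)


def dnat_response(pkt: Packet, vsip: str) -> Packet:
    """Step 5: replace the server IP source with the VSIP."""
    return replace(pkt, src=vsip)


# Hypothetical addresses for illustration only.
HOST_IP, VSIP, SERVER_IP = "192.0.2.10", "203.0.113.100", "198.51.100.2"

to_server = dnat_request(Packet(HOST_IP, VSIP), VSIP, SERVER_IP)
to_host = dnat_response(Packet(SERVER_IP, HOST_IP), VSIP)
print(to_server)  # Packet(src='192.0.2.10', dst='198.51.100.2')
print(to_host)    # Packet(src='203.0.113.100', dst='192.0.2.10')
```

If the server sent its response directly to the host, the host would receive a reply from Server IP instead of the VSIP, which is why the gateway or static route change described above is required.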

SNAT mode

SNAT-mode server load balancing requires the following configurations:

· Configure the VSIP on a loopback interface on each server.

· Configure a route to the IP addresses in the SNAT address pool.

As a best practice, do not use SNAT-mode server load balancing because its application scope is limited. This chapter does not provide detailed information about SNAT-mode server load balancing.

DNAT + SNAT mode

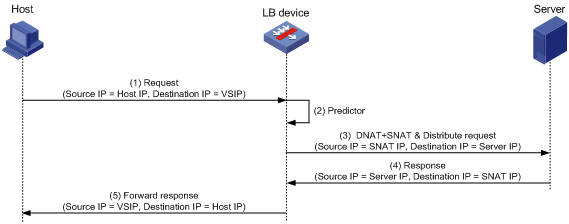

Figure 3 DNAT + SNAT-mode server load balancing workflow

Table 2 Workflow description

| Description | Source IP address | Destination IP address |
|---|---|---|
| 1. The host sends a request. | Host IP | VSIP |
| 2. When the LB device receives the request, it uses a scheduling algorithm to select the server to which it distributes the request. | N/A | N/A |
| 3. The LB device uses DNAT + SNAT to distribute the request, with an IP address from the SNAT address pool as the source IP address and Server IP as the destination IP address. | SNAT IP | Server IP |
| 4. The server receives and processes the request and then sends a response. | Server IP | SNAT IP |
| 5. The LB device receives the response, translates the source IP address to the VSIP and the destination IP address to Host IP, and forwards the response to the requesting host. | VSIP | Host IP |
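The DNAT + SNAT workflow in Table 2 can be sketched as follows. Because several hosts can share one SNAT address, the LB device must remember which host each translated session belongs to before it can perform step 5. This sketch tracks ports as well as addresses for that purpose; the port handling, the round-robin use of pool addresses, the class and method names, and all addresses are assumptions for illustration, not documented device behavior.

```python
from dataclasses import dataclass
from itertools import count, cycle


@dataclass(frozen=True)
class Packet:
    src: str
    src_port: int
    dst: str
    dst_port: int


class DnatSnatSketch:
    """Hypothetical model of the DNAT + SNAT rewriting in Table 2."""

    def __init__(self, vsip: str, snat_ips: list[str]):
        self.vsip = vsip
        self._snat_ips = cycle(snat_ips)   # assumption: pool addresses used in turn
        self._ports = count(10000)         # assumption: simple source port allocator
        # (SNAT IP, SNAT port) -> (host IP, host port, virtual server port)
        self.sessions: dict[tuple[str, int], tuple[str, int, int]] = {}

    def forward_request(self, pkt: Packet, server_ip: str, server_port: int) -> Packet:
        """Step 3: the source becomes an SNAT address, the destination becomes the server."""
        snat_ip, snat_port = next(self._snat_ips), next(self._ports)
        self.sessions[(snat_ip, snat_port)] = (pkt.src, pkt.src_port, pkt.dst_port)
        return Packet(snat_ip, snat_port, server_ip, server_port)

    def forward_response(self, pkt: Packet) -> Packet:
        """Step 5: the source becomes the VSIP, the destination becomes the original host."""
        host_ip, host_port, vs_port = self.sessions[(pkt.dst, pkt.dst_port)]
        return Packet(self.vsip, vs_port, host_ip, host_port)


lb = DnatSnatSketch("203.0.113.100", ["198.51.100.1", "198.51.100.2"])
request = Packet("192.0.2.10", 40000, "203.0.113.100", 80)
to_server = lb.forward_request(request, "10.1.1.2", 8080)
reply = Packet("10.1.1.2", 8080, to_server.src, to_server.src_port)
to_host = lb.forward_response(reply)
print(to_server)  # Packet(src='198.51.100.1', src_port=10000, dst='10.1.1.2', dst_port=8080)
print(to_host)    # Packet(src='203.0.113.100', src_port=80, dst='192.0.2.10', dst_port=40000)
```

Unlike DNAT mode, the server replies to an SNAT address, so responses return to the LB device without any gateway or static route changes on the server other than a route to the SNAT addresses.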

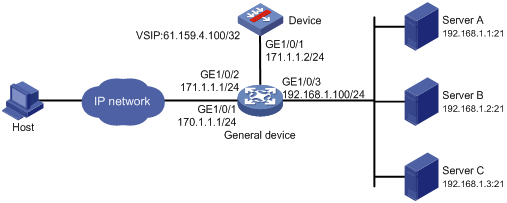

Indirect-mode server load balancing

Indirect-mode network diagram

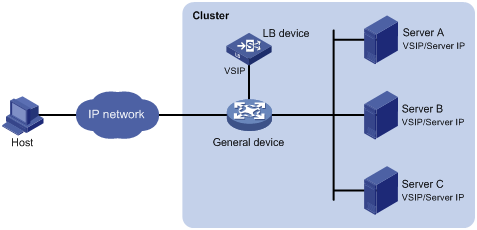

As shown in Figure 4, indirect-mode server load balancing contains the following elements:

· LB device—Distributes different service requests to multiple servers.

· General device—Forwards data according to general forwarding rules.

· Server—Responds to and processes different service requests.

· VSIP—Virtual service IP address of the cluster, used for users to request services.

· Server IP—IP address of a server, used by the LB device to distribute requests.

Indirect-mode workflow

Indirect-mode server load balancing requires configuring the VSIP on both the LB device and the servers. Because the VSIP on a server must not appear in ARP requests or responses, configure the VSIP on a loopback interface of each server.

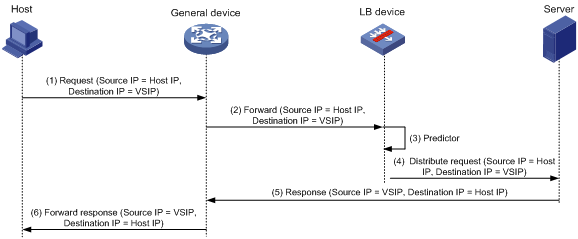

Figure 5 Indirect-mode server load balancing workflow

Table 3 Workflow description

| Description | Source IP address | Destination IP address |
|---|---|---|
| 1. The host sends a request. | Host IP | VSIP |
| 2. The general device receives the request and forwards it to the LB device. Because the servers do not include the VSIP in ARP requests or responses, the general device forwards the request only to the LB device. | Host IP | VSIP |
| 3. When the LB device receives the request, it uses a scheduling algorithm to select the server to which it distributes the request. | N/A | N/A |
| 4. The LB device distributes the request. The source and destination IP addresses in the request packet are not changed. | Host IP | VSIP |
| 5. The server receives and processes the request and then sends a response. | VSIP | Host IP |
| 6. The general device receives the response and forwards it to the requesting host. | VSIP | Host IP |

In indirect mode, the LB device does not forward packets returned by the server.

Server load balancing tasks at a glance

Relationship between configuration items

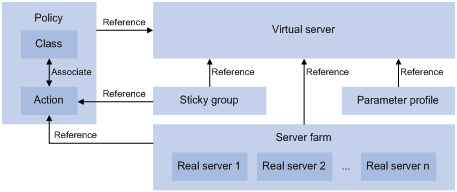

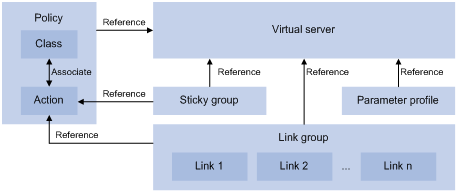

Figure 6 shows the relationship between the following configuration items:

· Server farm—A collection of real servers that contain similar content. A server farm can be referenced by a virtual server or an LB action.

· Real server—An entity on the LB device to process user services.

· Virtual server—A virtual service provided by the LB device to determine whether to perform load balancing for packets received on the LB device. Only the packets that match a virtual server are load balanced.

· LB class—Classifies packets to implement load balancing based on packet type.

· LB action—Drops, forwards, or modifies packets.

· LB policy—Associates an LB class with an LB action. An LB policy can be referenced by a virtual server.

· Sticky group—Uses a sticky method to distribute similar sessions to the same real server. A sticky group can be referenced by a virtual server or an LB action.

· Parameter profile—Defines advanced parameters to process packets. A parameter profile can be referenced by a virtual server.

Figure 6 Relationship between the main configuration items

Tasks at a glance

To configure server load balancing, perform the following tasks:

1. Configuring a server farm

2. Configuring a real server

3. Configuring a virtual server

4. (Optional.) Configuring an LB policy

5. (Optional.) Configuring a sticky group

6. (Optional.) Configuring templates

o Configuring a parameter profile

o Configuring an LB probe template

7. (Optional.) Configuring an LB connection limit policy

8. (Optional.) Configuring the ALG feature

9. (Optional.) Reloading a response file

10. (Optional.) Performing a load balancing test

11. (Optional.) Configuring SNMP notifications and logging for load balancing

o Enabling load balancing logging

Configuring a server farm

You can add real servers that contain similar content to a server farm to facilitate management.

Server farm tasks at a glance

The server farm configuration tasks for Layer 4 and Layer 7 server load balancing are the same.

To configure a server farm, perform the following tasks:

1. Creating a server farm

2. (Optional.) Adding and configuring a server farm member

3. Configuring scheduling algorithms for a server farm

4. Configuring NAT

Choose the following tasks as needed:

o Configuring indirect-mode NAT

o Configuring NAT-mode NAT

5. Setting the availability criteria

6. (Optional.) Enabling the slow online feature

7. (Optional.) Configuring health monitoring

8. (Optional.) Configuring intelligent monitoring

9. (Optional.) Configuring the action to take when a server farm is busy

10. (Optional.) Specifying a fault processing method

Creating a server farm

1. Enter system view.

system-view

2. Create a server farm and enter server farm view.

server-farm server-farm-name

3. (Optional.) Configure a description for the server farm.

description text

By default, no description is configured for the server farm.

Adding and configuring a server farm member

About adding and configuring a server farm member

Perform this task to create a server farm member or add an existing real server as a server farm member in server farm view. You can also specify a server farm for a real server in real server view to achieve the same purpose (see "Creating a real server and specifying a server farm").

After adding a server farm member, you can configure the following parameters and features for the real server in the server farm:

· Weight.

· Priority.

· Connection limits.

· Health monitoring.

· Slow offline.

Server farm member-based scheduling algorithms select the best real server based on these settings.

Adding a server farm member

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Create and add a server farm member and enter server farm member view.

real-server real-server-name port port-number

If the real server already exists, the command adds the existing real server as a server farm member.

4. (Optional.) Configure a description for the server farm member.

description text

By default, no description is configured for the server farm member.

Setting the weight and priority of the server farm member

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Enter server farm member view.

real-server real-server-name port port-number

4. Set the weight of the server farm member.

weight weight-value

The default setting is 100.

5. Set the priority of the server farm member.

priority priority

The default setting is 4.

Setting the connection limits of the server farm member

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Enter server farm member view.

real-server real-server-name port port-number

4. Set the connection rate limit for the server farm member.

rate-limit connection connection-number

The default setting is 0 (the connection rate is not limited).

5. Set the maximum number of connections allowed for the server farm member.

connection-limit max max-number

The default setting is 0 (the maximum number of connections is not limited).
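The two connection limits above can be modeled as an admission check that runs before a new connection is assigned to the member. This is a minimal sketch: the class name is hypothetical, and counting the connection rate in one-second windows is an assumption, because the unit of `rate-limit connection` is not stated here. A value of 0 means "not limited", matching the documented defaults.

```python
class MemberLimitsSketch:
    """Hypothetical admission check for one server farm member.

    rate_limit models `rate-limit connection` and max_connections models
    `connection-limit max`. 0 means "not limited", as in the documented defaults.
    Assumption: the connection rate is counted in one-second windows.
    """

    def __init__(self, rate_limit: int = 0, max_connections: int = 0):
        self.rate_limit = rate_limit
        self.max_connections = max_connections
        self.active = 0
        self._window = None          # current one-second window (whole seconds)
        self._opened_in_window = 0

    def try_open(self, now: float) -> bool:
        """Return True if a new connection at time `now` (in seconds) is admitted."""
        if self.max_connections and self.active >= self.max_connections:
            return False
        window = int(now)
        if window != self._window:
            self._window = window
            self._opened_in_window = 0
        if self.rate_limit and self._opened_in_window >= self.rate_limit:
            return False
        self._opened_in_window += 1
        self.active += 1
        return True

    def close(self) -> None:
        """Record that one active connection has ended."""
        if self.active:
            self.active -= 1


member = MemberLimitsSketch(rate_limit=2, max_connections=3)
results = [member.try_open(t) for t in (0.1, 0.2, 0.3, 1.0, 2.0)]
print(results)  # [True, True, False, True, False]
```

The third attempt fails the rate limit (two connections already opened in that second), and the last attempt fails the maximum connection limit (three connections are active).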

Configuring health monitoring for the server farm member

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Enter server farm member view.

real-server real-server-name port port-number

4. Specify a health monitoring method for the server farm member.

probe template-name [ nqa-template-port ]

By default, no health monitoring method is specified for the server farm member.

You can specify an NQA template or load balancing template for health monitoring. For information about NQA templates, see NQA configuration in Network Management and Monitoring Configuration Guide.

5. Specify the health monitoring success criteria for the server farm member.

success-criteria { all | at-least min-number }

By default, health monitoring succeeds only when all the specified health monitoring methods succeed.

Enabling the slow offline feature for the server farm member

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Enter server farm member view.

real-server real-server-name port port-number

4. Enable the slow offline feature for the server farm member.

slow-shutdown enable

By default, the slow offline feature is disabled.

5. Shut down the server farm member.

shutdown

By default, the server farm member is activated.

Configuring scheduling algorithms for a server farm

About scheduling algorithms for server farms

Perform this task to specify a scheduling algorithm for a server farm and specify the number of real servers to participate in scheduling. The LB device calculates the real servers to process user requests based on the specified scheduling algorithm.

The device provides the following scheduling algorithms for a server farm:

· Source IP address hash algorithm—Hashes the source IP address of user requests and distributes user requests to different real servers according to the hash values.

· Source IP address and port hash algorithm—Hashes the source IP address and port number of user requests and distributes user requests to different real servers according to the hash values.

· Destination IP address hash algorithm—Hashes the destination IP address of user requests and distributes user requests to different real servers according to the hash values.

· HTTP hash algorithm—Hashes the content of user requests and distributes user requests to different real servers according to the hash values.

· Cache Array Routing Protocol hash algorithm—The CARP hash algorithm is an enhancement to the hash algorithm. When the number of available real servers changes, this algorithm minimizes the load changes on the available real servers. This algorithm supports hashing based on source IP address, source IP address and port number, destination IP address, and HTTP content.

· Dynamic round robin—Assigns new connections to real servers based on load weight values calculated by using the memory usage, CPU usage, and disk usage of the real servers. The smaller the load, the greater the weight value. A real server with a greater weight value is assigned more connections. This algorithm can take effect only if you specify an SNMP-DCA NQA template. If no SNMP-DCA NQA template is specified, the non-weighted round robin algorithm is used. For more information about NQA templates, see NQA configuration in Network Management and Monitoring Configuration Guide.

· Weighted least connection algorithm (real server-based)—Always assigns user requests to the real server with the fewest weighted active connections (the total number of active connections in all server farms divided by weight). The weight value used in this algorithm is configured in real server view.

· Weighted least connection algorithm (server farm member-based)—Always assigns user requests to the real server with the fewest weighted active connections (the total number of active connections in the specified server farm divided by weight). The weight value used in this algorithm is configured in server farm member view.

· Random algorithm—Randomly assigns user requests to real servers.

· Least time algorithm—Assigns new connections to real servers based on load weight values calculated by using the response time of the real servers. The shorter the response time, the greater the weight value. A real server with a greater weight value is assigned more connections.

· Round robin algorithm—Assigns user requests to real servers based on the weights of real servers. A higher weight indicates more user requests will be assigned.

· Bandwidth algorithm—Distributes user requests to real servers according to the weights and remaining bandwidth of real servers.

· Maximum bandwidth algorithm—Always distributes user requests to the idle real server that has the largest remaining bandwidth.
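The difference between a plain hash algorithm and the CARP hash algorithm shows up when a real server becomes unavailable. The following sketch compares them. The device's actual hash functions are not documented here, so SHA-256 is an arbitrary stand-in, and the CARP-style pick uses rendezvous (highest random weight) hashing, the highest-score idea that CARP is built on. Server names and client addresses are hypothetical.

```python
import hashlib


def _hash64(text: str) -> int:
    """Stable 64-bit hash (Python's built-in hash() is randomized per process)."""
    return int.from_bytes(hashlib.sha256(text.encode()).digest()[:8], "big")


def modulo_hash_pick(client_ip: str, servers: list[str]) -> str:
    """Plain source IP hash: hash value modulo the number of servers."""
    return servers[_hash64(client_ip) % len(servers)]


def rendezvous_pick(client_ip: str, servers: list[str]) -> str:
    """CARP-style pick: score every server against the key and take the highest score."""
    return max(servers, key=lambda server: _hash64(f"{client_ip}|{server}"))


def remapped_clients(pick, clients, before, after, failed):
    """Count clients that were NOT on the failed server but still changed server."""
    return sum(
        1
        for ip in clients
        if pick(ip, before) != failed and pick(ip, before) != pick(ip, after)
    )


servers = ["rs1", "rs2", "rs3", "rs4"]
survivors = ["rs1", "rs2", "rs3"]          # rs4 becomes unavailable
clients = [f"192.0.2.{i}" for i in range(1, 201)]

print("modulo hash:", remapped_clients(modulo_hash_pick, clients, servers, survivors, "rs4"))
print("rendezvous: ", remapped_clients(rendezvous_pick, clients, servers, survivors, "rs4"))  # 0
```

With the modulo hash, most clients move even though their servers are still available. With the highest-score pick, only the clients of the failed server move, which is the "smallest load changes" property described for the CARP hash algorithm.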

Procedure

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Specify a scheduling algorithm for the server farm.

o Specify a real server-based scheduling algorithm.

predictor { dync-roundrobin | least-connection | least-time | { bandwidth | max-bandwidth } [ inbound | outbound ] }

o Specify a server farm member-based scheduling algorithm.

predictor hash [ carp ] address { destination | source | source-ip-port } [ mask mask-length ] [ prefix prefix-length ]

predictor hash [ carp ] http [ offset offset ] [ start start-string ] [ [ end end-string ] | [ length length ] ]

predictor { least-connection member | random | round-robin }

By default, the scheduling algorithm for the server farm is weighted round robin.

4. Specify the number of real servers to participate in scheduling.

selected-server min min-number max max-number

By default, the real servers with the highest priority participate in scheduling.
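The default behavior (weighted round robin among the real servers with the highest priority) and the member-based weighted least connection algorithm can be sketched as follows. The device's exact round robin sequence is not documented, so this sketch swaps in smooth weighted round robin, a common technique that spreads picks in proportion to weight. It also assumes that a larger priority value means a higher priority and does not model `selected-server`. The class and function names are hypothetical; the default weight (100) and priority (4) come from this document.

```python
from dataclasses import dataclass


@dataclass
class MemberSketch:
    name: str
    weight: int = 100      # documented default for `weight`
    priority: int = 4      # documented default for `priority`
    available: bool = True
    active: int = 0        # active connections in this server farm
    current: int = 0       # running counter used by smooth weighted round robin


def candidates(members):
    """Available members that share the highest priority (assumption: larger value wins)."""
    usable = [m for m in members if m.available and m.weight > 0]
    if not usable:
        return []
    top = max(m.priority for m in usable)
    return [m for m in usable if m.priority == top]


def weighted_round_robin(members):
    """Smooth weighted round robin: picks are spread in proportion to weight."""
    pool = candidates(members)
    if not pool:
        return None
    total = sum(m.weight for m in pool)
    for m in pool:
        m.current += m.weight
    chosen = max(pool, key=lambda m: m.current)
    chosen.current -= total
    return chosen


def least_connection_member(members):
    """Member-based weighted least connection: fewest active connections per unit of weight."""
    pool = candidates(members)
    if not pool:
        return None
    return min(pool, key=lambda m: m.active / m.weight)


rs1 = MemberSketch("rs1", weight=3, active=6)
rs2 = MemberSketch("rs2", weight=1, active=1)
rs3 = MemberSketch("rs3", priority=2)          # lower priority, so never scheduled
farm = [rs1, rs2, rs3]

print([weighted_round_robin(farm).name for _ in range(4)])  # ['rs1', 'rs1', 'rs2', 'rs1']
print(least_connection_member(farm).name)                   # rs2 (1/1 < 6/3)
```

Although rs3 has no active connections, it is excluded from both algorithms because only the highest-priority members participate in scheduling by default.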

Configuring indirect-mode NAT

Restrictions and guidelines

Indirect-mode NAT configuration requires disabling NAT for the server farm.

Procedure

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Disable NAT for the server farm.

transparent enable

By default, NAT is enabled for a server farm.

If the server farm is referenced by a virtual server of the HTTP type, the NAT feature takes effect even if it is disabled.

Configuring NAT-mode NAT

About NAT-mode NAT

The NAT-mode NAT configuration varies by NAT mode.

· For DNAT mode, you only need to enable NAT for the server farm.

· For SNAT mode and DNAT + SNAT mode, you must create an SNAT address pool to be referenced by the server farm.

After the server farm references the SNAT address pool, the LB device replaces the source address of the packets it receives with an SNAT address before forwarding the packets.

Restrictions and guidelines

An SNAT address pool can contain a maximum of 256 IPv4 addresses and 65536 IPv6 addresses. IPv4 or IPv6 addresses in different SNAT address pools cannot overlap.
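Before you enter the `ip range` and `ipv6 range` commands, you can check planned SNAT address pools against these restrictions. The following sketch uses Python's standard `ipaddress` module. The dictionary representation of the pools and the function name are hypothetical; only the limits and the no-overlap rule come from this document.

```python
import ipaddress

MAX_IPV4_PER_POOL = 256     # documented limit
MAX_IPV6_PER_POOL = 65536   # documented limit


def validate_snat_pools(pools: dict[str, list[tuple[str, str]]]) -> dict[str, dict[int, int]]:
    """Check planned SNAT pool ranges against the documented restrictions.

    `pools` maps a pool name to (start, end) address ranges (hypothetical format).
    Returns the number of IPv4 (key 4) and IPv6 (key 6) addresses in each pool.
    """
    sizes: dict[str, dict[int, int]] = {}
    spans = []  # (pool name, IP version, first address as int, last address as int)
    for name, ranges in pools.items():
        sizes[name] = {4: 0, 6: 0}
        for start, end in ranges:
            first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
            if first.version != last.version or last < first:
                raise ValueError(f"{name}: invalid range {start} to {end}")
            sizes[name][first.version] += int(last) - int(first) + 1
            spans.append((name, first.version, int(first), int(last)))
        if sizes[name][4] > MAX_IPV4_PER_POOL or sizes[name][6] > MAX_IPV6_PER_POOL:
            raise ValueError(f"{name}: too many addresses")
    # Ranges in different pools must not overlap.
    for i, (name_a, ver_a, lo_a, hi_a) in enumerate(spans):
        for name_b, ver_b, lo_b, hi_b in spans[i + 1:]:
            if name_a != name_b and ver_a == ver_b and lo_a <= hi_b and lo_b <= hi_a:
                raise ValueError(f"SNAT pools {name_a} and {name_b} overlap")
    return sizes


print(validate_snat_pools({
    "pool1": [("198.51.100.0", "198.51.100.255")],
    "pool2": [("2001:db8::1", "2001:db8::1:0")],
}))
# {'pool1': {4: 256, 6: 0}, 'pool2': {4: 0, 6: 65536}}
```

Both example pools sit exactly at their limits; extending pool1 to 198.51.101.0 (257 addresses) or adding a second pool that includes 198.51.100.200 would raise an error.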

Configuring DNAT

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Enable NAT for the server farm.

undo transparent enable

By default, NAT is enabled for a server farm.

If the server farm is referenced by a virtual server of the HTTP type, the NAT feature takes effect even if it is disabled.

4. (Optional.) Specify an interface for sending gratuitous ARP packets and ND packets.

arp-nd interface interface-type interface-number

By default, no interface is specified for sending gratuitous ARP packets and ND packets.

Configuring SNAT and DNAT+SNAT

1. Enter system view.

system-view

2. Create an SNAT address pool and enter SNAT address pool view.

loadbalance snat-pool pool-name

3. (Optional.) Configure a description for the SNAT address pool.

description text

By default, no description is configured for an SNAT address pool.

4. Specify an address range for the SNAT address pool.

IPv4:

ip range start start-ipv4-address end end-ipv4-address

IPv6:

ipv6 range start start-ipv6-address end end-ipv6-address

By default, no address range is specified for an SNAT address pool.

5. Return to system view.

quit

6. Enter server farm view.

server-farm server-farm-name

7. Enable NAT for the server farm.

undo transparent enable

By default, NAT is enabled for a server farm.

If a server farm is referenced by a virtual server of the HTTP type, the NAT feature takes effect even when it is disabled.

8. Specify the SNAT address pool to be referenced by the server farm.

snat-pool pool-name

By default, no SNAT address pool is referenced by a server farm.

9. (Optional.) Specify an interface for sending gratuitous ARP packets and ND packets.

arp-nd interface interface-type interface-number

By default, no interface is specified for sending gratuitous ARP packets and ND packets.

Setting the availability criteria

About setting the availability criteria

Perform this task to set the criteria (lower percentage and upper percentage) that determine whether a server farm is available. This helps implement traffic switchover between the master and backup server farms.

· When the ratio of available real servers to all real servers in the master server farm is smaller than the lower percentage, traffic is switched to the backup server farm.

· When the ratio of available real servers to all real servers in the master server farm is greater than the upper percentage, traffic is switched back to the master server farm.
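The two thresholds form a hysteresis band: between the lower and upper percentages, traffic stays on whichever server farm is currently in use, which prevents rapid switching back and forth. The following sketch models that decision. The function name and the example values (lower 50, upper 80, 10 real servers) are hypothetical.

```python
def next_farm(current: str, available: int, total: int, lower: float, upper: float) -> str:
    """Return "master" or "backup" for the next decision.

    `lower` and `upper` are percentages, as in `activate lower ... upper ...`.
    """
    percent = 100.0 * available / total if total else 0.0
    if current == "master" and percent < lower:
        return "backup"   # master farm fell below the lower percentage
    if current == "backup" and percent > upper:
        return "master"   # master farm recovered above the upper percentage
    return current        # inside the band: keep the current farm


state = "master"
history = []
for up in (10, 4, 6, 9):            # available real servers out of 10 in the master farm
    state = next_farm(state, up, 10, lower=50, upper=80)
    history.append(state)
print(history)  # ['master', 'backup', 'backup', 'master']
```

At 60 percent the master farm is above the lower percentage but not above the upper percentage, so traffic remains on the backup server farm.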

Procedure

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Set the criteria to determine whether the server farm is available.

activate lower lower-percentage upper upper-percentage

By default, a server farm is available as long as at least one of its real servers is available.

Enabling the slow online feature

About the slow online feature

The real servers newly added to a server farm might not be able to immediately process large numbers of services assigned by the LB device. To resolve this issue, enable the slow online feature for the server farm. The feature uses the standby timer and ramp-up timer. When the real servers are brought online, the LB device does not assign any services to the real servers until the standby timer expires.

When the standby timer expires, the ramp-up timer starts. While the ramp-up timer is running, the LB device gradually increases the amount of services assigned to the real servers based on their processing capability, until the ramp-up timer expires.

Procedure

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Enable the slow online feature for the server farm.

slow-online [ standby-time standby-time ramp-up-time ramp-up-time ]

By default, the slow online feature is disabled for the server farm.
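A minimal Python sketch of the two slow online timers follows. The text does not say how fast the service amount grows during the ramp-up time, so this sketch assumes a linear ramp. The function name and the returned "share" (the fraction of a fully online server's normal share of new services) are hypothetical.

```python
def slow_online_share(elapsed: float, standby_time: float, ramp_up_time: float) -> float:
    """Fraction (0.0 to 1.0) of its normal share of new services that a newly
    added real server receives `elapsed` seconds after it comes online.
    Assumes a linear ramp, which the guide does not specify."""
    if elapsed < standby_time:
        return 0.0  # standby timer running: no services are assigned
    ramp_elapsed = elapsed - standby_time
    if ramp_up_time <= 0 or ramp_elapsed >= ramp_up_time:
        return 1.0  # ramp-up timer expired: normal share
    return ramp_elapsed / ramp_up_time  # ramp-up timer running: partial share


for t in (5, 15, 20, 40):
    print(t, slow_online_share(t, standby_time=10, ramp_up_time=20))
```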

Configuring health monitoring

About configuring health monitoring

Perform this task to enable health monitoring to detect the availability of real servers.

Restrictions and guidelines

The health monitoring configuration in real server view takes precedence over the configuration in server farm view.

You can specify an NQA template or load balancing template for health monitoring. For information about NQA templates, see NQA configuration in Network Management and Monitoring Configuration Guide.

Procedure

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Specify a health monitoring method for the server farm.

probe template-name [ nqa-template-port ]

By default, no health monitoring method is specified for the server farm.

4. Specify the health monitoring success criteria for the server farm.

success-criteria { all | at-least min-number }

By default, health monitoring succeeds only when all the specified health monitoring methods succeed.
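The two forms of success-criteria can be read as a vote over the results of the configured health monitoring methods. The following Python sketch assumes each method reports a simple success or failure; the function name is hypothetical.

```python
from typing import Optional, Sequence


def health_check_passes(results: Sequence[bool], at_least: Optional[int] = None) -> bool:
    """Combine the results of the configured health monitoring methods.

    at_least=None models `success-criteria all` (the default);
    an integer models `success-criteria at-least min-number`.
    """
    if at_least is None:
        return all(results)           # every method must succeed
    return sum(results) >= at_least   # True counts as 1, False as 0


probes = [True, False, True]          # e.g. three probe templates
print(health_check_passes(probes))               # False: one method failed
print(health_check_passes(probes, at_least=2))   # True: two methods succeeded
```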

Configuring intelligent monitoring

About intelligent monitoring

Intelligent monitoring identifies the health of server farm members by counting the number of RST packets or zero-window packets sent by each server farm member. Upon packet threshold violation, a protection action is taken. This feature is implemented by referencing a TCP-RST or TCP zero-window probe template in server farm view.

You can use the following methods to recover a server farm member placed in Auto shutdown state by this feature:

· Set an automatic recovery time in server farm view so that the server farm member recovers automatically.

· Manually recover the server farm member.

Restrictions and guidelines

A real server that has been shut down or placed in busy state because of a packet threshold violation is restored to the normal state immediately after the referenced probe template is deleted.

Prerequisites

Before configuring this feature, configure an LB probe template (see "Configuring an LB probe template").

Specifying an LB probe template for a server farm

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Specify an LB probe template for the server farm.

probe-template { tcp-rst | tcp-zero-window } template-name

By default, no LB probe template is specified for a server farm.

4. (Optional.) Set the automatic recovery time.

auto-shutdown recovery-time recovery-time

By default, the automatic recovery time is 0 seconds, which means that a server farm member placed in Auto shutdown state does not automatically recover.

Manually recovering a real server in Auto shutdown state

1. Enter system view.

system-view

2. Enter real server view.

real-server real-server-name

3. Manually recover the real server.

recover-from-auto-shutdown

Manually recovering a server farm member in Auto shutdown state

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Enter server farm member view.

real-server real-server-name port port-number

4. Manually recover the server farm member.

recover-from-auto-shutdown

Configuring the action to take when a server farm is busy

About configuring the action to take when a server farm is busy

A server farm is considered busy when all its real servers are busy. You can configure one of the following actions:

· drop—Stops assigning client requests to the server farm. If the LB policy that uses the server farm is configured with the action of matching the next rule, the device compares client requests with the next rule. Otherwise, the device drops the client requests.

· enqueue—Stops assigning client requests to the server farm and places new client requests in a wait queue. New client requests are dropped when the queue is full (the configured queue length is reached). Client requests that stay in the queue longer than the configured timeout are aged out.

· force—Forcibly assigns client requests to the real servers in the server farm even though all of them are busy.

The device determines whether a real server is busy based on the following factors:

· Maximum number of connections.

· Maximum number of connections per second.

· Maximum number of HTTP requests per second.

· Maximum bandwidth.

· SNMP-DCA probe result.
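The following Python sketch models how the three busy actions treat a new client request. It is illustrative only: the class and method names are hypothetical, each real server's busy state (derived from the factors above) is passed in as a boolean, and neither the "match the next rule" branch of drop nor the dequeuing of waiting requests when a real server becomes free is modeled.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ServerFarmBusyAction:
    action: str                 # "drop", "enqueue", or "force"
    queue_length: int = 0       # enqueue: maximum number of waiting requests
    timeout: float = 0.0        # enqueue: seconds a request may wait
    queue: deque = field(default_factory=deque)  # (request, enqueue_time)

    def age_out(self, now: float) -> None:
        # Remove requests that have waited longer than the timeout.
        while self.queue and now - self.queue[0][1] > self.timeout:
            self.queue.popleft()

    def handle(self, request: str, now: float, servers_busy: list) -> str:
        # A server farm is busy only when all of its real servers are busy.
        if not all(servers_busy):
            return "assign"
        if self.action == "force":
            return "assign"     # assign anyway, even though every server is busy
        if self.action == "enqueue":
            self.age_out(now)
            if len(self.queue) >= self.queue_length:
                return "drop"   # queue is full
            self.queue.append((request, now))
            return "queued"
        return "drop"           # drop (next-rule matching not modeled)


farm = ServerFarmBusyAction("enqueue", queue_length=1, timeout=5.0)
print(farm.handle("req1", 0.0, [True, True]))   # queued
print(farm.handle("req2", 1.0, [True, True]))   # drop: queue full
print(farm.handle("req3", 10.0, [True, True]))  # queued: req1 aged out
```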

Procedure

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Configure the action to take when the server farm is busy.

busy-action { drop | enqueue length length timeout timeout-value | force }

The default action is drop.

Specifying a fault processing method

About fault processing methods

Perform this task to specify one of the following fault processing methods for a server farm:

· Keep—Does not actively terminate the connection with the failed real server. Whether the connection is kept or terminated depends on the timeout mechanism of the protocol.

· Reschedule—Redirects the connection to another available real server in the server farm.

· Reset—Terminates the connection with the failed real server by sending RST packets (for TCP packets) or ICMP unreachable packets (for other types of packets).
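As a rough illustration, the Python function below maps each fail-action keyword to what happens to a connection whose real server has failed. The function name and return strings are hypothetical. Picking the first available real server stands in for the configured scheduling algorithm, and because this section does not describe the case where no other real server is available, the sketch returns None for it.

```python
from typing import Optional, Sequence


def fail_action(method: str, protocol: str, available: Sequence[str]) -> Optional[str]:
    """Outcome for one connection to a failed real server."""
    if method == "keep":
        # Not actively terminated; the protocol's own timeout decides.
        return "keep"
    if method == "reschedule":
        # Simplification: take the first available real server.
        return f"redirect to {available[0]}" if available else None
    if method == "reset":
        # RST for TCP, ICMP unreachable for other packet types.
        return "send TCP RST" if protocol == "tcp" else "send ICMP unreachable"
    raise ValueError(f"unknown fail-action: {method}")


print(fail_action("reset", "udp", []))                   # send ICMP unreachable
print(fail_action("reschedule", "tcp", ["rs2", "rs3"]))  # redirect to rs2
```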

Procedure

1. Enter system view.

system-view

2. Enter server farm view.

server-farm server-farm-name

3. Specify a fault processing method for the server farm.

fail-action { keep | reschedule | reset }

By default, the fault processing method is keep, and all existing connections are kept.

Configuring a real server

A real server is an entity configured on the LB device to process user services. A real server can belong to multiple server farms, and a server farm can contain multiple real servers.

Real server tasks at a glance

The real server configuration tasks for Layer 4 and Layer 7 server load balancing are the same.

To configure a real server, perform the following tasks:

1. Creating a real server and specifying a server farm

2. Specifying an IP address and port number

3. Setting a weight and priority

4. (Optional.) Configuring the bandwidth and connection parameters

5. (Optional.) Configuring health monitoring

6. (Optional.) Enabling the slow offline feature

7. (Optional.) Setting the bandwidth ratio and maximum expected bandwidth

8. (Optional.) Configuring a VPN instance for a real server

- Specifying a VPN instance

- Disabling VPN instance inheritance

Creating a real server and specifying a server farm

1. Enter system view.

system-view

2. Create a real server and enter real server view.

real-server real-server-name

3. (Optional.) Configure a description for the real server.

description text

By default, no description is configured for the real server.

4. Specify a server farm for the real server.

server-farm server-farm-name

By default, the real server does not belong to any server farms.

Specifying an IP address and port number

1. Enter system view.

system-view

2. Enter real server view.

real-server real-server-name

3. Specify an IP address for the real server.

IPv4:

ip address ipv4-address

IPv6:

ipv6 address ipv6-address

By default, no IP address is specified for the real server.

4. Specify the port number for the real server.

port port-number

By default, the port number of the real server is 0, which means that packets keep their original port numbers.

Setting a weight and priority

About setting a weight and priority

Perform this task to set the weight of a real server, which is used by the weighted round robin and weighted least connection algorithms, and the scheduling priority of the real server in the server farm.

Procedure

1. Enter system view.

system-view

2. Enter real server view.

real-server real-server-name

3. Set a weight for the real server.

weight weight-value

By default, the weight of the real server is 100.

4. Set a priority for the real server.

priority priority

By default, the priority of the real server is 4.
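To show how the weight influences scheduling, here is a Python sketch of smooth weighted round robin, one well-known weighted round robin variant. The guide does not state which variant the device uses, so treat the exact order as illustrative. The function name is hypothetical, and the scheduling priority is not modeled (all real servers are assumed to have the same priority).

```python
def smooth_wrr(weights: dict, picks: int) -> list:
    """Return the real server chosen for each of `picks` new connections.

    Over every sum(weights) picks, each real server is chosen exactly
    `weight` times, and the choices are interleaved rather than bunched.
    """
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    order = []
    for _ in range(picks):
        for name, weight in weights.items():
            current[name] += weight  # every server earns its weight
        # Highest running score wins; ties go to the first server inserted.
        chosen = max(current, key=current.get)
        current[chosen] -= total     # the winner pays back the total
        order.append(chosen)
    return order


# Two real servers with the default weight 100 alternate.
print(smooth_wrr({"rs1": 100, "rs2": 100}, 4))      # ['rs1', 'rs2', 'rs1', 'rs2']
# Weights 5:1:1 spread rs1 across the cycle.
print(smooth_wrr({"rs1": 5, "rs2": 1, "rs3": 1}, 7))
# ['rs1', 'rs1', 'rs2', 'rs1', 'rs3', 'rs1', 'rs1']
```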

Configuring the bandwidth and connection parameters

About configuring the bandwidth and connection parameters

This task allows you to configure the following parameters:

· Maximum bandwidth.

· Maximum number of connections.

· Maximum number of connections per second.

· Maximum number of HTTP requests per second.

If any of the preceding thresholds is exceeded, the real server is placed in busy state.

Procedure

1. Enter system view.

system-view

2. Enter real server view.

real-server real-server-name

3. Set the maximum bandwidth for the real server.

rate-limit bandwidth [ inbound | outbound ] bandwidth-value

By default, the maximum bandwidth, inbound bandwidth, and outbound bandwidth are 0 KBps for the real server. The bandwidths are not limited.

4. Set the maximum number of connections for the real server.

connection-limit max max-number

By default, the maximum number of connections is 0 for the real server. The number is not limited.

5. Set the maximum number of connections per second for the real server.

rate-limit connection connection-number

By default, the maximum number of connections per second is 0 for the real server. The number is not limited.

6. Set the maximum number of HTTP requests per second for the real server.

rate-limit http-request request-number

By default, the maximum number of HTTP requests per second is 0 for the real server. The number is not limited.
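The busy rule for a real server can be sketched as a set of threshold checks in which 0 (the default) means "not limited". In this Python sketch the class, field, and function names are hypothetical, the current measurements are passed in directly, "exceeded" is read as strictly greater than the limit, and the separate inbound and outbound bandwidth limits are not modeled.

```python
from dataclasses import dataclass


@dataclass
class RealServerLimits:
    bandwidth: int = 0              # rate-limit bandwidth, KBps
    max_connections: int = 0        # connection-limit max
    connections_per_sec: int = 0    # rate-limit connection
    http_requests_per_sec: int = 0  # rate-limit http-request


def exceeds(value: float, limit: int) -> bool:
    # A limit of 0 means the parameter is not limited.
    return limit > 0 and value > limit


def is_busy(limits: RealServerLimits, bandwidth: float, connections: int,
            connections_per_sec: float, http_requests_per_sec: float) -> bool:
    # Exceeding any single threshold places the real server in busy state.
    return (exceeds(bandwidth, limits.bandwidth)
            or exceeds(connections, limits.max_connections)
            or exceeds(connections_per_sec, limits.connections_per_sec)
            or exceeds(http_requests_per_sec, limits.http_requests_per_sec))


limits = RealServerLimits(max_connections=1000)
print(is_busy(limits, bandwidth=50000, connections=1001,
              connections_per_sec=0, http_requests_per_sec=0))  # True
```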

Configuring health monitoring

About configuring health monitoring

Perform this task to enable health monitoring to detect the availability of a real server.

Restrictions and guidelines

The health monitoring configuration in real server view takes precedence over the configuration in server farm view.

Procedure

1. Enter system view.

system-view

2. Enter real server view.

real-server real-server-name

3. Specify a health monitoring method for the real server.

probe template-name [ nqa-template-port ]

By default, no health monitoring method is specified for the real server.

4. Specify the health monitoring success criteria for the real server.

success-criteria { all | at-least min-number }

By default, the health monitoring succeeds only when all the specified health monitoring methods succeed.

Enabling the slow offline feature

About the slow offline feature

The shutdown command immediately terminates the existing connections of a real server. With the slow offline feature enabled, the existing connections are allowed to age out instead, and no new connections are established to the real server.

Restrictions and guidelines

To enable the slow offline feature for a real server, you must execute the slow-shutdown enable command and then the shutdown command. If you execute the shutdown command and then the slow-shutdown enable command, the slow offline feature does not take effect and the real server is shut down.

Procedure

1. Enter system view.

system-view

2. Enter real server view.

real-server real-server-name

3. Enable the slow offline feature for the real server.

slow-shutdown enable

By default, the slow offline feature is disabled.

4. Shut down the real server.

shutdown

By default, the real server is activated.

Setting the bandwidth ratio and maximum expected bandwidth

About setting the bandwidth ratio and maximum expected bandwidth

When the traffic exceeds the maximum expected bandwidth multiplied by the bandwidth ratio of a real server, new traffic is not distributed to the real server. When the traffic drops below the maximum expected bandwidth multiplied by the bandwidth recovery ratio of the real server, the real server participates in scheduling again.

In addition to being used for link protection, the maximum expected bandwidth is used for remaining bandwidth calculation in the bandwidth algorithm and maximum bandwidth algorithm.

Procedure

1. Enter system view.

system-view

2. Enter real server view.

real-server real-server-name

3. Set the bandwidth ratio.

bandwidth [ inbound | outbound ] busy-rate busy-rate-number [ recovery recovery-rate-number ]

By default, the total bandwidth ratio is 70.

4. Set the maximum expected bandwidth.

max-bandwidth [ inbound | outbound ] bandwidth-value

By default, the maximum expected bandwidth, maximum uplink expected bandwidth, and maximum downlink expected bandwidth are 0 KBps. The bandwidths are not limited.
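The bandwidth ratio and recovery ratio form another hysteresis loop. The Python sketch below assumes that busy-rate-number and recovery-rate-number are percentages of the maximum expected bandwidth (consistent with the default ratio of 70, but not stated explicitly here). It ignores the inbound and outbound variants, uses a hypothetical function name, and treats a maximum expected bandwidth of 0 as "not limited".

```python
def update_bandwidth_busy(traffic: float, max_expected: float, busy_rate: float,
                          recovery_rate: float, busy: bool) -> bool:
    """Return the new busy state of a real server.

    traffic and max_expected use the same unit (for example, KBps);
    busy_rate and recovery_rate are percentages.
    """
    if max_expected <= 0:
        return False  # 0 means the bandwidth is not limited
    if not busy and traffic > max_expected * busy_rate / 100:
        return True   # stop distributing new traffic to this real server
    if busy and traffic < max_expected * recovery_rate / 100:
        return False  # the real server participates in scheduling again
    return busy


# Max expected bandwidth 1000 KBps, bandwidth ratio 70, recovery ratio 60 (example values).
print(update_bandwidth_busy(800, 1000, 70, 60, busy=False))  # True: above 700
print(update_bandwidth_busy(650, 1000, 70, 60, busy=True))   # True: still above 600
print(update_bandwidth_busy(500, 1000, 70, 60, busy=True))   # False: below 600
```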

Specifying a VPN instance

1. Enter system view.

system-view

2. Enter real server view.

real-server real-server-name

3. Specify a VPN instance for the real server.

vpn-instance vpn-instance-name

By default:

- A real server belongs to the public network if VPN instance inheritance is disabled.

- A real server belongs to the VPN instance of its virtual server if VPN instance inheritance is enabled.

Disabling VPN instance inheritance

About VPN instance inheritance

When VPN instance inheritance is enabled, a real server inherits the VPN instance of its virtual server if no VPN instance is specified for the real server. When VPN instance inheritance is disabled, a real server belongs to the public network if no VPN instance is specified for the real server.

Procedure

1. Enter system view.

system-view

2. Enter real server view.

real-server real-server-name

3. Disable VPN instance inheritance for the real server.

inherit vpn-instance disable

By default, VPN instance inheritance is enabled for a real server.
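The VPN instance rules for a real server reduce to a short lookup. In this Python sketch the function name is hypothetical and None stands for the public network.

```python
from typing import Optional


def real_server_vpn(configured: Optional[str], virtual_server_vpn: Optional[str],
                    inherit: bool = True) -> Optional[str]:
    """Return the VPN instance a real server belongs to (None = public network)."""
    if configured is not None:
        return configured          # vpn-instance configured on the real server
    if inherit:
        return virtual_server_vpn  # inherit from the virtual server (default)
    return None                    # inherit vpn-instance disable: public network


print(real_server_vpn(None, "vpn1"))                 # vpn1
print(real_server_vpn(None, "vpn1", inherit=False))  # None
```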

Configuring a virtual server

A virtual server is a virtual service provided by the LB device to determine whether to perform load balancing for packets received on the LB device. Only the packets that match a virtual server are load balanced.

Restrictions and guidelines

If both the "Specifying server farms" and "Specifying an LB policy" tasks are configured, packets are processed by the LB policy first. If the processing fails, the packets are processed by the specified server farms.

Virtual server tasks at a glance for Layer 4 server load balancing

1. Creating a virtual server

2. Configuring a TCP virtual server to operate at Layer 7

3. Specifying the VSIP and port number

4. (Optional.) Specifying a VPN instance

5. Configure a packet processing policy

Choose one or both of the following tasks:

- Specifying server farms

- Specifying an LB policy

6. (Optional.) Configuring the bandwidth and connection parameters

7. (Optional.) Enabling per-packet load balancing for UDP traffic

8. (Optional.) Specifying a parameter profile or LB connection limit policy

- Specifying a parameter profile

- Applying an LB connection limit policy

9. (Optional.) Configuring hot backup

10. (Optional.) Enabling IP address advertisement for a virtual server

11. (Optional.) Specifying an interface for sending gratuitous ARP packets and ND packets

Virtual server tasks at a glance for Layer 7 server load balancing

1. Creating a virtual server

2. Specifying the VSIP and port number

3. (Optional.) Specifying a VPN instance

4. Configure a packet processing policy

Choose one or both of the following tasks:

- Specifying server farms

- Specifying an LB policy

5. (Optional.) Configuring the bandwidth and connection parameters

6. (Optional.) Configuring the HTTP redirection feature

7. (Optional.) Specifying a parameter profile or policy

- Specifying a parameter profile

- Applying an LB connection limit policy

8. (Optional.) Configuring hot backup

9. (Optional.) Enabling IP address advertisement for a virtual server

10. (Optional.) Specifying an interface for sending gratuitous ARP packets and ND packets

Creating a virtual server

About virtual server types

The virtual server types of Layer 4 server load balancing include IP, TCP, and UDP.

The virtual server types of Layer 7 server load balancing include fast HTTP, HTTP, RADIUS, TCP-based SIP, and UDP-based SIP. For information about SIP, see Voice Configuration Guide.

Restrictions and guidelines

Do not use fast HTTP virtual servers together with the TCP client verification feature. For more information about the TCP client verification feature, see attack detection and prevention configuration in Security Configuration Guide.

Creating a virtual server for Layer 4 server load balancing

1. Enter system view.

system-view

2. Create an IP, TCP, or UDP virtual server and enter virtual server view.

virtual-server virtual-server-name type { ip | tcp | udp }

When you create a virtual server, you must specify the virtual server type. You can enter an existing virtual server view without specifying the virtual server type. If you specify the virtual server type when entering an existing virtual server view, the virtual server type must be the one specified when you create the virtual server.

3. (Optional.) Configure a description for the virtual server.

description text

By default, no description is configured for the virtual server.

Creating a virtual server for Layer 7 server load balancing

1. Enter system view.

system-view

2. Create a fast HTTP, RADIUS, HTTP, TCP-based SIP, or UDP-based SIP virtual server and enter virtual server view.

virtual-server virtual-server-name type { fast-http | http | radius | sip-tcp | sip-udp }

When you create a virtual server, you must specify the virtual server type. You can enter an existing virtual server view without specifying the virtual server type. If you specify the virtual server type when entering an existing virtual server view, the virtual server type must be the one specified when you create the virtual server.

3. (Optional.) Configure a description for the virtual server.

description text

By default, no description is configured for the virtual server.

Configuring a TCP virtual server to operate at Layer 7

1. Enter system view.

system-view

2. Enter TCP virtual server view.

virtual-server virtual-server-name

3. Configure the TCP virtual server to operate at Layer 7.

application-mode enable

By default, a TCP virtual server operates at Layer 4.

Specifying the VSIP and port number

Restrictions and guidelines

Do not specify the same VSIP and port number for virtual servers of the fast HTTP, HTTP, IP, RADIUS, TCP-based SIP, and TCP types.

Do not specify the same VSIP and port number for virtual servers of the UDP and UDP-based SIP types.

Specifying the VSIP and port number for Layer 4 server load balancing

1. Enter system view.

system-view

2. Enter IP, TCP, or UDP virtual server view.

virtual-server virtual-server-name

3. Specify the VSIP for the virtual server.

IPv4:

virtual ip address ipv4-address [ mask-length | mask ]

IPv6:

virtual ipv6 address ipv6-address [ prefix-length ]

By default, no IP address is specified for the virtual server.

4. Specify the port number for the virtual server.

port { port-number [ to port-number ] } &<1-n>

By default, the port number is 0 (meaning any port number) for the virtual server of the IP, TCP, or UDP type.
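The VSIP and port restrictions for virtual servers split the virtual server types into two groups, and only virtual servers in the same group clash. A Python sketch of that check follows. The function and set names are hypothetical, the type names reuse the keywords of the virtual-server command, and each virtual server is simplified to a single port (port ranges and port 0 are not modeled).

```python
# Types that must not share a VSIP and port number with each other.
TCP_LIKE = {"fast-http", "http", "ip", "radius", "sip-tcp", "tcp"}
UDP_LIKE = {"udp", "sip-udp"}


def vsip_port_conflict(a: tuple, b: tuple) -> bool:
    """a and b are (type, vsip, port) tuples for two virtual servers."""
    type_a, vsip_a, port_a = a
    type_b, vsip_b, port_b = b
    if (vsip_a, port_a) != (vsip_b, port_b):
        return False
    # Conflict only if both types belong to the same group.
    return {type_a, type_b} <= TCP_LIKE or {type_a, type_b} <= UDP_LIKE


print(vsip_port_conflict(("http", "10.1.1.1", 80), ("tcp", "10.1.1.1", 80)))  # True
print(vsip_port_conflict(("http", "10.1.1.1", 80), ("udp", "10.1.1.1", 80)))  # False
```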

Specifying the VSIP and port number for Layer 7 server load balancing

1. Enter system view.

system-view

2. Enter fast HTTP, HTTP, RADIUS, TCP-based SIP, or UDP-based SIP virtual server view.

virtual-server virtual-server-name

3. Specify the VSIP for the virtual server.

IPv4:

virtual ip address ipv4-address [ mask-length | mask ]

IPv6:

virtual ipv6 address ipv6-address [ prefix-length ]

By default, no IP address is specified for the virtual server.

4. Specify the port number for the virtual server.

port { port-number [ to port-number ] } &<1-n>

By default:

- The port number is 80 for the virtual server of the fast HTTP or HTTP type.

- The port number is 0 (meaning any port number) for the virtual server of the RADIUS type.

- The port number is 5060 for the virtual server of the TCP-based SIP or UDP-based SIP type.

If the virtual server has referenced an SSL policy, you must specify a non-default port number (typically 443) for the virtual server.
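The default port numbers for Layer 7 virtual servers, together with the SSL guideline, can be summarized in a small Python helper. The dictionary and function names are hypothetical, and the ValueError is only a way to flag the guideline in the sketch, not a message the device produces.

```python
from typing import Optional

# Default port numbers for Layer 7 virtual server types (virtual-server keywords).
DEFAULT_PORTS = {"fast-http": 80, "http": 80, "radius": 0, "sip-tcp": 5060, "sip-udp": 5060}


def effective_port(vs_type: str, configured: Optional[int] = None,
                   has_ssl_policy: bool = False) -> int:
    port = DEFAULT_PORTS[vs_type] if configured is None else configured
    if has_ssl_policy and port == DEFAULT_PORTS[vs_type]:
        # With an SSL policy, a non-default port (typically 443) is required.
        raise ValueError(f"{vs_type} virtual server with an SSL policy needs a non-default port")
    return port  # 0 means any port number


print(effective_port("http"))                            # 80
print(effective_port("http", 443, has_ssl_policy=True))  # 443
```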

Specifying a VPN instance

1. Enter system view.

system-view

2. Enter virtual server view.

virtual-server virtual-server-name

3. Specify a VPN instance for the virtual server.

vpn-instance vpn-instance-name

By default, a virtual server belongs to the public network.

Specifying server farms

About specifying server farms

When the primary server farm is available (contains available real servers), the virtual server forwards packets through the primary server farm. When the primary server farm is not available, the virtual server forwards packets through the backup server farm.

Procedure

1. Enter system view.

system-view

2. Enter virtual server view.

virtual-server virtual-server-name

3. Specify server farms.

default server-farm server-farm-name [ backup backup-server-farm-name ] [ sticky sticky-name ]

By default, no server farm is specified for the virtual server.
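Combined with the guideline at the start of "Configuring a virtual server" (the LB policy is tried before the specified server farms), server farm selection for a packet can be sketched in Python as follows. All names are hypothetical. policy_farm is None when no LB policy is referenced or the LB policy fails to process the packet. This section does not cover the case where neither the primary nor the backup server farm is available, so the sketch returns None for it.

```python
from typing import Optional, Set


def select_server_farm(policy_farm: Optional[str], primary: Optional[str],
                       backup: Optional[str], available: Set[str]) -> Optional[str]:
    if policy_farm is not None:
        return policy_farm        # the LB policy processed the packet
    if primary is not None and primary in available:
        return primary            # primary server farm (default server-farm)
    if backup is not None and backup in available:
        return backup             # backup server farm
    return None


print(select_server_farm(None, "sf1", "sf2", {"sf1", "sf2"}))  # sf1
print(select_server_farm(None, "sf1", "sf2", {"sf2"}))         # sf2
```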

Specifying an LB policy

About specifying an LB policy

By referencing an LB policy, the virtual server load balances matching packets based on the packet contents.

Procedure

1. Enter system view.

system-view

2. Enter virtual server view.

virtual-server virtual-server-name

3. Specify an LB policy for the virtual server.

lb-policy policy-name

By default, the virtual server does not reference any LB policies.

A virtual server can only reference a policy profile of the specified type. For example, a virtual server of the fast HTTP or HTTP type can reference a policy profile of the generic type or HTTP type. A virtual server of the IP, SIP, TCP, or UDP type can only reference a policy profile of the generic type. A virtual server of the RADIUS type can reference a policy profile of the generic or RADIUS type.
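The type compatibility rules above fit in a lookup table. In this Python sketch the table and function names are hypothetical, type names reuse the virtual-server and loadbalance keywords, and "SIP type" is assumed to cover both TCP-based and UDP-based SIP.

```python
# LB policy types that each virtual server type may reference.
ALLOWED_POLICY_TYPES = {
    "fast-http": {"generic", "http"},
    "http": {"generic", "http"},
    "radius": {"generic", "radius"},
    "ip": {"generic"},
    "sip-tcp": {"generic"},
    "sip-udp": {"generic"},
    "tcp": {"generic"},
    "udp": {"generic"},
}


def can_reference(vs_type: str, policy_type: str) -> bool:
    return policy_type in ALLOWED_POLICY_TYPES.get(vs_type, set())


print(can_reference("http", "http"))  # True
print(can_reference("tcp", "http"))   # False
```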

Configuring the bandwidth and connection parameters

1. Enter system view.

system-view

2. Enter virtual server view.

virtual-server virtual-server-name

3. Set the maximum bandwidth for the virtual server.

rate-limit bandwidth [ inbound | outbound ] bandwidth-value

By default, the maximum bandwidth, inbound bandwidth, and outbound bandwidth are 0 KBps for the virtual server. The bandwidths are not limited.

4. Set the maximum number of connections for the virtual server.

connection-limit max max-number

By default, the maximum number of connections is 0 for the virtual server. The number is not limited.

5. Set the maximum number of connections per second for the virtual server.

rate-limit connection connection-number

By default, the maximum number of connections per second is 0 for the virtual server. The number is not limited.

Enabling per-packet load balancing for UDP traffic

About per-packet load balancing for UDP traffic

By default, the LB device distributes traffic matching the virtual server according to application type. Traffic of the same application type is distributed to one real server. Perform this task to enable the LB device to distribute traffic matching the virtual server on a per-packet basis.

Procedure

1. Enter system view.

system-view

2. Enter UDP-based SIP or UDP virtual server view.

virtual-server virtual-server-name

3. Enable per-packet load balancing for UDP traffic for the virtual server.

udp per-packet

By default, per-packet load balancing for UDP traffic is disabled for the virtual server.

Configuring the HTTP redirection feature

About the HTTP redirection feature

This feature redirects all HTTP request packets matching a virtual server to the specified URL.

Procedure

1. Enter system view.

system-view

2. Enter HTTP virtual server view.

virtual-server virtual-server-name

3. Enable the redirection feature and specify a redirection URL for the virtual server.

redirect relocation relocation

By default, the redirection feature is disabled for the virtual server.

4. Specify the redirection status code that the LB device returns to a client.

redirect return-code { 301 | 302 }

By default, the redirection status code that the LB device returns to a client is 302.

This command takes effect only when the redirection feature is enabled for the virtual server.
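For reference, a 301 or 302 redirect is an ordinary HTTP response whose Location header carries the redirection URL. The Python sketch below builds such a response. The function name is hypothetical, the exact headers the LB device sends are not documented here (only the status line, the Location header, and an empty body are shown), and http://www.example.com/ is a placeholder URL.

```python
def redirect_response(relocation: str, return_code: int = 302) -> bytes:
    """Build a minimal HTTP/1.1 redirect response (302 unless 301 is requested)."""
    reasons = {301: "Moved Permanently", 302: "Found"}
    if return_code not in reasons:
        raise ValueError("redirect return-code supports only 301 and 302")
    lines = [
        f"HTTP/1.1 {return_code} {reasons[return_code]}",
        f"Location: {relocation}",
        "Content-Length: 0",
        "",
        "",  # together with the previous item, ends the header section
    ]
    return "\r\n".join(lines).encode("ascii")


print(redirect_response("http://www.example.com/").decode("ascii"))
```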

Specifying a parameter profile

About specifying a parameter profile

You can configure advanced parameters through a parameter profile. The virtual server references the parameter profile to analyze, process, and optimize service traffic.

Specifying a parameter profile for Layer 4 server load balancing

1. Enter system view.

system-view

2. Enter IP, TCP, or UDP virtual server view.

virtual-server virtual-server-name

3. Specify a parameter profile for the virtual server.

parameter { ip | tcp } profile-name [ client-side | server-side ]

By default, the virtual server does not reference any parameter profiles.

TCP virtual servers can only use a TCP parameter profile. IP virtual servers and UDP virtual servers can only use an IP parameter profile. Only TCP parameter profiles support the client-side and server-side keywords.

Specifying a parameter profile for Layer 7 server load balancing

1. Enter system view.

system-view

2. Enter fast HTTP, HTTP, RADIUS, TCP-based SIP, or UDP-based SIP virtual server view.

virtual-server virtual-server-name

3. Specify a parameter profile for the virtual server.

parameter { http | http-compression | http-statistics | ip | oneconnect | tcp | tcp-application } profile-name [ client-side | server-side ]

By default, the virtual server does not reference any parameter profiles.

Only fast HTTP and HTTP virtual servers support HTTP and TCP parameter profiles. Only HTTP virtual servers support HTTP-compression, HTTP-statistics, and OneConnect parameter profiles. Only TCP virtual servers operating at Layer 7 support TCP-application parameter profiles.

Only TCP parameter profiles support the client-side and server-side keywords.

Applying an LB connection limit policy

About applying an LB connection limit policy

Perform this task to limit the number of connections accessing the virtual server.

Procedure

1. Enter system view.

system-view

2. Enter virtual server view.

virtual-server virtual-server-name

3. Apply an LB connection limit policy to the virtual server.

lb-limit-policy policy-name

By default, no LB connection limit policies are applied to the virtual server.

Specifying an SSL policy

About specifying an SSL policy

Specifying an SSL client policy enables the LB device (SSL client) to send encrypted traffic to an SSL server.

Specifying an SSL server policy enables the LB device (SSL server) to send encrypted traffic to an SSL client.

Restrictions and guidelines

You must disable and then enable a virtual server for a modified SSL policy to take effect.

Procedure

1. Enter system view.

system-view

2. Enter TCP or HTTP virtual server view.

virtual-server virtual-server-name

3. Specify an SSL client policy for the virtual server.

ssl-client-policy policy-name

By default, the virtual server does not reference any SSL client policies.

The virtual servers of the TCP type and fast HTTP type do not support this command.

4. Specify an SSL server policy for the virtual server.

ssl-server-policy policy-name

By default, the virtual server does not reference any SSL server policies.

The virtual servers of the fast HTTP type do not support this command.

Configuring hot backup

About configuring hot backup

To implement hot backup for two LB devices, you must enable synchronization for session extension information and sticky entries to avoid service interruption.

Restrictions and guidelines

For sticky entries to be synchronized successfully, enable sticky entry synchronization on both LB devices before you specify a sticky group. You can specify a sticky group by using the sticky sticky-name option when you specify server farms.

Procedure

1. Enter system view.

system-view

2. Enter virtual server view.

virtual-server virtual-server-name

3. Enable session extension information synchronization.

connection-sync enable

By default, session extension information synchronization is disabled.

The virtual servers of the HTTP type do not support this command.

4. Enable sticky entry synchronization.

sticky-sync enable

By default, sticky entry synchronization is disabled.

Enabling IP address advertisement for a virtual server

About enabling IP address advertisement for a virtual server

This feature can implement load balancing among data centers in a disaster recovery network. You must enable IP address advertisement for a virtual server on the LB device in each data center.

After this feature is configured, the device advertises the IP address of the virtual server to OSPF for route calculation. When the service of a data center switches to another data center, the traffic to the virtual server can also be switched to that data center. For information about OSPF, see Layer 3—IP Routing Configuration Guide.

Procedure

1. Enter system view.

system-view

2. Enter virtual server view.

virtual-server virtual-server-name

3. Enable IP address advertisement for the virtual server.

route-advertisement enable

By default, IP address advertisement is disabled for a virtual server.

Specifying an interface for sending gratuitous ARP packets and ND packets

About specifying an interface for sending gratuitous ARP packets and ND packets

Perform this task to specify an interface from which gratuitous ARP packets and ND packets are sent out. For information about gratuitous ARP, see ARP configuration in Layer 3—IP Services Configuration Guide. For information about ND, see IPv6 basics configuration in Layer 3—IP Services Configuration Guide.

Procedure

1. Enter system view.

system-view

2. Enter virtual server view.

virtual-server virtual-server-name

3. Specify an interface for sending gratuitous ARP packets and ND packets.

arp-nd interface interface-type interface-number

By default, no interface is specified for sending gratuitous ARP packets and ND packets.

Enabling a virtual server

About enabling a virtual server

After you configure a virtual server, you must enable the virtual server for it to work.

Procedure

1. Enter system view.

system-view

2. Enter virtual server view.

virtual-server virtual-server-name

3. Enable the virtual server.

service enable

By default, the virtual server is disabled.

Configuring an LB class

An LB class classifies packets by comparing packets against specific rules. Matching packets are further processed by LB actions. You can create a maximum of 65535 rules for an LB class.

LB class tasks at a glance

To configure an LB class, perform the following tasks:

1. Configure a generic LB class

a. Creating an LB class

b. Create a match rule

Choose the following tasks as needed:

- Creating a match rule that references an LB class

- Creating a source IP address match rule

- Creating an interface match rule

- Creating a user group match rule

- Creating a TCP payload match rule

2. Configure an HTTP LB class

a. Creating an LB class

b. Create a match rule

Choose the following tasks as needed:

- Creating a match rule that references an LB class

- Creating a source IP address match rule

- Creating an interface match rule

- Creating a user group match rule

- Creating an HTTP content match rule

- Creating an HTTP cookie match rule

- Creating an HTTP header match rule

- Creating an HTTP URL match rule

- Creating an HTTP method match rule

- Creating a RADIUS attribute match rule

3. Configure a RADIUS LB class

a. Creating an LB class

b. Create a match rule

Choose the following tasks as needed:

- Creating a match rule that references an LB class

- Creating a source IP address match rule

- Creating a RADIUS attribute match rule

Creating an LB class

Creating an LB class for Layer 4 server load balancing

1. Enter system view.

system-view

2. Create a generic LB class, and enter LB class view.

loadbalance class class-name type generic [ match-all | match-any ]

When you create an LB class, you must specify the class type. You can enter an existing LB class view without specifying the class type. If you specify the class type when entering an existing LB class view, the class type must be the one specified when you create the LB class.

3. (Optional.) Configure a description for the LB class.

description text

By default, no description is configured for the LB class.

Creating an LB class for Layer 7 server load balancing

1. Enter system view.

system-view

2. Create an HTTP or RADIUS LB class, and enter LB class view.

loadbalance class class-name type { http | radius } [ match-all | match-any ]

When you create an LB class, you must specify a class type. You can enter an existing LB class view without specifying the class type. If you specify the class type when entering an existing LB class view, it must be the type that was specified when the LB class was created.

3. (Optional.) Configure a description for the LB class.

description text

By default, no description is configured for the LB class.
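A similar sketch for Layer 7, with a hypothetical class name and description. It creates an HTTP class with match-all; a RADIUS class would be created the same way with type radius.

```
system-view
loadbalance class lbclass-http type http match-all
description http-requests
```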

Creating a match rule that references an LB class

1. Enter system view.

system-view

2. Enter LB class view.

loadbalance class class-name

3. Create a match rule that references an LB class.

match [ match-id ] class class-name
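The sketch below nests one class inside another. Both class names and the source subnet are hypothetical. It assumes the referenced class should exist beforehand and be of the same type as the referencing class; this section does not state either requirement.

```
system-view
loadbalance class lbclass-src type generic match-any
match 1 source ip address 192.168.10.0 24
quit
loadbalance class lbclass-main type generic match-any
match 1 class lbclass-src
```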

Creating a source IP address match rule

1. Enter system view.

system-view

2. Enter LB class view.

loadbalance class class-name

3. Create a source IP address match rule.

match [ match-id ] source { ip address ipv4-address [ mask-length | mask ] | ipv6 address ipv6-address [ prefix-length ] }
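A sketch with one IPv4 rule and one IPv6 rule in the same class. The class name and both prefixes (192.168.10.0/24 and the documentation prefix 2001:db8::/64) are placeholders. Assuming match-any means a packet needs to match only one rule, traffic from either subnet matches the class.

```
system-view
loadbalance class lbclass-src type generic match-any
match 1 source ip address 192.168.10.0 24
match 2 source ipv6 address 2001:db8:: 64
```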

Creating an ACL match rule

1. Enter system view.

system-view

2. Enter LB class view.

loadbalance class class-name

3. Create an ACL match rule.

match [ match-id ] acl [ ipv6 ] { acl-number | name acl-name }
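A sketch that references one numbered IPv4 ACL and one named IPv6 ACL. ACL 3000 and the name acl6-web are hypothetical, and the sketch assumes both ACLs have already been created and populated; ACL configuration itself is outside this section.

```
system-view
loadbalance class lbclass-acl type generic match-any
match 1 acl 3000
match 2 acl ipv6 name acl6-web
```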

Creating an interface match rule

1. Enter system view.

system-view

2. Enter generic or HTTP LB class view.

loadbalance class class-name

3. Create an interface match rule.

match [ match-id ] interface interface-type interface-number
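A sketch of an interface rule in a generic class. The class name and the interface gigabitethernet 1/0/1 are hypothetical; substitute an interface that exists on your device.

```
system-view
loadbalance class lbclass-if type generic match-any
match 1 interface gigabitethernet 1/0/1
```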

Creating a user match rule

1. Enter system view.

system-view

2. Enter generic or HTTP LB class view.

loadbalance class class-name

3. Create a user match rule.

match [ match-id ] [ identity-domain domain-name ] user user-name
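A sketch of two user rules in an HTTP class, one qualified with an identity domain and one without. The class, domain, and user names are hypothetical.

```
system-view
loadbalance class lbclass-user type http match-any
match 1 identity-domain dm1 user alice
match 2 user bob
```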

Creating a user group match rule

1. Enter system view.

system-view

2. Enter generic or HTTP LB class view.

loadbalance class class-name

3. Create a user group match rule.

match [ match-id ] [ identity-domain domain-name ] user-group user-group-name
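A sketch of a user group rule in a generic class. The class, domain, and group names are hypothetical.

```
system-view
loadbalance class lbclass-grp type generic match-any
match 1 identity-domain dm1 user-group finance
```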

Creating a TCP payload match rule

About the TCP payload match rule

The device takes the corresponding LB action on TCP packets that match a TCP payload match rule. If you specify the not keyword for the rule, the device instead takes the LB action on TCP packets that do not match it.

Procedure

1. Enter system view.

system-view

2. Enter generic LB class view.

loadbalance class class-name

3. Create a TCP payload match rule.

match [ match-id ] payload payload [ case-insensitive ] [ not ]
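A sketch that uses the not keyword, so the LB action applies to TCP packets whose payload does not match the given value. The class name and the payload value admin are hypothetical. This section does not say whether the payload is treated as a literal string or a pattern, so the sketch uses a plain word.

```
system-view
loadbalance class lbclass-payload type generic match-any
match 1 payload admin case-insensitive not
```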

Creating an HTTP content match rule

1. Enter system view.

system-view

2. Enter HTTP LB class view.

loadbalance class class-name

3. Create an HTTP content match rule.

match [ match-id ] content content [ offset offset ]

This command is not supported by virtual servers of the fast HTTP type.
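A sketch of an HTTP content rule with a hypothetical class name and content string. The offset value 0 is an assumption chosen for illustration, because this section does not define how the offset is measured. As noted above, the content rule is not supported by fast HTTP virtual servers.

```
system-view
loadbalance class lbclass-content type http match-any
match 1 content login offset 0
```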

Creating an HTTP cookie match rule

1. Enter system view.

system-view

2. Enter HTTP LB class view.

loadbalance class class-name

3. Create an HTTP cookie match rule.

match [ match-id ] cookie cookie-name value value
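A sketch of a cookie rule. The class name, cookie name, and cookie value are hypothetical.

```
system-view
loadbalance class lbclass-cookie type http match-any
match 1 cookie sessionid value abc123
```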

Creating an HTTP header match rule

1. Enter system view.

system-view

2. Enter HTTP LB class view.

loadbalance class class-name

3. Create an HTTP header match rule.

match [ match-id ] header header-name value value
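A sketch of a header rule that classifies requests by their Host header. The class name and the host value www.example.com are hypothetical.

```
system-view
loadbalance class lbclass-host type http match-any
match 1 header Host value www.example.com
```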

Creating an HTTP URL match rule

1. Enter system view.

system-view

2. Enter HTTP LB class view.

loadbalance class class-name

3. Create an HTTP URL match rule.

match [ match-id ] url url
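A sketch of a URL rule with a hypothetical class name and URL value. This section does not say whether url is matched literally or as a pattern, so the sketch uses a plain path.

```
system-view
loadbalance class lbclass-url type http match-any
match 1 url /images/logo.png
```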

Creating an HTTP method match rule

1. Enter system view.

system-view

2. Enter HTTP LB class view.

loadbalance class class-name

3. Create an HTTP method match rule.

match [ match-id ] method { ext ext-type | rfc rfc-type }

Creating a RADIUS attribute match rule

1. Enter system view.

system-view

2. Enter RADIUS LB class view.

loadbalance class class-name

3. Create a RADIUS attribute match rule.

match [ match-id ] radius-attribute { code attribute-code | user-name } value attribute-value
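A sketch of a RADIUS class with two attribute rules: one using the user-name keyword and one using a numeric attribute code. Code 31 is Calling-Station-Id in RFC 2865; the class name and both values are hypothetical.

```
system-view
loadbalance class lbclass-radius type radius match-any
match 1 radius-attribute user-name value alice
match 2 radius-attribute code 31 value 00-11-22-33-44-55
```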

Configuring an LB action

About LB action modes

LB actions include the following modes:

· Forwarding mode—Determines whether and how to forward packets. If no forwarding action is specified, packets are dropped.

· Modification mode—Modifies packets. To prevent the LB device from dropping the modified packets, the modification action must be used together with a forwarding action.

· Response mode—Responds to client requests by using a file.

If you create an LB action without specifying any of the previous action modes, packets are dropped.

Restrictions and guidelines

For Layer 4 server load balancing, the following configurations are mutually exclusive:

· Configure the forwarding mode.

· Specify server farms.

For Layer 7 server load balancing, any two of the following configurations are mutually exclusive:

· Specify server farms.

· Configure the redirection feature.

· Specify a response file for matching HTTP requests.

For Layer 7 server load balancing, the following configurations are also mutually exclusive:

· Match the next rule upon failure to find a real server.

· Specify a response file used upon load balancing failure.

LB action tasks at a glance

To configure an LB action, perform the following tasks:

1. Configuring a generic LB action

a. Creating an LB action

b. (Optional.) Configuring a forwarding LB action

You can configure only one of the "Configuring the forwarding mode", "Specifying server farms", and "Closing TCP connections" tasks.

- Configuring the forwarding mode

- Specifying server farms

- Closing TCP connections

- (Optional.) Matching the next rule upon failure to find a real server

- (Optional.) Closing TCP connections upon failure to find a real server

c. (Optional.) Configuring a modification LB action

- Configuring the ToS field in IP packets sent to the server

2. Configuring an HTTP LB action

a. Creating an LB action

b. (Optional.) Configuring a forwarding LB action

You can configure only one of the "Specifying server farms" and "Closing TCP connections" tasks.

- Specifying server farms

- Closing TCP connections

- (Optional.) Matching the next rule upon failure to find a real server

- (Optional.) Closing TCP connections upon failure to find a real server

c. (Optional.) Configuring a modification LB action

Choose the following tasks as needed:

- Configuring the ToS field in IP packets sent to the server

- Rewriting the URL in the Location header of HTTP responses from the server

- Specifying an SSL client policy

- Rewriting the content of HTTP responses

- Configuring the HTTP redirection feature

d. (Optional.) Configuring a response LB action

- Specifying a response file for matching HTTP requests

- Specifying a response file used upon load balancing failure

3. Configuring a RADIUS LB action

a. Creating an LB action

b. (Optional.) Configuring a forwarding LB action

- Matching the next rule upon failure to find a real server

c. (Optional.) Configuring a modification LB action

- Configuring the ToS field in IP packets sent to the server

Creating an LB action

Creating an LB action for Layer 4 server load balancing

1. Enter system view.

system-view

2. Create a generic LB action, and enter LB action view.

loadbalance action action-name type generic

When you create an LB action, you must specify the action type. You can enter an existing LB action view without specifying the action type. If you specify the action type when entering an existing LB action view, it must be the type that was specified when the LB action was created.

3. (Optional.) Configure a description for the LB action.

description text

By default, no description is configured for the LB action.
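A minimal sketch of the steps above, entered from user view with device prompts omitted. The action name and description text are hypothetical.

```
system-view
loadbalance action lbaction-l4 type generic
description l4-forward
```

As with LB classes, loadbalance action lbaction-l4 later re-enters the view without the type keyword.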

Creating an LB action for Layer 7 server load balancing

1. Enter system view.

system-view

2. Create an HTTP or RADIUS LB action, and enter LB action view.

loadbalance action action-name type { http | radius }

When you create an LB action, you must specify the action type. You can enter an existing LB action view without specifying the action type. If you specify the action type when entering an existing LB action view, it must be the type that was specified when the LB action was created.

3. (Optional.) Configure a description for the LB action.

description text

By default, no description is configured for the LB action.
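The Layer 7 equivalent, creating an HTTP action with a hypothetical name and description. For a RADIUS action, replace http with radius.

```
system-view
loadbalance action lbaction-http type http
description http-forward
```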

Configuring a forwarding LB action

About forwarding LB actions

The following forwarding LB actions are available:

· Forward—Forwards matching packets.

· Specify server farms—When the primary server farm is available (contains available real servers), the primary server farm is used to guide packet forwarding. When the primary server farm is not available, the backup server farm is used to guide packet forwarding.

· Close TCP connections—Closes TCP connections matching the LB policy by sending FIN or RST packets.

· Match the next rule upon failure to find a real server—If the device fails to find a real server according to the LB action, it matches the packet with the next rule in the LB policy.

· Close TCP connections upon failure to find a real server—Closes TCP connections matching the LB policy by sending FIN or RST packets if the device fails to find a real server according to the LB action.

Configuring the forwarding mode

1. Enter system view.

system-view

2. Enter generic LB action view.