H3C Data Center Switches M-LAG Configuration Guide-6W100

06-M-LAG+RDMA Configuration Example

Example: Deploying M-LAG and RDMA

Configuring S6850 switches as leaf nodes

Configuring global RoCE settings

Configuring the links towards the spine tier

Configuring RoCE settings for the links towards the spine tier

Configuring RoCE settings for the peer link

Configuring the links towards the servers

Configuring RoCE settings for the links towards the servers

Configuring an underlay BGP instance

Configuring VLAN interfaces and gateways (dual-active gateways)

Configuring VLAN interfaces and gateways (VRRP gateways)

Spine device configuration tasks at a glance

Configuring global RoCE settings

Configuring the links towards the leaf tier

Configuring RoCE settings for the links towards the leaf tier

Configuring routing policies to replace the original AS numbers with the local AS number

Configuring an underlay BGP instance

Influence of adding or deleting commands on traffic

Convergence performance test results

Guidelines for tuning parameters

Identifying whether packets are dropped

Example: Deploying M-LAG and RDMA

Network configuration

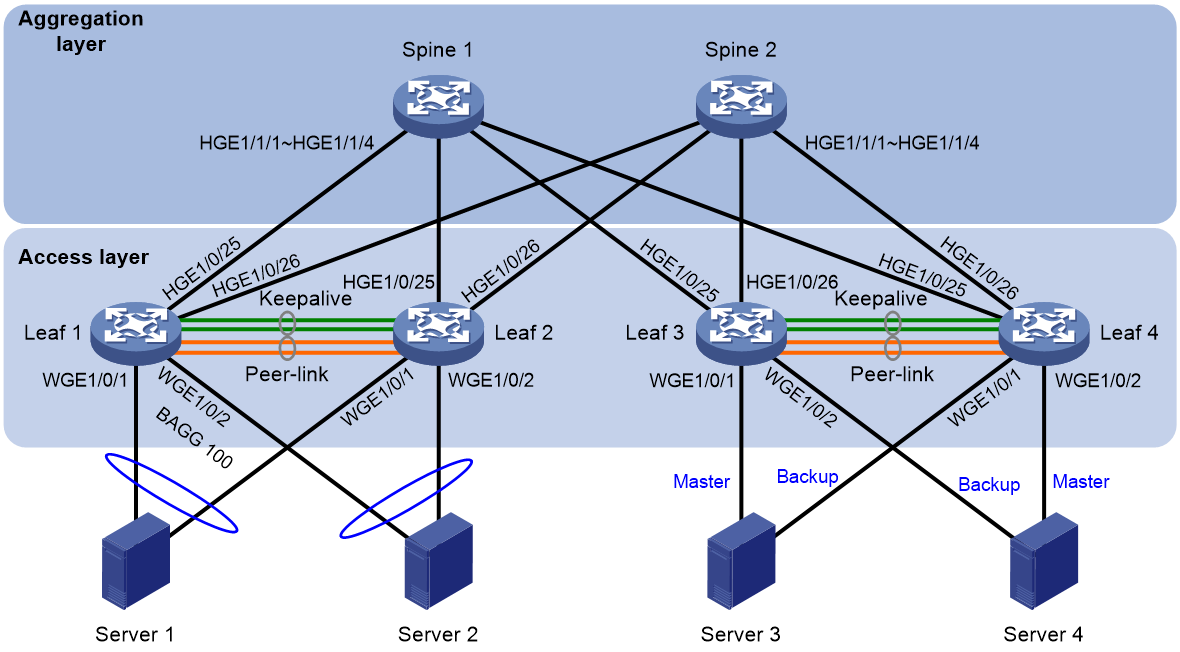

As shown in Figure 1, build a Layer 2 network within the data center to implement traffic forwarding across leaf devices and spine devices.

The following is the network configuration:

· Deploy the leaf devices as access switches. Use M-LAG to pair the access switches into two M-LAG systems. The M-LAG interfaces in an M-LAG system form a multichassis aggregate link for improved link usage. Configure dual-active VLAN gateways or VRRP gateways on the leaf devices to provide gateway services for the servers.

· Deploy the spine devices as aggregation switches. Configure them as route reflectors (RRs) to reflect BGP routes among leaf devices.

· Configure intelligent lossless network features such as PFC and data buffer settings to provide zero packet loss for RDMA traffic. In this example, zero packet loss is implemented for packets with 802.1p priority 3.

· Configure DHCP relay on leaf devices to help servers obtain IP addresses.

| Device | Interface | IP address | Remarks |
|---|---|---|---|
| Leaf 1 | WGE1/0/1 | N/A | Member port of an M-LAG interface. Connected to Server 1. |
| | WGE1/0/2 | N/A | Member port of an M-LAG interface. Connected to Server 2. |
| | HGE1/0/29 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/29 on Leaf 2. |
| | HGE1/0/30 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/30 on Leaf 2. |
| | HGE1/0/31 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/31 on Leaf 2. |
| | HGE1/0/32 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/32 on Leaf 2. |
| | WGE1/0/55 | RAGG 1000: 1.1.1.1/30 | Keepalive link between M-LAG member devices. Connected to WGE 1/0/55 on Leaf 2. |
| | WGE1/0/56 | RAGG 1000: 1.1.1.1/30 | Keepalive link between M-LAG member devices. Connected to WGE 1/0/56 on Leaf 2. |
| | HGE1/0/25 | 172.16.2.154/30 | Connected to HGE 1/1/1 on Spine 1. |
| | HGE1/0/26 | 172.16.3.154/30 | Connected to HGE 1/1/1 on Spine 2. |
| | Loopback1 | 50.50.255.41/32 | N/A |
| Leaf 2 | WGE1/0/1 | N/A | Member port of an M-LAG interface. Connected to Server 1. |
| | WGE1/0/2 | N/A | Member port of an M-LAG interface. Connected to Server 2. |
| | HGE1/0/29 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/29 on Leaf 1. |
| | HGE1/0/30 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/30 on Leaf 1. |
| | HGE1/0/31 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/31 on Leaf 1. |
| | HGE1/0/32 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/32 on Leaf 1. |
| | WGE1/0/55 | RAGG 1000: 1.1.1.2/30 | Keepalive link between M-LAG member devices. Connected to WGE 1/0/55 on Leaf 1. |
| | WGE1/0/56 | RAGG 1000: 1.1.1.2/30 | Keepalive link between M-LAG member devices. Connected to WGE 1/0/56 on Leaf 1. |
| | HGE1/0/25 | 172.16.2.158/30 | Connected to HGE 1/1/2 on Spine 1. |
| | HGE1/0/26 | 172.16.3.158/30 | Connected to HGE 1/1/2 on Spine 2. |
| | Loopback1 | 50.50.255.42/32 | N/A |
| Leaf 3 | WGE1/0/1 | N/A | Interface connected to a single-homed device. Connected to Server 3. |
| | WGE1/0/2 | N/A | Interface connected to a single-homed device. Connected to Server 4. |
| | HGE1/0/29 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/29 on Leaf 4. |
| | HGE1/0/30 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/30 on Leaf 4. |
| | HGE1/0/31 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/31 on Leaf 4. |
| | HGE1/0/32 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/32 on Leaf 4. |
| | WGE1/0/55 | RAGG 1000: 1.1.1.1/30 | Keepalive link between M-LAG member devices. Connected to WGE 1/0/55 on Leaf 4. |
| | WGE1/0/56 | RAGG 1000: 1.1.1.1/30 | Keepalive link between M-LAG member devices. Connected to WGE 1/0/56 on Leaf 4. |
| | HGE1/0/25 | 172.16.2.82/30 | Connected to HGE 1/1/3 on Spine 1. |
| | HGE1/0/26 | 172.16.3.82/30 | Connected to HGE 1/1/3 on Spine 2. |
| | Loopback1 | 50.50.255.23/32 | N/A |
| Leaf 4 | WGE1/0/1 | N/A | Interface connected to a single-homed device. Connected to Server 3. |
| | WGE1/0/2 | N/A | Interface connected to a single-homed device. Connected to Server 4. |
| | HGE1/0/29 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/29 on Leaf 3. |
| | HGE1/0/30 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/30 on Leaf 3. |
| | HGE1/0/31 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/31 on Leaf 3. |
| | HGE1/0/32 | N/A | Member port of the peer-link interface. Connected to HGE 1/0/32 on Leaf 3. |
| | WGE1/0/55 | RAGG 1000: 1.1.1.2/30 | Keepalive link between M-LAG member devices. Connected to WGE 1/0/55 on Leaf 3. |
| | WGE1/0/56 | RAGG 1000: 1.1.1.2/30 | Keepalive link between M-LAG member devices. Connected to WGE 1/0/56 on Leaf 3. |
| | HGE1/0/25 | 172.16.2.86/30 | Connected to HGE 1/1/4 on Spine 1. |
| | HGE1/0/26 | 172.16.3.86/30 | Connected to HGE 1/1/4 on Spine 2. |
| | Loopback1 | 50.50.255.24/32 | N/A |
| Spine 1 | HGE1/1/1 | 172.16.2.153/30 | Connected to HGE 1/0/25 on Leaf 1. |
| | HGE1/1/2 | 172.16.2.157/30 | Connected to HGE 1/0/25 on Leaf 2. |
| | HGE1/1/3 | 172.16.2.81/30 | Connected to HGE 1/0/25 on Leaf 3. |
| | HGE1/1/4 | 172.16.2.85/30 | Connected to HGE 1/0/25 on Leaf 4. |
| | Loopback1 | 50.50.255.1/32 | N/A |
| Spine 2 | HGE1/1/1 | 172.16.3.153/30 | Connected to HGE 1/0/26 on Leaf 1. |
| | HGE1/1/2 | 172.16.3.157/30 | Connected to HGE 1/0/26 on Leaf 2. |
| | HGE1/1/3 | 172.16.3.81/30 | Connected to HGE 1/0/26 on Leaf 3. |
| | HGE1/1/4 | 172.16.3.85/30 | Connected to HGE 1/0/26 on Leaf 4. |
| | Loopback1 | 50.50.255.2/32 | N/A |
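The addressing plan in the interface table can be sanity-checked before deployment. The following Python sketch uses the standard `ipaddress` module to confirm that both ends of every leaf-to-spine link and every keepalive link are distinct usable host addresses in the same /30 subnet, and that no loopback address is assigned twice. The `LINKS` and `LOOPBACKS` data are copied from the table; the helper names are hypothetical and not part of any H3C tool.

```python
import ipaddress
from collections import Counter

# Point-to-point links from the interface table: (label, end A, end B).
LINKS = [
    ("Leaf 1 - Spine 1", "172.16.2.154/30", "172.16.2.153/30"),
    ("Leaf 2 - Spine 1", "172.16.2.158/30", "172.16.2.157/30"),
    ("Leaf 3 - Spine 1", "172.16.2.82/30", "172.16.2.81/30"),
    ("Leaf 4 - Spine 1", "172.16.2.86/30", "172.16.2.85/30"),
    ("Leaf 1 - Spine 2", "172.16.3.154/30", "172.16.3.153/30"),
    ("Leaf 2 - Spine 2", "172.16.3.158/30", "172.16.3.157/30"),
    ("Leaf 3 - Spine 2", "172.16.3.82/30", "172.16.3.81/30"),
    ("Leaf 4 - Spine 2", "172.16.3.86/30", "172.16.3.85/30"),
    ("Leaf 1 - Leaf 2 keepalive (RAGG 1000)", "1.1.1.1/30", "1.1.1.2/30"),
    ("Leaf 3 - Leaf 4 keepalive (RAGG 1000)", "1.1.1.1/30", "1.1.1.2/30"),
]

# Loopback1 addresses from the interface table.
LOOPBACKS = {
    "Leaf 1": "50.50.255.41", "Leaf 2": "50.50.255.42",
    "Leaf 3": "50.50.255.23", "Leaf 4": "50.50.255.24",
    "Spine 1": "50.50.255.1", "Spine 2": "50.50.255.2",
}


def check_link(a: str, b: str) -> bool:
    """True if a and b are different usable host addresses in the same subnet."""
    ia, ib = ipaddress.ip_interface(a), ipaddress.ip_interface(b)
    if ia.network != ib.network or ia.ip == ib.ip:
        return False
    hosts = set(ia.network.hosts())  # excludes the network and broadcast addresses
    return ia.ip in hosts and ib.ip in hosts


def bad_links(links):
    """Return the labels of links whose two ends fail check_link()."""
    return [label for label, a, b in links if not check_link(a, b)]


def duplicate_loopbacks(loopbacks):
    """Return loopback addresses that are assigned to more than one device."""
    counts = Counter(loopbacks.values())
    return sorted(addr for addr, n in counts.items() if n > 1)


if __name__ == "__main__":
    print("bad links:", bad_links(LINKS))
    print("duplicate loopbacks:", duplicate_loopbacks(LOOPBACKS))
```

The sketch checks each link on its own, so the reuse of 1.1.1.0/30 for the keepalive links of both M-LAG systems (as in the table) is not flagged.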

Connectivity models

The following are the types of connectivity between servers:

· Layer 2 connectivity between servers attached to the same M-LAG system at the leaf tier.

· Layer 3 connectivity between servers attached to the same M-LAG system at the leaf tier.

· Layer 3 connectivity between servers attached to different M-LAG systems at the leaf tier.

Applicable product matrix

| Role | Devices | Software version |
|---|---|---|
| Spine | S12500X-AF (This example uses S12500X-AF switches.) | Not recommended. |
| | S12500G-AF | Not recommended. |
| | S9820-8C (This example uses S9820-8C switches as spine nodes.) | R6710 |
| Leaf | S6800, S6860 | Not recommended. |
| | S6812, S6813 | Not recommended. |
| | S6805, S6825, S6850, S9850 (This example uses S6850 switches as leaf nodes.) | R6710 |
| | S6890 | Not recommended. |
| | S9820-64H | R6710 |
| Server NIC | Mellanox ConnectX-6 Lx | · Driver version: MLNX_OFED_LINUX-5.4-3.2.7.2.3-rhel8.4-x86_64<br>· Firmware version:<br>○ Driver: mlx5_core<br>○ Version: 5.4-3.2.7.2.3<br>○ firmware-version: 26.31.2006 (MT_0000000531) |
| | Mellanox ConnectX-5 | · Driver version: MLNX_OFED_LINUX-5.4-3.2.7.2.3-rhel8.4-x86_64<br>· Firmware version:<br>○ Driver: mlx5_core<br>○ Version: 5.4-3.2.7.2.3<br>○ firmware-version: 16.31.2006 (MT_0000000080) |
| | Mellanox ConnectX-4 Lx | · Driver version: MLNX_OFED_LINUX-5.4-3.2.7.2.3-rhel8.4-x86_64<br>· Firmware version:<br>○ Driver: mlx5_core<br>○ Version: 5.4-3.2.7.2.3<br>○ firmware-version: 14.31.2006 (MT_2420110034) |

Restrictions and guidelines

Determine the appropriate convergence ratio according to actual business needs. As a best practice, use two or four high-speed interfaces for the peer link to meet the east-west traffic forwarding requirements among servers in the same leaf group. Use the remaining high-speed interfaces as uplink interfaces, and use common-rate interfaces to connect to servers. If the convergence ratio cannot meet requirements, attach fewer servers to the leaf devices.
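A convergence (oversubscription) ratio is commonly computed as the server-facing bandwidth of a leaf device divided by its uplink bandwidth. The following Python sketch shows that arithmetic and how many server ports fit a target ratio. The 48 x 25GE server-port count is a hypothetical value, not taken from this example; the two 100GE uplinks match HGE1/0/25 and HGE1/0/26 in the interface table, and the peer-link interfaces are left out because they carry east-west traffic inside the leaf group. The function names are illustrative only.

```python
from math import floor


def convergence_ratio(server_ports: int, server_gbps: float,
                      uplink_ports: int, uplink_gbps: float) -> float:
    """Server-facing bandwidth divided by spine-facing (uplink) bandwidth."""
    return (server_ports * server_gbps) / (uplink_ports * uplink_gbps)


def max_server_ports(target_ratio: float, server_gbps: float,
                     uplink_ports: int, uplink_gbps: float) -> int:
    """Largest number of server ports that keeps the ratio at or below target."""
    return floor(target_ratio * uplink_ports * uplink_gbps / server_gbps)


if __name__ == "__main__":
    # Hypothetical leaf: 48 x 25GE server ports and 2 x 100GE uplinks.
    print(convergence_ratio(48, 25, 2, 100))  # 1200 Gbps / 200 Gbps = 6.0
    print(max_server_ports(3.0, 25, 2, 100))  # 24 ports keep the ratio at 3:1
```

If the computed ratio is higher than the business can tolerate, either add uplinks or reduce the number of attached servers, as the guideline above recommends.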

When you enable PFC for an 802.1p priority, the device automatically sets a default value for each PFC threshold. As a best practice, use the default threshold values. For more information, see "Tuning the parameters" and "Guidelines for tuning parameters."

You can configure WRED by using one of the following approaches:

· Interface configuration: Configure WRED parameters on an interface and enable WRED. With this approach, you can configure WRED parameters only for the RoCE queue, and you can use different WRED parameters on different interfaces. This approach is recommended.

· WRED table configuration: Create a WRED table, configure WRED parameters for each queue, and then apply the WRED table to an interface. If you do not configure WRED parameters for a queue, that queue uses the default values. As a best practice, configure WRED parameters for every queue, because the default values are small and might not suit your network.
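The WRED parameters used in this example (low limit, high limit, discard probability, weighting constant, and ECN) correspond to the classic RED model. The following Python sketch models that generic curve to show how the values interact. It is an approximation for reasoning about the settings, not a description of how the switch hardware computes queue averages; the limits are treated as plain numbers, and the function names are hypothetical.

```python
def red_average(prev_avg: float, current: float, weighting_constant: int) -> float:
    """Exponentially weighted average queue length used by classic RED/WRED.

    The new sample gets weight 1 / 2**weighting_constant, so a constant of 0
    (as configured for queue 3) makes the average equal to the current length.
    """
    weight = 1.0 / (2 ** weighting_constant)
    return prev_avg + weight * (current - prev_avg)


def red_probability(avg: float, low_limit: float, high_limit: float,
                    discard_probability: int) -> float:
    """Drop (or, with ECN, mark) probability on the generic linear RED curve.

    discard_probability is a percentage, as in the configuration tables.
    """
    if avg < low_limit:
        return 0.0
    if avg >= high_limit:
        return 1.0  # generic model: every packet above the high limit is affected
    return (discard_probability / 100.0) * (avg - low_limit) / (high_limit - low_limit)


def red_action(probability: float, draw: float, ecn_enabled: bool, ect_packet: bool) -> str:
    """Decide what happens to one packet, given a uniform random draw in [0, 1)."""
    if draw >= probability:
        return "enqueue"
    # With ECN enabled on the queue, ECN-capable packets are marked CE instead of dropped.
    return "mark-ce" if ecn_enabled and ect_packet else "drop"


if __name__ == "__main__":
    # RoCE queue values from the 100G WRED table: low 1000, high 2000, 20%.
    avg = red_average(prev_avg=800, current=1500, weighting_constant=0)
    p = red_probability(avg, 1000, 2000, 20)
    print(avg, p, red_action(p, draw=0.05, ecn_enabled=True, ect_packet=True))
```

Small limits on the RoCE queue make ECN marking start early, so senders slow down before PFC pause frames are needed; the large limits on the other queues effectively keep WRED out of their way.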

Configuring S6850 switches as leaf nodes

This example describes the procedure to deploy nodes Leaf 1 and Leaf 2. The same procedure applies to nodes Leaf 3 and Leaf 4 except the single-homed device configuration.

Procedure summary

· Configuring global RoCE settings

· Configuring the links towards the spine tier

· Configuring RoCE settings for the links towards the spine tier. Choose one option as needed:

○ Configuring WRED on an interface

· Configuring RoCE settings for the peer link

· Configuring the links towards the servers

· Configuring RoCE settings for the links towards the servers. Choose one option as needed:

○ Configuring WRED on an interface

· Configuring an underlay BGP instance

· Configuring VLAN interfaces and gateways. Choose one option as needed:

○ Configuring VLAN interfaces and gateways (dual-active gateways)

○ Configuring VLAN interfaces and gateways (VRRP gateways)

Configuring global RoCE settings

Configuring global PFC settings and data buffer settings

| Leaf 1 | Leaf 2 | Description | Remarks |
|---|---|---|---|
| priority-flow-control poolID 0 headroom 131072 | priority-flow-control poolID 0 headroom 131072 | Set the maximum number of cell resources that can be used in the headroom storage space. | Set the maximum value allowed. |
| priority-flow-control deadlock cos 3 interval 10 | priority-flow-control deadlock cos 3 interval 10 | Set the PFC deadlock detection interval for CoS 3. | N/A |
| priority-flow-control deadlock precision high | priority-flow-control deadlock precision high | Set the precision for the PFC deadlock detection timer. | Use high precision for the PFC deadlock detection timer. |
| buffer egress cell queue 3 shared ratio 100 | buffer egress cell queue 3 shared ratio 100 | Set the maximum shared-area ratio to 100% for the RoCE queue (queue 3). | N/A |
| buffer egress cell queue 6 shared ratio 100 | buffer egress cell queue 6 shared ratio 100 | Set the maximum shared-area ratio to 100% for the CNP queue (queue 6). | N/A |
| buffer apply | buffer apply | Apply the manually configured data buffer settings. | N/A |

Configuring WRED tables

| Leaf 1 | Leaf 2 | Description | Remarks |
|---|---|---|---|
| qos wred queue table 100G-WRED-Template | qos wred queue table 100G-WRED-Template | Create a queue-based WRED table. | N/A |
| queue 0 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 0 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 0 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 0 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 0 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 0 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 0. | N/A |
| queue 1 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 1 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 1 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 1 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 1 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 1 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 1. | N/A |
| queue 2 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 2 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 2 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 2 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 2 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 2 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 2. | N/A |
| queue 3 drop-level 0 low-limit 1000 high-limit 2000 discard-probability 20<br>queue 3 drop-level 1 low-limit 1000 high-limit 2000 discard-probability 20<br>queue 3 drop-level 2 low-limit 1000 high-limit 2000 discard-probability 20<br>queue 3 weighting-constant 0<br>queue 3 ecn | queue 3 drop-level 0 low-limit 1000 high-limit 2000 discard-probability 20<br>queue 3 drop-level 1 low-limit 1000 high-limit 2000 discard-probability 20<br>queue 3 drop-level 2 low-limit 1000 high-limit 2000 discard-probability 20<br>queue 3 weighting-constant 0<br>queue 3 ecn | Configure WRED table settings for queue 3. | Set small values for the low limit and high limit for the RoCE queue and large values for other queues. |
| queue 4 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 4 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 4 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 4 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 4 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 4 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 4. | N/A |
| queue 5 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 5 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 5 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 5 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 5 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 5 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 5. | N/A |
| queue 6 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 ecn | queue 6 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 ecn | Configure WRED table settings for queue 6. | N/A |
| queue 7 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 7 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 7 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 7 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 7 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 7 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 7. | N/A |
| qos wred queue table 25G-WRED-Template | qos wred queue table 25G-WRED-Template | Create a queue-based WRED table. | N/A |
| queue 0 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 0 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 0 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 0 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 0 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 0 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 0. | N/A |
| queue 1 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 1 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 1 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 1 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 1 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 1 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 1. | N/A |
| queue 2 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 2 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 2 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 2 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 2 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 2 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 2. | N/A |
| queue 3 drop-level 0 low-limit 400 high-limit 1625 discard-probability 20<br>queue 3 drop-level 1 low-limit 400 high-limit 1625 discard-probability 20<br>queue 3 drop-level 2 low-limit 400 high-limit 1625 discard-probability 20<br>queue 3 weighting-constant 0<br>queue 3 ecn | queue 3 drop-level 0 low-limit 400 high-limit 1625 discard-probability 20<br>queue 3 drop-level 1 low-limit 400 high-limit 1625 discard-probability 20<br>queue 3 drop-level 2 low-limit 400 high-limit 1625 discard-probability 20<br>queue 3 weighting-constant 0<br>queue 3 ecn | Configure WRED table settings for queue 3. | Set small values for the low limit and high limit for the RoCE queue and large values for other queues. |
| queue 4 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 4 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 4 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 4 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 4 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 4 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 4. | N/A |
| queue 5 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 5 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 5 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 5 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 5 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 5 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 5. | N/A |
| queue 6 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 ecn | queue 6 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 6 ecn | Configure WRED table settings for queue 6. | N/A |
| queue 7 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 7 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 7 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | queue 7 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 7 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20<br>queue 7 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 | Configure WRED table settings for queue 7. | N/A |
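Because the two WRED tables differ only in the limits of the RoCE queue (queue 3), their command lines can be generated from a few parameters, which also helps keep Leaf 1 and Leaf 2 identical. The following Python sketch reproduces the commands listed in the table above. The `wred_table` helper and its parameter names are hypothetical; review the output before applying it to a device. The one-space indentation only mimics how commands inside a view are displayed.

```python
def wred_table(name: str, roce_low: int, roce_high: int,
               other_low: int = 37999, other_high: int = 38000,
               probability: int = 20, roce_queue: int = 3,
               ecn_queues: tuple = (3, 6)) -> list:
    """Build the WRED table command lines used in this example."""
    lines = [f"qos wred queue table {name}"]
    for queue in range(8):
        # Small limits for the RoCE queue, large limits for every other queue.
        low, high = (roce_low, roce_high) if queue == roce_queue else (other_low, other_high)
        for level in (0, 1, 2):
            lines.append(f" queue {queue} drop-level {level} low-limit {low} "
                         f"high-limit {high} discard-probability {probability}")
        if queue == roce_queue:
            lines.append(f" queue {queue} weighting-constant 0")
        if queue in ecn_queues:  # RoCE queue and CNP queue
            lines.append(f" queue {queue} ecn")
    return lines


if __name__ == "__main__":
    for template in (wred_table("100G-WRED-Template", 1000, 2000),
                     wred_table("25G-WRED-Template", 400, 1625)):
        print("\n".join(template))
```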

Configuring priority mapping

NOTE:

· To configure PFC on an interface connecting a leaf device to a server, you must configure the interface to trust the 802.1p or DSCP priority in packets. To configure PFC on a Layer 3 interface connecting a leaf device to a spine device, you must configure the interface to trust the DSCP priority in packets.

· As a best practice, configure servers to mark outgoing packets with a DSCP priority so that the packets are enqueued by DSCP priority. In this case, configure the leaf interfaces connected to servers to trust the DSCP priority in packets. If a server cannot mark packets with a DSCP priority, configure the leaf interface connected to that server to trust the 802.1p priority in packets, and configure DSCP-to-802.1p mappings for all involved packets.

· This section describes how to configure DSCP-to-802.1p mappings when an interface trusts the 802.1p priority in packets. Skip this section if the interfaces trust the DSCP priority in packets.

|

Leaf 1 |

Leaf 2 |

Description |

Remarks |

|

qos map-table dot1p-lp import 0 export 0 import 1 export 1 import 2 export 2 |

qos map-table dot1p-lp import 0 export 0 import 1 export 1 import 2 export 2 |

Configure the dot1p-lp mapping table. |

N/A |

|

traffic classifier dot1p0 operator and |

traffic classifier dot1p0 operator and |

Create a traffic class with the AND operator. |

If the downlink interface is configured with the command, the QoS policy does not need to be configured. |

|

if-match service-dot1p 0 |

if-match service-dot1p 0 |

Configure an outer 802.1p priority match criterion. |

N/A |

|

traffic classifier dot1p1 operator and |

traffic classifier dot1p1 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match service-dot1p 1 |

if-match service-dot1p 1 |

Configure an outer 802.1p priority match criterion. |

N/A |

|

traffic classifier dot1p2 operator and |

traffic classifier dot1p2 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match service-dot1p 2 |

if-match service-dot1p 2 |

Configure an outer 802.1p priority match criterion. |

N/A |

|

traffic classifier dot1p3 operator and |

traffic classifier dot1p3 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match service-dot1p 3 |

if-match service-dot1p 3 |

Configure an outer 802.1p priority match criterion. |

N/A |

|

traffic classifier dot1p4 operator and |

traffic classifier dot1p4 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match service-dot1p 4 |

if-match service-dot1p 4 |

Configure an outer 802.1p priority match criterion. |

N/A |

|

traffic classifier dot1p5 operator and |

traffic classifier dot1p5 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match service-dot1p 5 |

if-match service-dot1p 5 |

Configure an outer 802.1p priority match criterion. |

N/A |

|

traffic classifier dot1p5 operator and |

traffic classifier dot1p5 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match service-dot1p 6 |

if-match service-dot1p 6 |

Configure an outer 802.1p priority match criterion. |

N/A |

|

traffic classifier dot1p7 operator and |

traffic classifier dot1p7 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match service-dot1p 7 |

if-match service-dot1p 7 |

Configure an outer 802.1p priority match criterion. |

N/A |

|

traffic classifier dscp0 operator and |

traffic classifier dscp0 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match dscp default |

if-match dscp default |

Configure an outer 802.1p priority match criterion. |

N/A |

|

traffic classifier dscp10 operator and |

traffic classifier dscp10 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match dscp af11 |

if-match dscp af11 |

Configure a DSCP priority match criterion. |

N/A |

|

traffic classifier dscp18 operator and |

traffic classifier dscp18 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match dscp af21 |

if-match dscp af21 |

Configure a DSCP priority match criterion. |

N/A |

|

traffic classifier dscp26 operator and |

traffic classifier dscp26 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match dscp af31 |

if-match dscp af31 |

Configure a DSCP priority match criterion. |

N/A |

|

traffic classifier dscp34 operator and |

traffic classifier dscp34 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match dscp af41 |

if-match dscp af41 |

Configure a DSCP priority match criterion. |

N/A |

|

traffic classifier dscp40 operator and |

traffic classifier dscp40 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match dscp cs5 |

if-match dscp cs5 |

Configure a DSCP priority match criterion. |

N/A |

|

traffic classifier dscp48 operator and |

traffic classifier dscp48 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match dscp cs6 |

if-match dscp cs6 |

Configure a DSCP priority match criterion. |

N/A |

|

traffic classifier dscp56 operator and |

traffic classifier dscp56 operator and |

Create a traffic class with the AND operator. |

N/A |

|

if-match dscp cs7 |

if-match dscp cs7 |

Configure a DSCP priority match criterion. |

N/A |

|

traffic behavior dot1p0 |

traffic behavior dot1p0 |

Create a traffic behavior. |

N/A |

|

remark dot1p 0 |

remark dot1p 0 |

Configure an 802.1p priority marking action. |

N/A |

|

traffic behavior dot1p1 |

traffic behavior dot1p1 |

Create a traffic behavior. |

N/A |

|

remark dot1p 1 |

remark dot1p 1 |

Configure an 802.1p priority marking action. |

N/A |

|

traffic behavior dot1p2 |

traffic behavior dot1p2 |

Create a traffic behavior. |

N/A |

|

remark dot1p 2 |

remark dot1p 2 |

Configure an 802.1p priority marking action. |

N/A |

|

traffic behavior dot1p3 |

traffic behavior dot1p3 |

Create a traffic behavior. |

N/A |

|

remark dot1p 3 |

remark dot1p 3 |

Configure an 802.1p priority marking action. |

N/A |

|

traffic behavior dot1p4 |

traffic behavior dot1p4 |

Create a traffic behavior. |

N/A |

|

remark dot1p 4 |

remark dot1p 4 |

Configure an 802.1p priority marking action. |

N/A |

|

traffic behavior dot1p5 |

traffic behavior dot1p5 |

Create a traffic behavior. |

N/A |

|

remark dot1p 5 |

remark dot1p 5 |

Configure an 802.1p priority marking action. |

N/A |

|

traffic behavior dot1p5 |

traffic behavior dot1p5 |

Create a traffic behavior. |

N/A |

|

remark dot1p 6 |

remark dot1p 6 |

Configure an 802.1p priority marking action. |

N/A |

|

traffic behavior dot1p7 |

traffic behavior dot1p7 |

Create a traffic behavior. |

N/A |

|

remark dot1p 7 |

remark dot1p 7 |

Configure an 802.1p priority marking action. |

N/A |

|

traffic behavior dscp-0 |

traffic behavior dscp-0 |

Create a traffic behavior. |

N/A |

|

remark dscp default |

remark dscp default |

Configure a DSCP priority marking action. |

N/A |

|

traffic behavior dscp-af11 |

traffic behavior dscp-af11 |

Create a traffic behavior. |

N/A |

|

remark dscp af11 |

remark dscp af11 |

Configure a DSCP priority marking action. |

N/A |

|

traffic behavior dscp-af21 |

traffic behavior dscp-af21 |

Create a traffic behavior. |

N/A |

|

remark dscp af21 |

remark dscp af21 |

Configure a DSCP priority marking action. |

N/A |

|

traffic behavior dscp-af31 |

traffic behavior dscp-af31 |

Create a traffic behavior. |

N/A |

|

remark dscp af31 |

remark dscp af31 |

Configure a DSCP priority marking action. |

N/A |

|

traffic behavior dscp-af41 |

traffic behavior dscp-af41 |

Create a traffic behavior. |

N/A |

|

remark dscp af41 |

remark dscp af41 |

Configure a DSCP priority marking action. |

N/A |

|

traffic behavior dscp-cs5 |

traffic behavior dscp-cs5 |

Create a traffic behavior. |

N/A |

|

remark dscp cs5 |

remark dscp cs5 |

Configure a DSCP priority marking action. |

N/A |

|

traffic behavior dscp-cs6 |

traffic behavior dscp-cs6 |

Create a traffic behavior. |

N/A |

|

remark dscp cs6 |

remark dscp cs6 |

Configure a DSCP priority marking action. |

N/A |

|

traffic behavior dscp-cs7 |

traffic behavior dscp-cs7 |

Create a traffic behavior. |

N/A |

|

remark dscp cs7 |

remark dscp cs7 |

Configure a DSCP priority marking action. |

N/A |

|

qos policy dot1p-dscp |

qos policy dot1p-dscp |

Create a generic QoS policy. |

N/A |

|

classifier dot1p0 behavior dscp-0 |

classifier dot1p0 behavior dscp-0 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dot1p3 behavior dscp-af31 |

classifier dot1p3 behavior dscp-af31 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dot1p1 behavior dscp-af11 |

classifier dot1p1 behavior dscp-af11 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dot1p2 behavior dscp-af21 |

classifier dot1p2 behavior dscp-af21 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dot1p4 behavior dscp-af41 |

classifier dot1p4 behavior dscp-af41 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dot1p5 behavior dscp-cs5 |

classifier dot1p5 behavior dscp-cs5 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dot1p5 behavior dscp-cs6 |

classifier dot1p5 behavior dscp-cs6 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dot1p7 behavior dscp-cs7 |

classifier dot1p7 behavior dscp-cs7 |

Associate a traffic class with a traffic behavior. |

N/A |

|

qos policy dscptodot1p |

qos policy dscptodot1p |

Create a generic QoS policy. |

N/A |

|

classifier dscp0 behavior dot1p0 |

classifier dscp0 behavior dot1p0 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dscp26 behavior dot1p3 |

classifier dscp26 behavior dot1p3 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dscp10 behavior dot1p1 |

classifier dscp10 behavior dot1p1 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dscp18 behavior dot1p2 |

classifier dscp18 behavior dot1p2 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dscp34 behavior dot1p4 |

classifier dscp34 behavior dot1p4 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dscp40 behavior dot1p5 |

classifier dscp40 behavior dot1p5 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dscp48 behavior dot1p5 |

classifier dscp48 behavior dot1p5 |

Associate a traffic class with a traffic behavior. |

N/A |

|

classifier dscp56 behavior dot1p7 |

classifier dscp56 behavior dot1p7 |

Associate a traffic class with a traffic behavior. |

N/A |
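The two policies above pair named PHB behaviors (af31, cs5, and so on) with numeric DSCP classifiers (dscp26, dscp40, and so on). The following sketch shows where those numbers come from, using the standard codepoint formulas CSn = 8n (RFC 2474) and AFxy = 8x + 2y (RFC 2597). The helper name `dscp_value` is hypothetical and only illustrates the arithmetic.

```python
import re

def dscp_value(phb: str) -> int:
    """Return the 6-bit DSCP codepoint for a PHB name such as 'af31', 'cs5', or '0'."""
    phb = phb.lower()
    if phb.isdigit():
        return int(phb)                  # plain numeric value, e.g. the "dscp-0" behavior
    m = re.fullmatch(r"cs([0-7])", phb)
    if m:
        return 8 * int(m.group(1))       # Class Selector: CSn = 8n (RFC 2474)
    m = re.fullmatch(r"af([1-4])([1-3])", phb)
    if m:
        x, y = int(m.group(1)), int(m.group(2))
        return 8 * x + 2 * y             # Assured Forwarding: AFxy = 8x + 2y (RFC 2597)
    raise ValueError(f"unknown PHB name: {phb}")

# PHB names used by the remark behaviors in the dot1p-dscp policy
BEHAVIOR_PHBS = ["0", "af11", "af21", "af31", "af41", "cs5", "cs6", "cs7"]

for phb in BEHAVIOR_PHBS:
    # each value matches a "classifier dscpN" name in the dscptodot1p policy
    print(f"{phb:>4} -> DSCP {dscp_value(phb)}")
```

For example, AF31 = 8 x 3 + 2 x 1 = 26, which is why classifier dscp26 maps back to behavior dot1p3, the RoCE priority.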

Configuring the links towards the spine tier

|

Leaf 1 |

Leaf 2 |

Description |

|

monitor-link group 1 |

monitor-link group 1 |

Configure a monitor link group. |

|

interface HundredGigE1/0/25 |

interface HundredGigE1/0/25 |

Configure the interface connected to Spine 1. |

|

port link-mode route |

port link-mode route |

Configure the interface to operate in route mode as a Layer 3 interface. |

|

ip address 172.16.2.154 255.255.255.252 |

ip address 172.16.2.158 255.255.255.252 |

Configure an IP address. |

|

port monitor-link group 1 uplink |

port monitor-link group 1 uplink |

Configure the interface as the uplink interface of the monitor link group. |

|

interface HundredGigE1/0/26 |

interface HundredGigE1/0/26 |

Configure the interface connected to Spine 2. |

|

port link-mode route |

port link-mode route |

Configure the interface to operate in route mode as a Layer 3 interface. |

|

ip address 172.16.3.154 255.255.255.252 |

ip address 172.16.3.158 255.255.255.252 |

Configure an IP address. |

|

port monitor-link group 1 uplink |

port monitor-link group 1 uplink |

Configure the interface as the uplink interface of the monitor link group. |
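Each uplink uses mask 255.255.255.252 (/30), so every point-to-point subnet has exactly two usable addresses: the leaf's and the spine's. The sketch below derives the spine-side address from the leaf-side address; the results (172.16.2.153, 172.16.3.153, 172.16.2.157, 172.16.3.157) are the peers used in the underlay BGP configuration. The dictionary and the `far_end_address` helper are hypothetical illustrations.

```python
import ipaddress

# Leaf-side uplink addresses from the table; mask 255.255.255.252 is a /30
LEAF_UPLINKS = {
    ("Leaf 1", "HundredGigE1/0/25"): "172.16.2.154/255.255.255.252",
    ("Leaf 1", "HundredGigE1/0/26"): "172.16.3.154/255.255.255.252",
    ("Leaf 2", "HundredGigE1/0/25"): "172.16.2.158/255.255.255.252",
    ("Leaf 2", "HundredGigE1/0/26"): "172.16.3.158/255.255.255.252",
}

def far_end_address(local: str) -> ipaddress.IPv4Address:
    """Return the other usable host address of a /30 point-to-point subnet."""
    iface = ipaddress.IPv4Interface(local)
    hosts = list(iface.network.hosts())  # network and broadcast addresses are excluded
    if len(hosts) != 2:
        raise ValueError(f"{local} is not on a /30 point-to-point subnet")
    return hosts[0] if hosts[1] == iface.ip else hosts[1]

for (leaf, port), addr in LEAF_UPLINKS.items():
    print(f"{leaf} {port}: {addr.split('/')[0]} <-> spine {far_end_address(addr)}")
```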

Configuring RoCE settings for the links towards the spine tier

Configuring a WRED table

|

Leaf 1 |

Leaf 2 |

Description |

Remarks |

|

interface range HundredGigE1/0/25 HundredGigE1/0/26 |

interface range HundredGigE1/0/25 HundredGigE1/0/26 |

Configure the interfaces connected to the spine devices. |

N/A |

|

priority-flow-control deadlock enable |

priority-flow-control deadlock enable |

Enable PFC deadlock detection on an interface. |

N/A |

|

priority-flow-control enable |

priority-flow-control enable |

Enable PFC on the interfaces. |

N/A |

|

priority-flow-control no-drop dot1p 3 |

priority-flow-control no-drop dot1p 3 |

Enable PFC for the queue of RoCE packets. |

N/A |

|

priority-flow-control dot1p 3 headroom 491 |

priority-flow-control dot1p 3 headroom 491 |

Set the headroom buffer threshold to 491 for 802.1p priority 3. |

After you enable PFC for the specified 802.1p priority, the device automatically deploys the PFC threshold settings. For more information, see "Recommended PFC settings." |

|

priority-flow-control dot1p 3 reserved-buffer 17 |

priority-flow-control dot1p 3 reserved-buffer 17 |

Set the PFC reserved threshold. |

|

|

priority-flow-control dot1p 3 ingress-buffer dynamic 5 |

priority-flow-control dot1p 3 ingress-buffer dynamic 5 |

Set the dynamic back pressure frame triggering threshold. |

|

|

priority-flow-control dot1p 3 ingress-threshold-offset 12 |

priority-flow-control dot1p 3 ingress-threshold-offset 12 |

Set the offset between the back pressure frame stopping threshold and triggering threshold. |

|

|

qos trust dscp |

qos trust dscp |

Configure the interfaces to trust the DSCP priority. |

N/A |

|

qos wfq byte-count |

qos wfq byte-count |

Enable byte-count WFQ. |

N/A |

|

qos wfq be group 1 byte-count 15 |

qos wfq be group 1 byte-count 15 |

Configure the weight for queue 0. |

Adjust the weight according to your business needs. |

|

qos wfq af1 group 1 byte-count 2 |

qos wfq af1 group 1 byte-count 2 |

Configure the weight for queue 1. |

Adjust the weight according to your business needs. |

|

qos wfq af2 group 1 byte-count 2 |

qos wfq af2 group 1 byte-count 2 |

Configure the weight for queue 2. |

Adjust the weight according to your business needs. |

|

qos wfq af3 group 1 byte-count 60 |

qos wfq af3 group 1 byte-count 60 |

Configure the weight for queue 3. |

Adjust the weight according to your business needs. In this example, the weights of the RoCE queue (queue 3) and queue 0 are in a 4:1 ratio (60:15). |

|

qos wfq cs6 group sp |

qos wfq cs6 group sp |

Assign queue 6 to the SP group. |

Adjust the setting according to your business needs. |

|

qos wfq cs7 group sp |

qos wfq cs7 group sp |

Assign queue 7 to the SP group. |

Adjust the setting according to your business needs. |

|

qos wred apply 100G-WRED-Template |

qos wred apply 100G-WRED-Template |

Apply the WRED table to the interface. |

N/A |

|

qos gts queue 6 cir 50000000 cbs 16000000 |

qos gts queue 6 cir 50000000 cbs 16000000 |

Configure GTS with a CIR of 50 Gbps for the CNP queue. |

N/A |
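The byte-count WFQ weights above determine how the bandwidth left over after the SP queues (queues 6 and 7) is divided among the WFQ group 1 queues. The sketch below computes the long-run shares, assuming that only queues 0 through 3 carry traffic in group 1 and that all four are continuously backlogged. It is a simplified model of weighted fair queuing, not the switch's scheduler; the names are hypothetical.

```python
from fractions import Fraction

# Byte-count WFQ weights from the table: be = queue 0, af1 = queue 1,
# af2 = queue 2, af3 = queue 3 (RoCE). Queues 6 (cs6) and 7 (cs7) are in the
# SP group, are served first, and are therefore not part of this calculation.
WFQ_GROUP1 = {"be": 15, "af1": 2, "af2": 2, "af3": 60}

def wfq_shares(weights: dict) -> dict:
    """Long-run share of the group 1 bandwidth when every listed queue is backlogged."""
    total = sum(weights.values())
    return {queue: Fraction(w, total) for queue, w in weights.items()}

for queue, share in wfq_shares(WFQ_GROUP1).items():
    print(f"{queue}: {float(share):.1%} of the bandwidth left after the SP queues")

print("RoCE queue : queue 0 =", Fraction(WFQ_GROUP1["af3"], WFQ_GROUP1["be"]))
```

Under these assumptions the RoCE queue receives 60/79 (about 76%) of the group 1 bandwidth, and its weight is four times that of queue 0.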

Configuring WRED on an interface

|

Leaf 1 |

Leaf 2 |

Description |

Remarks |

|

interface range HundredGigE1/0/25 HundredGigE1/0/26 |

interface range HundredGigE1/0/25 HundredGigE1/0/26 |

Configure the interfaces connected to the spine devices. |

N/A |

|

priority-flow-control deadlock enable |

priority-flow-control deadlock enable |

Enable PFC deadlock detection on an interface. |

N/A |

|

priority-flow-control enable |

priority-flow-control enable |

Enable PFC on the interfaces. |

N/A |

|

priority-flow-control no-drop dot1p 3 |

priority-flow-control no-drop dot1p 3 |

Enable PFC for the queue of RoCE packets. |

N/A |

|

priority-flow-control dot1p 3 headroom 491 |

priority-flow-control dot1p 3 headroom 491 |

Set the headroom buffer threshold to 491 for 802.1p priority 3. |

After you enable PFC for the specified 802.1p priority, the device automatically deploys the PFC threshold settings. For more information, see "Recommended PFC settings." |

|

priority-flow-control dot1p 3 reserved-buffer 17 |

priority-flow-control dot1p 3 reserved-buffer 17 |

Set the PFC reserved threshold. |

|

|

priority-flow-control dot1p 3 ingress-buffer dynamic 5 |

priority-flow-control dot1p 3 ingress-buffer dynamic 5 |

Set the dynamic back pressure frame triggering threshold. |

|

|

priority-flow-control dot1p 3 ingress-threshold-offset 12 |

priority-flow-control dot1p 3 ingress-threshold-offset 12 |

Set the offset between the back pressure frame stopping threshold and triggering threshold. |

|

|

qos trust dscp |

qos trust dscp |

Configure the interfaces to trust the DSCP priority. |

N/A |

|

qos wfq byte-count |

qos wfq byte-count |

Enable byte-count WFQ. |

N/A |

|

qos wfq be group 1 byte-count 15 |

qos wfq be group 1 byte-count 15 |

Configure the weight for queue 0. |

Adjust the weight according to your business needs. |

|

qos wfq af1 group 1 byte-count 2 |

qos wfq af1 group 1 byte-count 2 |

Configure the weight for queue 1. |

Adjust the weight according to your business needs. |

|

qos wfq af2 group 1 byte-count 2 |

qos wfq af2 group 1 byte-count 2 |

Configure the weight for queue 2. |

Adjust the weight according to your business needs. |

|

qos wfq af3 group 1 byte-count 60 |

qos wfq af3 group 1 byte-count 60 |

Configure the weight for queue 3. |

Adjust the weight according to your business needs. In this example, the weights of the RoCE queue (queue 3) and queue 0 are in a 4:1 ratio (60:15). |

|

qos wfq cs6 group sp |

qos wfq cs6 group sp |

Assign queue 6 to the SP group. |

Adjust the setting according to your business needs. |

|

qos wfq cs7 group sp |

qos wfq cs7 group sp |

Assign queue 7 to the SP group. |

Adjust the setting according to your business needs. |

|

qos wred queue 3 drop-level 0 low-limit 1000 high-limit 2000 discard-probability 20 qos wred queue 3 drop-level 1 low-limit 1300 high-limit 2100 discard-probability 20 qos wred queue 3 drop-level 2 low-limit 1300 high-limit 2100 discard-probability 20 |

qos wred queue 3 drop-level 0 low-limit 1000 high-limit 2000 discard-probability 20 qos wred queue 3 drop-level 1 low-limit 1300 high-limit 2100 discard-probability 20 qos wred queue 3 drop-level 2 low-limit 1300 high-limit 2100 discard-probability 20 |

Set the drop-related parameters for the RoCE queue. |

Set small values for the low limit and high limit for the RoCE queue and large values for other queues. |

|

qos wred queue 3 weighting-constant 0 |

qos wred queue 3 weighting-constant 0 |

Set the WRED exponent for average queue size calculation for the RoCE queue. |

N/A |

|

qos wred queue 3 ecn |

qos wred queue 3 ecn |

Enable ECN for the RoCE queue. |

N/A |

|

qos wred queue 6 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20 qos wred queue 6 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20 qos wred queue 6 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 |

qos wred queue 6 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20 qos wred queue 6 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20 qos wred queue 6 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 |

Set the drop-related parameters for the CNP queue. |

N/A |

|

qos wred queue 6 ecn |

qos wred queue 6 ecn |

Enable ECN for the CNP queue. |

N/A |

|

qos gts queue 6 cir 50000000 cbs 16000000 |

qos gts queue 6 cir 50000000 cbs 16000000 |

Configure GTS with a CIR of 50 Gbps for the CNP queue. |

N/A |
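The WRED rows above can be read with the textbook RED model: an exponentially weighted average queue size is compared against the low and high limits, and the drop (or, with ECN enabled, marking) probability rises linearly toward the configured discard probability between them. The sketch below is that classic model under stated assumptions; the switch's internal calculation, the threshold units, and its handling of ECN-capable packets above the high limit may differ. Function names are hypothetical.

```python
def red_average(prev_avg: float, qlen: float, weighting_constant: int) -> float:
    """Exponentially weighted average queue size: avg = avg*(1 - 2^-n) + qlen*2^-n."""
    w = 2.0 ** -weighting_constant
    return prev_avg * (1 - w) + qlen * w

def mark_probability(avg: float, low: float, high: float, discard_pct: float) -> float:
    """Classic RED curve: 0 below low-limit, rising linearly to discard_pct at high-limit.

    At or above high-limit, classic RED acts on every packet (probability 1.0);
    how the switch treats ECN-capable packets in that region is not modeled here.
    """
    if avg < low:
        return 0.0
    if avg >= high:
        return 1.0
    return discard_pct / 100 * (avg - low) / (high - low)

# RoCE queue 3, drop level 0: low-limit 1000, high-limit 2000, discard-probability 20.
# weighting-constant 0 gives w = 1, so the "average" is the instantaneous queue size.
avg = red_average(prev_avg=0, qlen=1500, weighting_constant=0)
print(avg, mark_probability(avg, 1000, 2000, 20))

# CNP queue 6: low-limit 37999, high-limit 38000, so it is effectively never marked
# or dropped by WRED until the queue is close to 38000.
print(mark_probability(1500, 37999, 38000, 20))
```

Setting the weighting constant to 0 makes WRED react to the current queue depth immediately, which suits latency-sensitive RoCE traffic that relies on prompt ECN marking.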

Configuring M-LAG

|

Leaf 1 |

Leaf 2 |

Description |

Remarks |

|

interface Bridge-Aggregation1000 |

interface Bridge-Aggregation1000 |

Create the Layer 2 aggregate interface to be used as the peer-link interface and enter its interface view. |

N/A |

|

link-aggregation mode dynamic |

link-aggregation mode dynamic |

Configure the aggregate interface to operate in dynamic mode. |

N/A |

|

quit |

quit |

Return to system view. |

N/A |

|

interface range HundredGigE1/0/29 to HundredGigE1/0/32 |

interface range HundredGigE1/0/29 to HundredGigE1/0/32 |

Enter interface range view. |

N/A |

|

port link-aggregation group 1000 |

port link-aggregation group 1000 |

Assign the ports to the link aggregation group for the peer-link interface (aggregation group 1000). |

N/A |

|

quit |

quit |

Return to system view. |

N/A |

|

interface Bridge-Aggregation1000 |

interface Bridge-Aggregation1000 |

Enter the view of the aggregate interface to be used as the peer-link interface. |

N/A |

|

port m-lag peer-link 1 |

port m-lag peer-link 1 |

Specify the aggregate interface as the peer-link interface. |

N/A |

|

quit |

quit |

Return to system view. |

N/A |

|

ip vpn-instance M-LAGKeepalive |

ip vpn-instance M-LAGKeepalive |

Create a VPN instance used for M-LAG keepalive. |

N/A |

|

quit |

quit |

Return to system view. |

N/A |

|

interface Route-Aggregation1000 |

interface Route-Aggregation1000 |

Create the Layer 3 aggregate interface to be used as the keepalive interface. |

N/A |

|

ip binding vpn-instance M-LAGKeepalive |

ip binding vpn-instance M-LAGKeepalive |

Associate the interface with the VPN instance. |

N/A |

|

ip address 1.1.1.1 255.255.255.252 |

ip address 1.1.1.2 255.255.255.252 |

Assign an IP address to the keepalive interface. |

N/A |

|

link-aggregation mode dynamic |

link-aggregation mode dynamic |

Configure the aggregate interface to operate in dynamic mode. |

N/A |

|

quit |

quit |

Return to system view. |

N/A |

|

interface Twenty-FiveGigE1/0/55 |

interface Twenty-FiveGigE1/0/55 |

Configure the interface as a member port of the keepalive interface. |

N/A |

|

port link-mode route |

port link-mode route |

Configure the interface for keepalive detection to operate in route mode as a Layer 3 interface. |

N/A |

|

port link-aggregation group 1000 |

port link-aggregation group 1000 |

Assign the port to the aggregation group of the keepalive interface (Route-Aggregation 1000). |

N/A |

|

quit |

quit |

Return to system view. |

N/A |

|

interface Twenty-FiveGigE1/0/56 |

interface Twenty-FiveGigE1/0/56 |

Configure the interface as a member port of the keepalive interface. |

N/A |

|

port link-mode route |

port link-mode route |

Configure the interface for keepalive detection to operate in route mode as a Layer 3 interface. |

N/A |

|

port link-aggregation group 1000 |

port link-aggregation group 1000 |

Assign the port to the aggregation group of the keepalive interface (Route-Aggregation 1000). |

N/A |

|

quit |

quit |

Return to system view. |

N/A |

|

m-lag role priority 50 |

m-lag role priority 100 |

Set the M-LAG role priority. |

N/A |

|

m-lag system-mac 2001-0000-0018 |

m-lag system-mac 2001-0000-0018 |

Configure the M-LAG system MAC address. |

You must configure the same M-LAG system MAC address for all M-LAG member devices. |

|

m-lag system-number 1 |

m-lag system-number 2 |

Set the M-LAG system number. |

You must assign different M-LAG system numbers to the member devices in an M-LAG system. |

|

m-lag system-priority 110 |

m-lag system-priority 110 |

(Optional.) Set the M-LAG system priority. |

You must set the same M-LAG system priority on the member devices in an M-LAG system. The lower the value, the higher the priority. |

|

m-lag keepalive ip destination 1.1.1.2 source 1.1.1.1 vpn-instance M-LAGKeepalive |

m-lag keepalive ip destination 1.1.1.1 source 1.1.1.2 vpn-instance M-LAGKeepalive |

Configure M-LAG keepalive packet parameters. |

If the interface that owns the keepalive source IP address is not already excluded from the MAD shutdown action, exclude it. |

|

m-lag mad exclude interface LoopBack1 |

m-lag mad exclude interface LoopBack1 |

Exclude Loopback1 from the shutdown action by M-LAG MAD. |

N/A |

|

m-lag mad exclude interface Route-Aggregation1000 |

m-lag mad exclude interface Route-Aggregation1000 |

Exclude Route-Aggregation 1000 from the shutdown action by M-LAG MAD. |

N/A |

|

m-lag mad exclude interface Twenty-FiveGigE1/0/55 |

m-lag mad exclude interface Twenty-FiveGigE1/0/55 |

Exclude Twenty-FiveGigE 1/0/55 from the shutdown action by M-LAG MAD. |

N/A |

|

m-lag mad exclude interface Twenty-FiveGigE1/0/56 |

m-lag mad exclude interface Twenty-FiveGigE1/0/56 |

Exclude Twenty-FiveGigE 1/0/56 from the shutdown action by M-LAG MAD. |

N/A |

|

m-lag mad exclude interface M-GigabitEthernet0/0/0 |

m-lag mad exclude interface M-GigabitEthernet0/0/0 |

Exclude M-GigabitEthernet 0/0/0 from the shutdown action by M-LAG MAD. |

N/A |
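Several M-LAG settings in the table must be identical on both member devices (system MAC address and system priority), some must differ (system number), and the keepalive source and destination addresses must mirror each other within the keepalive subnet (1.1.1.0/30). The following sketch checks those rules for the Leaf 1 and Leaf 2 values. The `MlagSettings` model and `check_pair` helper are hypothetical and do not correspond to any device API.

```python
import ipaddress
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class MlagSettings:
    """Subset of the M-LAG settings in the table (hypothetical model, not a device API)."""
    system_mac: str
    system_number: int
    system_priority: int
    keepalive_source: str
    keepalive_destination: str

LEAF1 = MlagSettings("2001-0000-0018", 1, 110, "1.1.1.1", "1.1.1.2")
LEAF2 = MlagSettings("2001-0000-0018", 2, 110, "1.1.1.2", "1.1.1.1")
KEEPALIVE_NET = ipaddress.IPv4Network("1.1.1.0/30")  # ip address 1.1.1.x 255.255.255.252

def check_pair(a: MlagSettings, b: MlagSettings) -> list:
    """Return a list of rule violations for two M-LAG member devices."""
    problems = []
    if a.system_mac != b.system_mac:
        problems.append("M-LAG system MAC addresses must be identical")
    if a.system_number == b.system_number:
        problems.append("M-LAG system numbers must be different")
    if a.system_priority != b.system_priority:
        problems.append("M-LAG system priorities must be identical")
    if (a.keepalive_source, a.keepalive_destination) != (b.keepalive_destination, b.keepalive_source):
        problems.append("keepalive source and destination must mirror each other")
    for addr in (a.keepalive_source, b.keepalive_source):
        if ipaddress.IPv4Address(addr) not in KEEPALIVE_NET:
            problems.append(f"keepalive address {addr} is outside {KEEPALIVE_NET}")
    return problems

print(check_pair(LEAF1, LEAF2))                            # no violations expected
print(check_pair(LEAF1, replace(LEAF2, system_number=1)))  # duplicate system number
```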

Configuring RoCE settings for the peer link

Configuring a WRED table

|

Leaf 1 |

Leaf 2 |

Description |

Remarks |

|

interface range HundredGigE1/0/29 to HundredGigE1/0/32 |

interface range HundredGigE1/0/29 to HundredGigE1/0/32 |

Enter interface range view to configure the member ports of the peer-link interface in bulk. |

N/A |

|

qos trust dscp |

qos trust dscp |

Configure the interface to trust the DSCP priority. |

N/A |

|

qos wfq byte-count |

qos wfq byte-count |

Enable byte-count WFQ. |

N/A |

|

qos wfq be group 1 byte-count 15 |

qos wfq be group 1 byte-count 15 |

Configure the weight for queue 0. |

Adjust the weight according to your business needs. |

|

qos wfq af1 group 1 byte-count 2 |

qos wfq af1 group 1 byte-count 2 |

Configure the weight for queue 1. |

Adjust the weight according to your business needs. |

|

qos wfq af2 group 1 byte-count 2 |

qos wfq af2 group 1 byte-count 2 |

Configure the weight for queue 2. |

Adjust the weight according to your business needs. |

|

qos wfq af3 group 1 byte-count 60 |

qos wfq af3 group 1 byte-count 60 |

Configure the weight for queue 3. |

Adjust the weight according to your business needs. In this example, the weights of the RoCE queue (queue 3) and queue 0 are in a 4:1 ratio (60:15). |

|

qos wfq cs6 group sp |

qos wfq cs6 group sp |

Assign queue 6 to the SP group. |

Adjust the setting according to your business needs. |

|

qos wfq cs7 group sp |

qos wfq cs7 group sp |

Assign queue 7 to the SP group. |

Adjust the setting according to your business needs. |

|

qos wred apply 100G-WRED-Template |

qos wred apply 100G-WRED-Template |

Apply the WRED table to the interface. |

N/A |

|

qos gts queue 6 cir 50000000 cbs 16000000 |

qos gts queue 6 cir 50000000 cbs 16000000 |

Configure GTS with a CIR of 50 Gbps for the CNP queue. |

N/A |

|

quit |

quit |

Return to system view. |

N/A |

Configuring WRED on an interface

|

Leaf 1 |

Leaf 2 |

Description |

Remarks |

|

interface range HundredGigE1/0/29 to HundredGigE1/0/32 |

interface range HundredGigE1/0/29 to HundredGigE1/0/32 |

Enter interface range view to configure the member ports of the peer-link interface in bulk. |

N/A |

|

qos trust dscp |

qos trust dscp |

Configure the interface to trust the DSCP priority. |

N/A |

|

qos wfq byte-count |

qos wfq byte-count |

Enable byte-count WFQ. |

N/A |

|

qos wfq be group 1 byte-count 15 |

qos wfq be group 1 byte-count 15 |

Configure the weight for queue 0. |

Adjust the weight according to your business needs. |

|

qos wfq af1 group 1 byte-count 2 |

qos wfq af1 group 1 byte-count 2 |

Configure the weight for queue 1. |

Adjust the weight according to your business needs. |

|

qos wfq af2 group 1 byte-count 2 |

qos wfq af2 group 1 byte-count 2 |

Configure the weight for queue 2. |

Adjust the weight according to your business needs. |

|

qos wfq af3 group 1 byte-count 60 |

qos wfq af3 group 1 byte-count 60 |

Configure the weight for queue 3. |

Adjust the weight according to your business needs. In this example, the weights of the RoCE queue (queue 3) and queue 0 are in a 4:1 ratio (60:15). |

|

qos wfq cs6 group sp |

qos wfq cs6 group sp |

Assign queue 6 to the SP group. |

Adjust the setting according to your business needs. |

|

qos wfq cs7 group sp |

qos wfq cs7 group sp |

Assign queue 7 to the SP group. |

Adjust the setting according to your business needs. |

|

qos wred queue 3 drop-level 0 low-limit 1000 high-limit 2000 discard-probability 20 qos wred queue 3 drop-level 1 low-limit 1300 high-limit 2100 discard-probability 20 qos wred queue 3 drop-level 2 low-limit 1300 high-limit 2100 discard-probability 20 |

qos wred queue 3 drop-level 0 low-limit 1000 high-limit 2000 discard-probability 20 qos wred queue 3 drop-level 1 low-limit 1300 high-limit 2100 discard-probability 20 qos wred queue 3 drop-level 2 low-limit 1300 high-limit 2100 discard-probability 20 |

Set the drop-related parameters for the RoCE queue. |

Set small values for the low limit and high limit for the RoCE queue and large values for other queues. |

|

qos wred queue 3 weighting-constant 0 |

qos wred queue 3 weighting-constant 0 |

Set the WRED exponent for average queue size calculation for the RoCE queue. |

N/A |

|

qos wred queue 3 ecn |

qos wred queue 3 ecn |

Enable ECN for the RoCE queue. |

N/A |

|

qos wred queue 6 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20 qos wred queue 6 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20 qos wred queue 6 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 |

qos wred queue 6 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20 qos wred queue 6 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20 qos wred queue 6 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 |

Set the drop-related parameters for the CNP queue. |

N/A |

|

qos wred queue 6 ecn |

qos wred queue 6 ecn |

Enable ECN for the CNP queue. |

N/A |

|

qos gts queue 6 cir 50000000 cbs 16000000 |

qos gts queue 6 cir 50000000 cbs 16000000 |

Configure GTS with a CIR of 50 Gbps for the CNP queue. |

N/A |

|

quit |

quit |

Return to system view. |

N/A |

Configuring the links towards the servers

Configuring M-LAG links

|

|

NOTE: This example describes the procedure to configure Twenty-FiveGigE 1/0/1 on Leaf 1 and Leaf 2. The same procedure applies to other interfaces. |

|

Leaf 1 |

Leaf 2 |

Description |

|

interface bridge-aggregation 100 |

interface bridge-aggregation 100 |

Create an aggregate interface to be used as an M-LAG interface. |

|

link-aggregation mode dynamic |

link-aggregation mode dynamic |

Configure the aggregate interface to operate in dynamic mode. |

|

port m-lag group 1 |

port m-lag group 1 |

Assign the aggregate interface to an M-LAG group. |

|

quit |

quit |

N/A |

|

interface Twenty-FiveGigE1/0/1 |

interface Twenty-FiveGigE1/0/1 |

Enter the view of the interface connected to the server. |

|

port link-aggregation group 100 |

port link-aggregation group 100 |

Add the physical interface to the Layer 2 aggregation group. |

|

broadcast-suppression 1 |

broadcast-suppression 1 |

Enable broadcast suppression and set the broadcast suppression threshold. |

|

multicast-suppression 1 |

multicast-suppression 1 |

Enable multicast suppression and set the multicast suppression threshold. |

|

stp edged-port |

stp edged-port |

Configure the interface as an edge port. |

|

port monitor-link group 1 downlink |

port monitor-link group 1 downlink |

Configure the interface as the downlink interface of a monitor link group. |

|

quit |

quit |

N/A |

|

interface bridge-aggregation 100 |

interface bridge-aggregation 100 |

Enter the view of the M-LAG interface. |

|

port link-type trunk |

port link-type trunk |

Set the link type of the interface to trunk. |

|

undo port trunk permit vlan 1 |

undo port trunk permit vlan 1 |

Remove the trunk port from VLAN 1. |

|

port trunk permit vlan 1100 to 1500 |

port trunk permit vlan 1100 to 1500 |

Assign the trunk port to VLANs 1100 through 1500. |

|

quit |

quit |

N/A |
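The storm suppression and VLAN settings on the server-facing M-LAG interface can be sanity-checked with simple arithmetic. The sketch below assumes that the value 1 in broadcast-suppression 1 and multicast-suppression 1 is a percentage of the interface bandwidth; verify the unit against the command reference for your software version. The helper name is hypothetical.

```python
def suppression_threshold_mbps(port_speed_gbps: float, ratio_pct: float) -> float:
    """Allowed rate before suppression, assuming the value is a percentage of port bandwidth."""
    return port_speed_gbps * 1000 * ratio_pct / 100

# Twenty-FiveGigE1/0/1 with broadcast-suppression 1 and multicast-suppression 1
threshold = suppression_threshold_mbps(25, 1)
print(threshold, "Mbps each for broadcast and multicast traffic")

# port trunk permit vlan 1100 to 1500 is an inclusive range;
# undo port trunk permit vlan 1 removes VLAN 1 from the trunk
permitted = range(1100, 1500 + 1)
print(len(permitted), "VLANs permitted; VLAN 1 permitted:", 1 in permitted)
```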

Configuring a single link

|

Leaf 3 |

Leaf 3 |

Description |

Remarks |

|

interface range Twenty-FiveGigE1/0/1 Twenty-FiveGigE1/0/2 |

interface range Twenty-FiveGigE1/0/1 Twenty-FiveGigE1/0/2 |

Configure the interfaces connected to servers. |

N/A |

|

port link-type trunk |

port link-type trunk |

Set the link type of the interface to trunk. |

N/A |

|

undo port trunk permit vlan 1 |

undo port trunk permit vlan 1 |

Remove the trunk port from VLAN 1. |

N/A |

|

port trunk permit vlan 1100 to 1500 |

port trunk permit vlan 1100 to 1500 |

Assign the trunk port to VLANs 1100 through 1500. |

N/A |

|

broadcast-suppression 1 |

broadcast-suppression 1 |

Enable broadcast suppression and set the broadcast suppression threshold. |

N/A |

|

multicast-suppression 1 |

multicast-suppression 1 |

Enable multicast suppression and set the multicast suppression threshold. |

N/A |

|

stp edged-port |

stp edged-port |

Configure the interface as an edge port. |

N/A |

|

port monitor-link group 1 downlink |

port monitor-link group 1 downlink |

Configure the interface as the downlink interface of a monitor link group. |

N/A |

|

quit |

quit |

N/A |

N/A |

|

interface bridge-aggregation 100 |

interface bridge-aggregation 100 |

Create a Layer 2 aggregate interface. |

This step creates an empty M-LAG interface as a workaround for the restrictions described in "Restrictions and guidelines for single-homed servers attached to non-M-LAG interfaces" in M-LAG Network Planning. |

|

link-aggregation mode dynamic |

link-aggregation mode dynamic |

Configure the aggregate interface to operate in dynamic mode. |

N/A |

|

port m-lag group 1 |

port m-lag group 1 |

Assign the aggregate interface to an M-LAG group. |

N/A |

|

port link-type trunk |

port link-type trunk |

Set the link type of the interface to trunk. |

N/A |

|

undo port trunk permit vlan 1 |

undo port trunk permit vlan 1 |

Remove the trunk port from VLAN 1. |

N/A |

|

port trunk permit vlan 1100 to 1500 |

port trunk permit vlan 1100 to 1500 |

Assign the trunk port to VLANs 1100 through 1500. |

N/A |

|

quit |

quit |

N/A |

N/A |

Configuring RoCE settings for the links towards the servers

Configuring a WRED table

|

Leaf 1 |

Leaf 2 |

Description |

Remarks |

|

interface Twenty-FiveGigE1/0/1 |

interface Twenty-FiveGigE1/0/1 |

Configure the interface connected to servers. |

N/A |

|

priority-flow-control deadlock enable |

priority-flow-control deadlock enable |

Enable PFC deadlock detection on an interface. |

N/A |

|

priority-flow-control enable |

priority-flow-control enable |

Enable PFC on the interface. |

N/A |

|

priority-flow-control no-drop dot1p 3 |

priority-flow-control no-drop dot1p 3 |

Enable PFC for the queue of RoCE packets. |

N/A |

|

priority-flow-control dot1p 3 headroom 125 |

priority-flow-control dot1p 3 headroom 125 |

Set the headroom buffer threshold to 125 for 802.1p priority 3. |

After you enable PFC for the specified 802.1p priority, the device automatically deploys the PFC threshold settings. For more information, see "Recommended PFC settings." |

|

priority-flow-control dot1p 3 reserved-buffer 17 |

priority-flow-control dot1p 3 reserved-buffer 17 |

Set the PFC reserved threshold. |

|

|

priority-flow-control dot1p 3 ingress-buffer dynamic 5 |

priority-flow-control dot1p 3 ingress-buffer dynamic 5 |

Set the dynamic back pressure frame triggering threshold. |

|

|

priority-flow-control dot1p 3 ingress-threshold-offset 12 |

priority-flow-control dot1p 3 ingress-threshold-offset 12 |

Set the offset between the back pressure frame stopping threshold and triggering threshold. |

|

|

qos trust dot1p |

qos trust dot1p |

Configure the interface to trust the 802.1p priority. |

N/A |

|

qos wfq byte-count |

qos wfq byte-count |

Enable byte-count WFQ. |

N/A |

|

qos wfq be group 1 byte-count 15 |

qos wfq be group 1 byte-count 15 |

Configure the weight for queue 0. |

Adjust the weight according to your business needs. |

|

qos wfq af1 group 1 byte-count 2 |

qos wfq af1 group 1 byte-count 2 |

Configure the weight for queue 1. |

Adjust the weight according to your business needs. |

|

qos wfq af2 group 1 byte-count 2 |

qos wfq af2 group 1 byte-count 2 |

Configure the weight for queue 2. |

Adjust the weight according to your business needs. |

|

qos wfq af3 group 1 byte-count 60 |

qos wfq af3 group 1 byte-count 60 |

Configure the weight for queue 3. |

Adjust the weight according to your business needs. In this example, the weights of the RoCE queue (queue 3) and queue 0 are in a 4:1 ratio (60:15). |

|

qos wfq cs6 group sp |

qos wfq cs6 group sp |

Assign queue 6 to the SP group. |

Adjust the setting according to your business needs. |

|

qos wfq cs7 group sp |

qos wfq cs7 group sp |

Assign queue 7 to the SP group. |

Adjust the setting according to your business needs. |

|

qos apply policy dot1p-dscp inbound |

qos apply policy dot1p-dscp inbound |

Apply QoS policy dot1p-dscp in the inbound direction to map 802.1p priorities to DSCP values. |

N/A |

|

qos apply policy dscptodot1p outbound |

qos apply policy dscptodot1p outbound |

Apply QoS policy dscptodot1p in the outbound direction to map DSCP values to 802.1p priorities. |

N/A |

|

qos wred apply 25G-WRED-Template |

qos wred apply 25G-WRED-Template |

Apply the WRED table to the interface. |

N/A |

|

qos gts queue 6 cir 12500000 cbs 16000000 |

qos gts queue 6 cir 12500000 cbs 16000000 |

Configure GTS with a CIR of 12.5 Gbps for the CNP queue. |

N/A |
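The GTS rows for the CNP queue use different CIR values on the 100G and 25G ports. Assuming the qos gts CIR is expressed in kbps, the sketch below converts each value to Gbps and shows that both cap the CNP queue at half of the port's line rate. The CBS value (16000000) is not modeled, and the names are hypothetical.

```python
def cir_kbps_to_gbps(cir_kbps: int) -> float:
    """Convert a CIR expressed in kbps to Gbps."""
    return cir_kbps / 1_000_000

# (port speed in Gbps, CIR configured for CNP queue 6) as used in this example
GTS_CNP = {
    "HundredGigE (spine-facing and peer-link ports)": (100, 50_000_000),
    "Twenty-FiveGigE (server-facing ports)": (25, 12_500_000),
}

for port, (speed_gbps, cir_kbps) in GTS_CNP.items():
    cir_gbps = cir_kbps_to_gbps(cir_kbps)
    print(f"{port}: CIR {cir_gbps:g} Gbps = {cir_gbps / speed_gbps:.0%} of line rate")
```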

Configuring WRED on an interface

|

Leaf 1 |

Leaf 2 |

Description |

Remarks |

|

interface Twenty-FiveGigE1/0/1 |

interface Twenty-FiveGigE1/0/1 |

Configure the interface connected to servers. |

N/A |

|

priority-flow-control deadlock enable |

priority-flow-control deadlock enable |

Enable PFC deadlock detection on an interface. |

N/A |

|

priority-flow-control enable |

priority-flow-control enable |

Enable PFC on the interface. |

N/A |

|

priority-flow-control no-drop dot1p 3 |

priority-flow-control no-drop dot1p 3 |

Enable PFC for the queue of RoCE packets. |

N/A |

|

priority-flow-control dot1p 3 headroom 125 |

priority-flow-control dot1p 3 headroom 125 |

Set the headroom buffer threshold to 125 for 802.1p priority 3. |

After you enable PFC for the specified 802.1p priority, the device automatically deploys the PFC threshold settings. For more information, see "Recommended PFC settings." |

|

priority-flow-control dot1p 3 reserved-buffer 17 |

priority-flow-control dot1p 3 reserved-buffer 17 |

Set the PFC reserved threshold. |

|

|

priority-flow-control dot1p 3 ingress-buffer dynamic 5 |

priority-flow-control dot1p 3 ingress-buffer dynamic 5 |

Set the dynamic back pressure frame triggering threshold. |

|

|

priority-flow-control dot1p 3 ingress-threshold-offset 12 |

priority-flow-control dot1p 3 ingress-threshold-offset 12 |

Set the offset between the back pressure frame stopping threshold and triggering threshold. |

|

|

qos trust dscp |

qos trust dscp |

Configure the interface to trust the DSCP priority. |

N/A |

|

qos wfq byte-count |

qos wfq byte-count |

Enable byte-count WFQ. |

N/A |

|

qos wfq be group 1 byte-count 15 |

qos wfq be group 1 byte-count 15 |

Configure the weight for queue 0. |

Adjust the weight according to your business needs. |

|

qos wfq af1 group 1 byte-count 2 |

qos wfq af1 group 1 byte-count 2 |

Configure the weight for queue 1. |

Adjust the weight according to your business needs. |

|

qos wfq af2 group 1 byte-count 2 |

qos wfq af2 group 1 byte-count 2 |

Configure the weight for queue 2. |

Adjust the weight according to your business needs. |

|

qos wfq af3 group 1 byte-count 60 |

qos wfq af3 group 1 byte-count 60 |

Configure the weight for queue 3. |

Adjust the weight according to your business needs. In this example, the weights of the RoCE queue (queue 3) and queue 0 are in a 4:1 ratio (60:15). |

|

qos wfq cs6 group sp |

qos wfq cs6 group sp |

Assign queue 6 to the SP group. |

Adjust the setting according to your business needs. |

|

qos wfq cs7 group sp |

qos wfq cs7 group sp |

Assign queue 7 to the SP group. |

Adjust the setting according to your business needs. |

|

qos apply policy dot1p-dscp inbound |

qos apply policy dot1p-dscp inbound |

Apply QoS policy dot1p-dscp in the inbound direction to map 802.1p priorities to DSCP values. |

N/A |

|

qos apply policy dscptodot1p outbound |

qos apply policy dscptodot1p outbound |

Apply QoS policy dscptodot1p in the outbound direction to map DSCP values to 802.1p priorities. |

N/A |

|

qos wred queue 3 drop-level 0 low-limit 400 high-limit 1625 discard-probability 20 qos wred queue 3 drop-level 1 low-limit 400 high-limit 1625 discard-probability 20 qos wred queue 3 drop-level 2 low-limit 400 high-limit 1625 discard-probability 20 |

qos wred queue 3 drop-level 0 low-limit 400 high-limit 1625 discard-probability 20 qos wred queue 3 drop-level 1 low-limit 400 high-limit 1625 discard-probability 20 qos wred queue 3 drop-level 2 low-limit 400 high-limit 1625 discard-probability 20 |

Set the drop-related parameters for the RoCE queue. |

Set small values for the low limit and high limit for the RoCE queue and large values for other queues. |

|

qos wred queue 3 ecn |

qos wred queue 3 ecn |

Enable ECN for the RoCE queue. |

N/A |

|

qos wred queue 3 weighting-constant 0 |

qos wred queue 3 weighting-constant 0 |

Set the WRED exponent for average queue size calculation for the RoCE queue. |

N/A |

|

qos wred queue 6 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20
qos wred queue 6 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20
qos wred queue 6 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 |

qos wred queue 6 drop-level 0 low-limit 37999 high-limit 38000 discard-probability 20
qos wred queue 6 drop-level 1 low-limit 37999 high-limit 38000 discard-probability 20
qos wred queue 6 drop-level 2 low-limit 37999 high-limit 38000 discard-probability 20 |

Set the drop-related parameters for the CNP queue. |

N/A |

|

qos wred queue 6 ecn |

qos wred queue 6 ecn |

Enable ECN for the CNP queue. |

N/A |

|

qos gts queue 6 cir 12500000 cbs 16000000 |

qos gts queue 6 cir 12500000 cbs 16000000 |

Set the traffic shaping (GTS) parameters for the CNP queue to limit its rate. |

N/A |
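The WRED and ECN rows in the table above can be illustrated with a short calculation. The following Python sketch assumes the textbook RED model. In that model, the average queue length gives the newest sample a weight of 1/2^n, where n is the weighting constant. The marking probability is zero below the low limit and rises linearly to the discard probability at the high limit. At or above the high limit, every packet is marked. The function names are hypothetical, and the queue limits are treated as abstract queue-length units. This example does not describe the device's real unit or internal algorithm, which may differ.

```python
"""Sketch of the textbook WRED/ECN marking curve.

Assumption: the switch follows the classic linear RED model. Queue limits
are treated as abstract queue-length units; the device's real unit and
internal algorithm may differ.
"""


def wred_average(prev_avg: float, current_len: float, weighting_constant: int) -> float:
    """Exponentially weighted average queue length.

    The newest sample gets weight 1 / 2**n. With n = 0 (as configured for
    queue 3), the average simply equals the instantaneous queue length.
    """
    w = 1.0 / (2 ** weighting_constant)
    return (1.0 - w) * prev_avg + w * current_len


def mark_probability(avg_len: float, low: float, high: float, max_percent: float) -> float:
    """Probability (0.0 to 1.0) that a packet is CE-marked (ECN) or dropped."""
    if avg_len < low:
        return 0.0  # below the low limit: never marked
    if avg_len >= high:
        return 1.0  # at or above the high limit: always marked (textbook RED)
    # Between the limits the probability rises linearly up to max_percent.
    return (avg_len - low) / (high - low) * (max_percent / 100.0)


if __name__ == "__main__":
    # Queue 3 (RoCE) values from the table: low 400, high 1625, 20 percent.
    for q in (300, 400, 1012.5, 1624, 1625):
        avg = wred_average(prev_avg=0.0, current_len=q, weighting_constant=0)
        print(q, round(mark_probability(avg, 400, 1625, 20), 3))
```

Setting the weighting constant to 0 makes WRED react to the instantaneous queue length rather than to a smoothed history. Consider the CNP queue values (low limit 37999, high limit 38000) under the same model. The probability stays at zero until the queue is almost at its high limit, which matches the guidance to use large limits for queues other than the RoCE queue.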

Configuring an underlay BGP instance

|

Leaf 1 |

Leaf 2 |

Description |

Remarks |

|

bgp 64636 |

bgp 64636 |

Enable a BGP instance. |

N/A |

|

router-id 50.50.255.41 |

router-id 50.50.255.42 |

Specify a unique router ID for the BGP instance on each BGP device. |

To run BGP, a BGP instance must have a router ID. If you do not specify a router ID for the BGP instance on a device, it uses the global router ID. |

|

group Spine external |

group Spine external |

Create an EBGP peer group. |

N/A |

|

peer Spine as-number 64637 |

peer Spine as-number 64637 |

Specify the AS number of the peer group. |

N/A |

|

peer 172.16.2.153 group Spine |

peer 172.16.2.157 group Spine |

Add the specified spine device to the peer group. |

N/A |

|

peer 172.16.3.153 group Spine |

peer 172.16.3.157 group Spine |

Add the specified spine device to the peer group. |

N/A |

|

address-family ipv4 unicast |

address-family ipv4 unicast |

Create the BGP IPv4 unicast address family and enter its view. |

N/A |

|

balance 32 |

balance 32 |

Enable load balancing and set the maximum number of BGP ECMP routes for load balancing. |

N/A |

|

network 55.50.138.0 255.255.255.128 |

network 55.50.138.0 255.255.255.128 |

Inject a network into the BGP routing table and configure BGP to advertise the network. |

N/A |

|

network 55.50.153.128 255.255.255.128 |

network 55.50.153.128 255.255.255.128 |

Inject a network into the BGP routing table and configure BGP to advertise the network. |

N/A |

|

network 55.50.250.0 255.255.255.192 |

network 55.50.250.0 255.255.255.192 |

Inject a network into the BGP routing table and configure BGP to advertise the network. |

N/A |

|

network 55.50.255.41 255.255.255.255 |

network 55.50.255.41 255.255.255.255 |

Inject a network into the BGP routing table and configure BGP to advertise the network. |

N/A |

|

peer Spine enable |

peer Spine enable |

Enable the device to exchange routes with the peer group. |

N/A |
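The `balance 32` command in the table above lets BGP install up to 32 equal-cost routes. Devices typically spread traffic across such routes per flow, so that all packets of one flow follow one path. The following Python sketch illustrates that idea. The hash (CRC-32 over the 5-tuple), the rule that keeps the first routes when there are more than 32 candidates, and the host addresses in the usage lines are assumptions for illustration only. This example does not document the device's actual hash algorithm or path-selection rules.

```python
import zlib
from typing import List, Sequence, Tuple

# (source IP, destination IP, IP protocol, source port, destination port)
FiveTuple = Tuple[str, str, int, int, int]


def install_ecmp(candidates: Sequence[str], max_paths: int = 32) -> List[str]:
    """Keep at most max_paths equal-cost next hops, as 'balance 32' allows.

    Which routes survive when there are more candidates than max_paths is
    device-specific; this sketch simply keeps the first ones in input order.
    """
    return list(candidates[:max_paths])


def pick_next_hop(flow: FiveTuple, next_hops: Sequence[str]) -> str:
    """Map a flow to one next hop so that all its packets follow one path."""
    key = "|".join(str(field) for field in flow).encode("ascii")
    return next_hops[zlib.crc32(key) % len(next_hops)]


if __name__ == "__main__":
    # Leaf 1's two EBGP peers on the spine tier.
    hops = install_ecmp(["172.16.2.153", "172.16.3.153"])
    # Hypothetical hosts; RoCEv2 runs over UDP (protocol 17) destination port 4791.
    flow = ("55.50.138.10", "55.50.153.200", 17, 49152, 4791)
    print(pick_next_hop(flow, hops))
```

Hashing per flow keeps the packets of each RoCE connection in order on a single spine path, while different flows are spread across both spine devices.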

Configuring VLAN interfaces and gateways (dual-active gateways)

|

Border 1 |

Border 2 |

Description |

Remarks |

|

interface Vlan-interface1121 |

interface Vlan-interface1121 |

Create a VLAN interface. |

N/A |

|

ip address 55.50.138.124 255.255.255.128 |

ip address 55.50.138.124 255.255.255.128 |

Assign an IP address to the VLAN interface. |

N/A |

|

mac-address 0001-0001-0001 |

mac-address 0001-0001-0001 |

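Configure a MAC address for the VLAN interface. |

Configure the same IP address and MAC address on both M-LAG member devices so that both can act as the gateway at the same time. |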

|

dhcp select relay |

dhcp select relay |

Enable the DHCP relay agent on the interface. |

N/A |

|

dhcp relay server-address 55.50.128.12 |

dhcp relay server-address 55.50.128.12 |

Specify DHCP server address 55.50.128.12 on the interface. |

N/A |

|

quit |

quit |

N/A |

N/A |

|

m-lag mad exclude interface Vlan-interface 1121 |

m-lag mad exclude interface Vlan-interface 1121 |

Exclude VLAN interface 1121 from the shutdown action by M-LAG MAD. |

Execute this command in system view for each VLAN interface that serves as a gateway for the downlink servers. |
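In the dual-active gateway table above, both M-LAG member devices carry identical VLAN interface settings. The following hypothetical Python checker compares two members' gateway settings. It also derives the subnet in the form that the BGP `network` command uses. The `GatewayConfig` record and the function names are illustrative assumptions, not an H3C tool or API.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class GatewayConfig:
    """Hypothetical record of one M-LAG member's gateway VLAN interface."""
    vlan_interface: int
    ip_with_mask: str  # e.g. "55.50.138.124/255.255.255.128"
    mac: str           # e.g. "0001-0001-0001"


def check_dual_active(a: GatewayConfig, b: GatewayConfig) -> List[str]:
    """Return the mismatches that would break a dual-active gateway pair."""
    problems = []
    if a.vlan_interface != b.vlan_interface:
        problems.append("VLAN interface numbers differ")
    if ipaddress.ip_interface(a.ip_with_mask) != ipaddress.ip_interface(b.ip_with_mask):
        problems.append("gateway IP address or mask differs")
    if a.mac.lower() != b.mac.lower():
        problems.append("gateway MAC address differs")
    return problems


def gateway_subnet(cfg: GatewayConfig) -> str:
    """Subnet behind the gateway, formatted like a BGP 'network' statement."""
    net = ipaddress.ip_interface(cfg.ip_with_mask).network
    return f"{net.network_address} {net.netmask}"


if __name__ == "__main__":
    member1 = GatewayConfig(1121, "55.50.138.124/255.255.255.128", "0001-0001-0001")
    member2 = GatewayConfig(1121, "55.50.138.124/255.255.255.128", "0001-0001-0001")
    print(check_dual_active(member1, member2))  # an empty list means consistent
    print(gateway_subnet(member1))
```

For the values in this example, the derived subnet is 55.50.138.0 255.255.255.128, the same network that the underlay BGP instance advertises.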

Configuring VLAN interfaces and gateways (VRRP gateways)

|

Border 1 |

Border 2 |

Description |

Remarks |

|

interface Vlan-interface1121 |

interface Vlan-interface1121 |

Create a VLAN interface. |

N/A |

|

ip address 55.50.138.124 255.255.255.128 |

ip address 55.50.138.123 255.255.255.128 |

Assign an IP address to the VLAN interface. |

N/A |

|

vrrp vrid 1 virtual-ip 55.50.138.125 |

vrrp vrid 1 virtual-ip 55.50.138.125 |

Create an IPv4 VRRP group and assign a virtual IP address to it. |

N/A |

|

vrrp vrid 1 priority 150 |

vrrp vrid 1 priority 120 |

Set the priority of the device in the IPv4 VRRP group. |

The device with the higher priority (Border 1, priority 150) is elected as the master. |

|

dhcp select relay |

dhcp select relay |

Enable the DHCP relay agent on the interface. |

N/A |

|

dhcp relay server-address 55.50.128.12 |

dhcp relay server-address 55.50.128.12 |

Specify DHCP server address 55.50.128.12 on the interface. |

N/A |

|

quit |